EKS Cost Optimization: Tutorial & Best Practices

Elastic Kubernetes Service (EKS) is a managed Kubernetes platform that Amazon Web Services (AWS) operates. Kubernetes is an open-source container orchestration platform enabling users to automate all aspects of their containerized applications, including deployment and rollback, storage, scaling, self-healing, and network routing.

EKS users benefit from AWS automatically managing their Kubernetes control planes, allowing them to focus exclusively on their data planes (worker nodes, applications, and surrounding infrastructure).

Optimizing the costs for EKS requires understanding how various components of an EKS cluster are charged and how the cluster configuration can be tweaked to mitigate unnecessary expenditures.

The minimum cost of any EKS cluster is $73 per month ($0.10 per hour). This cost is for the managed control plane created for every EKS cluster. On top of this cost, users must provision other resources (such as EC2 instances) to run their clusters.
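As a quick check of the arithmetic, the monthly figure follows from the hourly rate if a month is taken as one-twelfth of a 365-day year (730 hours). That averaging convention is an assumption about how the figure was rounded, not something stated above:

$$
\$0.10/\text{hour} \times \frac{24 \times 365}{12}\ \text{hours/month} = \$0.10 \times 730 = \$73\ \text{per month}
$$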

This article will discuss how users can effectively approach EKS cost optimization for all resources in the cluster.

Summary of key concepts

| Optimizing worker node costs | Worker nodes are often the most expensive components in an EKS cluster, so correctly configuring them will have a significant impact on total cost of ownership. |

| Rightsizing EC2 worker nodes | Worker nodes should be rightsized using metrics data to determine optimal EC2 instance types. |

| Implementing spot instances | Spot instances can save up to 90% compared to on-demand EC2 instances; implementing this feature as part of a cost optimization strategy is essential. |

| AWS reserved instances and savings plans | Reserved instances and savings plans allow users to enter long-term contracts to commit to EC2 instance usage and spend in exchange for a discount. This can be useful for users with predictable instance usage requirements. |

| Fargate | Fargate is a serverless compute option available for EKS users. While this compute option is more expensive than EC2 instances, users may save on labor costs due to the difference in operational overhead. |

| Optimizing pod costs | Pods consume hardware capacity from worker nodes, so optimizing pod configurations is important for maintaining cost efficiency. |

| Vertically rightsizing pods | Pods should be rightsized to allocate the appropriate amount of resources, like CPU and memory. Rightsizing will avoid waste while ensuring optimal pod performance. |

| Horizontally rightsizing pod replicas | Pod replicas should be scaled appropriately according to application demands. Autoscaling solutions should prevent having unnecessary replicas consume valuable compute capacity while ensuring that enough replicas are available for the application to operate correctly. |

| Optimizing data transfer costs | Data transfer costs for AWS are often overlooked but can contribute significantly to the total cost of ownership for EKS clusters. |

| Minimize cross-zone traffic | Traffic crossing availability zones incurs additional charges, so isolating traffic within a zone can be an effective cost optimization technique. |

| Use VPC private endpoints | VPC endpoints allow users to connect to AWS services without traversing the Internet. Since Internet traffic incurs heavy charges from AWS, avoiding this will lead to significant cost savings. |

| Use VPC peering or transit gateways | AWS charges more for traffic traversing the internet than for traffic in the internal network. Therefore, leveraging VPC peering and transit gateways is useful for reducing costs by ensuring network traffic remains within the AWS internal network. |

| Implement a caching strategy | Caching responses from resources like databases can reduce unnecessary network traffic and data transfer charges while improving application performance. |

| Monitor data transfer usage | Analyzing data transfer costs via AWS Cost Explorer or other observability tools will help identify patterns and anomalies as part of a cost optimization strategy. |

| Leverage observability for cost optimization | Observability tools can gather data within an EKS cluster and surrounding AWS infrastructure to provide insight into performance bottlenecks, overallocated resources, long-term trends, and anomalous expenses. |

Optimizing worker node costs

Worker nodes are the host machines where the user’s Kubernetes pods are run. Each node in the cluster is responsible for connecting to the control plane to fetch information related to Kubernetes resources such as pods, secrets, and volumes to run on the host machine. Every EKS cluster must have at least one worker node to deploy the user’s pods.

The counterparts of worker nodes are called master nodes, which run all Kubernetes control plane components, such as the Kube Scheduler, API Server, and Kube Controller Manager. Every EKS cluster will have a fully AWS-managed control plane, so no master node configuration is required.

Worker nodes can be deployed as EC2 instances or serverless EKS Fargate nodes. This section will discuss both compute options. Worker nodes typically contribute most of an EKS cluster’s cost and must be carefully planned to mitigate unwanted expenses.

Rightsizing EC2 worker nodes

There are hundreds of EC2 instance types available on AWS. Each instance type will have a unique hardware combination (CPU, memory, network, and storage) to support specific use cases. Selecting instance types with insufficient resources will lead to resource exhaustion issues and application downtime in an EKS cluster, while choosing instance types that are too large will result in wasted resources and excess costs. A balanced approach to compute capacity will maximize application performance while controlling costs.

Rightsizing worker nodes should be done based on resource utilization data. Rightsizing involves analyzing EC2 instance utilization data to determine an appropriate instance type choice based on many resource-related metrics. While this selection can be done manually, doing so will involve the additional operational overhead of evaluating metrics data and may result in inaccurate rightsizing decisions, especially with hundreds of EC2 instance types to select from. Implementing tools like Densify to automate the rightsizing based on utilization data from a range of resource-related metrics will provide more accurate instance type selections without the administrative overhead of manual decisions made by administrators.

Implementing automated rightsizing for EC2 instances has the benefit of ensuring that an appropriate instance type is being used to support the workloads of an EKS cluster. Workloads need correctly sized instances to ensure that performance and scalability requirements are being met. Additionally, rightsizing is critical for cost optimization by reducing unnecessary compute resources.

The table below provides a brief overview of the various EC2 instance categories available for AWS. Each category of instance types is suitable for different use cases, and users can implement optimization tools to select the appropriate instance types.

| Instance type category | Description | Example instance types |

|---|---|---|

| General purpose | Provides a balance among compute, memory, and networking capacity. These instances are a good starting point for users without specialized use cases. | T2, T3, M4, M5, A1 |

| Compute optimized | These instances contain higher-performance processors with a large number of cores. | C4, C5, C6a |

| Memory optimized | These instances can contain massive amounts of memory: up to 24 TB. | R4, R5, High Memory, z1d, R6a |

| Accelerated computing | These instances contain GPUs, making them useful for use cases like machine learning. | P2, P3, P4, G3, G5 |

| Storage optimized | These instances contain high-performance NVMe SSD storage for storage-intensive workloads. | Im4gn, Is4gen, I4i |

| HPC optimized | Instances for high performance computing (HPC) applications contain a combination of powerful processors, large memory capacity, and NVMe SSD storage. | Hpc6id, Hpc6a |

Implementing spot instances

Spot instances are an affordable approach to deploying EC2 compute capacity. Users can procure unused compute capacity from AWS, typically at a discount compared to regular on-demand rates. AWS offers discounts for this type of instance because it essentially represents spare data center capacity. The pricing difference can reach 90% and will vary depending on the instance type, region, and the utilization of on-demand instances.

A significant drawback of spot instances to be aware of is the possibility of instance “interruption.” This term is used to describe an event where AWS decides to reclaim its compute capacity and terminate the spot instance. AWS may do this when it needs the capacity to serve on-demand instance requests. Since on-demand instances always have a higher priority than spot instances, the user’s spot instance will terminate to free up capacity for on-demand users.

This behavior is a significant change from on-demand instances, where users can typically expect instances to run uninterrupted for extended periods (like years), only being interrupted if a hardware failure occurs. Spot instances will provide no such assurances, and users may even experience multiple interruptions per day.

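To reduce the risk of downtime when a spot interruption occurs, users are advised to set up Pod Disruption Budgets. This Kubernetes resource limits how many pods of an application can be evicted at the same time while a node is drained, so pods on an interrupted spot instance can be rescheduled gradually while enough replicas keep serving traffic. A budget only helps if the application runs more than one replica.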
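A minimal sketch of a Pod Disruption Budget is shown here. The resource name, the `app: web` label, and the `minAvailable` value are hypothetical and would need to match the user's own workload:

```yaml
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: web-pdb              # hypothetical name
spec:
  minAvailable: 2            # keep at least two matching pods running during a drain
  selector:
    matchLabels:
      app: web               # hypothetical label; must match the pods to protect
```

With three or more replicas of the `web` application, a node drain can evict pods only as long as at least two remain available.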

Users can add spot instances to their EKS clusters by enabling them in the Auto Scaling groups that back their worker nodes. Auto Scaling groups support launching a mixture of spot and on-demand instances, which lets users balance cost savings against the need for long-running instances.
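One way to express such a mixture is the `MixedInstancesPolicy` of an Auto Scaling group. The CloudFormation fragment below is only a sketch: the subnet ID, launch template ID, instance types, and percentages are placeholders, and the launch template is assumed to already contain the EKS node bootstrap configuration.

```yaml
Resources:
  WorkerNodeGroup:                          # hypothetical logical name
    Type: AWS::AutoScaling::AutoScalingGroup
    Properties:
      MinSize: "2"
      MaxSize: "6"
      VPCZoneIdentifier:
        - subnet-EXAMPLE1                   # placeholder subnet ID
      MixedInstancesPolicy:
        InstancesDistribution:
          OnDemandBaseCapacity: 1           # always keep one on-demand instance
          OnDemandPercentageAboveBaseCapacity: 25   # remaining capacity: 25% on-demand, 75% spot
          SpotAllocationStrategy: capacity-optimized
        LaunchTemplate:
          LaunchTemplateSpecification:
            LaunchTemplateId: lt-EXAMPLE    # placeholder launch template ID
            Version: "1"
          Overrides:                        # several similar types widen the spot pool
            - InstanceType: m5.large
            - InstanceType: m5a.large
```

Listing more than one instance type gives the group more spot capacity pools to draw from, which tends to lower the chance that all spot nodes are interrupted at once.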

Note that the challenges described in the previous section about selecting the correct node size apply as much to spot instances as to reserved instances or those priced at the standard rate.

AWS reserved instances and savings plans

There are two approaches AWS offers to reduce the cost of EC2 instances by committing to long-term contracts.

Reserved instances

Reserved instances are an AWS feature allowing users to obtain a discount for EC2 capacity in exchange for committing to long-term usage (one year or three years). This feature is not specific to EKS but can assist EKS users in achieving discounts on EC2 worker node capacity when the use case fits.

Reserved instances are available in two classes:

- Standard: A standard reservation will commit the user to a specific instance family (such as T2 instances). The instance type within the same family can be modified (such as from T2.small to T2.medium), but the family cannot change (such as T2 to M5). Standard reservations provide the highest discount while also being the most restrictive. Users should implement this reservation type if they're confident that they will not need to switch instance families in the long term. These reservations can be bought and sold on the reserved instance marketplace.

- Convertible: A convertible reservation also involves committing to long-term instance utilization, but unlike standard reservations, convertible reservations allow users to change the instance family. This allows more flexibility for users who are unsure of their instance type needs, in exchange for a smaller discount than standard reservations. They cannot be bought and sold on the reserved instance marketplace.

Reserved instances are beneficial for users who understand their long-term EC2 instance requirements and are comfortable with committing to a one-year or three-year contract. The discounts for reserved instances can reach 72%, making this a significant element of a cost optimization strategy if instance usage is predictable.

That said, reserved instances are not suitable for use cases where workload requirements frequently change. There are also additional factors users should consider before implementing reservations, such as requirements related to term length, tenancy, and payment installments. These factors will impact the discount amount.

Savings plans

Savings plans are a feature allowing users to commit to a certain degree of compute spending for one-year and three-year periods.

There are two classes of savings plans:

- Compute: A compute savings plan can cover compute usage for EC2, Lambda, and Fargate, which means users can move workloads between these services while still being covered by the savings plan. The discount is not as significant as when using reserved instances but provides the flexibility of savings across multiple compute services.

- EC2 instance: An EC2 instance savings plan is specific to the EC2 service only. It provides a discount in exchange for committing to an instance family in a specific region, a commitment that resembles the family restriction of standard reserved instances.

The key differences between convertible reservations and instance savings plans are their flexibility and operational overhead. When users want to change the instance type, convertible reservations require changing the reservation details to ensure the discount is in effect. Instance savings plans don't require this additional step: Changing the instance type within the same family is automatically covered by the instance savings plan. On the other hand, the flexibility of convertible reservations is higher because it allows changing the instance family, whereas an instance savings plan cannot be modified.

Fargate

Fargate is a serverless compute option provided by AWS. It is a fully managed service where users do not need to manage EC2 instance resources to run pods in their EKS clusters. AWS manages the underlying Fargate compute infrastructure, ensures that capacity is available, replaces unhealthy hosts, and applies security patches.

Fargate is more expensive to run than an equivalently sized EC2 instance (in terms of CPU and memory) and thus does not provide an immediate cost benefit. However, Fargate can be a valuable contributor to a cost optimization strategy due to the reduced operational overhead enabled by removing EC2 instances from an EKS cluster. Users no longer need to invest valuable engineering time in maintaining EC2 fleets, which typically involves operations such as scaling, monitoring, health checking, and upgrading. Since maintaining EC2 instances imposes labor costs, implementing serverless infrastructure may be a valuable option for cost optimization.

Fargate supports specific use cases and is not suitable for all types of pods. Users should carefully evaluate whether Fargate fits their needs before attempting a migration.

Optimizing pod costs

Rightsizing pods in an EKS cluster involves modifying the resources allocated to the pod to optimize the balance between cost and performance. A pod is a collection of one or more containers grouped and deployed to a worker node. All pods consume resources (such as CPU and memory) from the underlying worker node, and pods requesting excessive resources will result in accruing unnecessary node compute capacity charges.

Choosing the appropriate values for the pod resource requests and limits in the Kubernetes manifest is a challenge because measuring utilization during peak hours is a complex task, especially for short bursts. Tools like Densify are designed to help users measure utilization peaks and valleys over time and avoid bottlenecks (if under-provisioned) or financial waste (if over-provisioned). Moreover, as explained in this short video, Densify integrates with infrastructure-as-code (IaC) tools such as Ansible and Terraform, making it easy to automate the configuration process.

Pods can vertically scale by allocating more compute resources for the containerized applications to consume. They can also be scaled horizontally by increasing the number of running pod replicas, balancing the load between identical copies of the containerized application. Both approaches need to be rightsized with correct values for effective cost optimization.

Vertically rightsizing pods

Vertically rightsizing pods involves modifying the CPU and memory resources configured in a pod’s specification to match the pod’s resource requirements appropriately.

Setting the request and limit values too high will result in Kubernetes allocating excessive hardware resources to the pod and wasting the unused compute capacity. This may cause node autoscaling solutions to launch additional worker nodes in response to the excessive resource demands. Setting the request and limit values too low will result in pods encountering performance issues such as CPU throttling, out-of-memory issues, and potential eviction from the worker node. Selecting accurate request and limit values is a challenge for administrators due to the administrative overhead of manually reviewing metrics and making resource requirement estimations. Implementing automation tools will reduce human error and save time for administrators, especially when there are many pods to rightsize.

Users are advised to benchmark and load-test their pods to determine an appropriate level of resources to define in the initial pod specification. Monitoring historical utilization metrics for a pod will provide insight into the expected resource requirements, and this data can be used to experiment with request and limit values. Historical metrics should also offer insight into average versus peak utilization, which may indicate the resource allocation needs to dynamically scale up/down over time. Optimization tools are recommended for accurately optimizing request and limit values in EKS clusters rather than relying on manually selected values that may be error-prone and cause operational overhead.

For ongoing optimization, users should consider implementing tools like Densify that can continuously analyze pod metrics and provide recommendations for configuring pod resource allocation as the load on the application changes. This will reduce the operational overhead associated with manually reviewing metrics to determine appropriate pod rightsizing values and improve the accuracy of the rightsizing recommendations by using a wide range of metric data to determine appropriate values.

The example below displays how requests and limits are defined for a pod. These values should be carefully evaluated based on metrics data to ensure pods have enough resources to perform without overallocating resources.

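    apiVersion: v1
    kind: Pod
    metadata:
      name: requests-limits-example
    spec:
      containers:
        - name: my-container
          image: nginx
          resources:
            # Requests: capacity the scheduler reserves for the container on a node
            requests:
              cpu: 100m
              memory: 128Mi
            # Limits: the maximum the container may consume
            limits:
              cpu: 200m
              memory: 256Mi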

Configuring requests and limits correctly in an EKS cluster will significantly impact worker node costs. Ensuring that these values are set accurately via automation tools will benefit cost optimization strategies and maintain application performance.
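The article recommends commercial tooling for ongoing recommendations. As a different, open-source illustration of the same idea, the sketch below uses the Kubernetes Vertical Pod Autoscaler in recommendation-only mode. It assumes the VPA components have been installed in the cluster (they are not part of a default EKS cluster) and that a Deployment named `web` exists; both names are hypothetical.

```yaml
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: web-vpa              # hypothetical name
spec:
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: web                # hypothetical Deployment to analyze
  updatePolicy:
    updateMode: "Off"        # only compute recommendations; never restart pods
```

In this mode the recommended request values appear in the object's status (for example via `kubectl describe vpa web-vpa`), so they can be reviewed before being applied to the pod specification.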

Horizontally rightsizing pod replicas

Horizontally rightsizing pods in an EKS cluster involves adjusting the number of pod replicas to accurately match the requirements of the user’s application. Rightsizing will help optimize resource utilization and improve cost efficiency.

Users can manually configure the pod replica values (for example, in a Kubernetes Deployment object). Selecting a value correctly will require understanding the application’s resource requirements. Analyzing historical metrics data for the pod’s resource utilization will provide insight into whether additional replicas are necessary to help handle the high load or whether the replica count can be reduced to avoid waste.

Pod replica values can be set automatically with the proper tooling to optimize replica counts based on current requirements. For example, pod replicas in a web application may need to be increased when receiving high traffic, causing resource utilization to increase. Implementing tooling to automate the replica values will help users optimize costs by mitigating unnecessary replicas when possible while still enabling high performance by scaling up when required.
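One built-in option for automating replica counts is the Kubernetes Horizontal Pod Autoscaler. The sketch below assumes a Deployment named `web` and a metrics source such as the Kubernetes Metrics Server installed in the cluster; the replica bounds and the 70% CPU target are illustrative values, not recommendations.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web-hpa              # hypothetical name
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: web                # hypothetical Deployment to scale
  minReplicas: 2             # floor that keeps the application available
  maxReplicas: 10            # ceiling that caps compute spend
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # percentage of the pods' CPU requests
```

Because utilization is measured against CPU requests, the accuracy of the autoscaler depends on the request values being rightsized first.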

Optimizing data transfer costs

Data transfer charges represent a significant “hidden” cost of AWS. Users often don’t consider these costs until they receive an unexpected bill. Careful consideration of an EKS cluster’s network setup will help contribute to cost optimization efforts by mitigating unnecessary data transfer charges.

Minimize cross-zone traffic

Data transfers between EC2 instances within the same availability zone are free, but cross-zone traffic has a cost. Users are advised to keep traffic between pods in the same zone if possible, especially if traffic volume is high.

For example, a cluster may be running two applications as two separate pods that require high-volume communication with each other, like a web application and a backend cache. Users can implement the Kubernetes node affinity and pod affinity features to ensure that these pods are placed within the same availability zone or even the same worker nodes. This not only reduces cross-zone traffic costs but also improves network latency. Users requiring high availability would need to deploy separate pods in other availability zones to minimize downtime during a zone failure.
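The web application and cache scenario above could be sketched with pod affinity as follows. The `app: web` and `app: cache` labels are hypothetical, and the rule is written as a preference rather than a hard requirement so that pods can still be scheduled if the cache's zone has no free capacity.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 2
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      affinity:
        podAffinity:
          # Prefer nodes in the same availability zone as pods labeled app=cache
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchLabels:
                    app: cache                          # hypothetical cache label
                topologyKey: topology.kubernetes.io/zone
      containers:
        - name: web
          image: nginx
```

Changing `topologyKey` to `kubernetes.io/hostname` would express a preference for the same worker node instead of the same zone.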

Use VPC private endpoints

A VPC endpoint allows users to connect to an AWS service directly through their VPCs without traffic traversing the Internet. This feature is helpful because Internet traffic is charged at a high rate by AWS, so mitigating unnecessary communication is recommended for cost optimization. VPC endpoints can also improve performance because they offer lower network latency.

A per-hour cost is associated with creating a VPC endpoint, so it’s only worthwhile to create for services that users heavily use. Analyzing the user’s data transfer charges by service will provide insight into whether creating a VPC endpoint will be cost-effective.

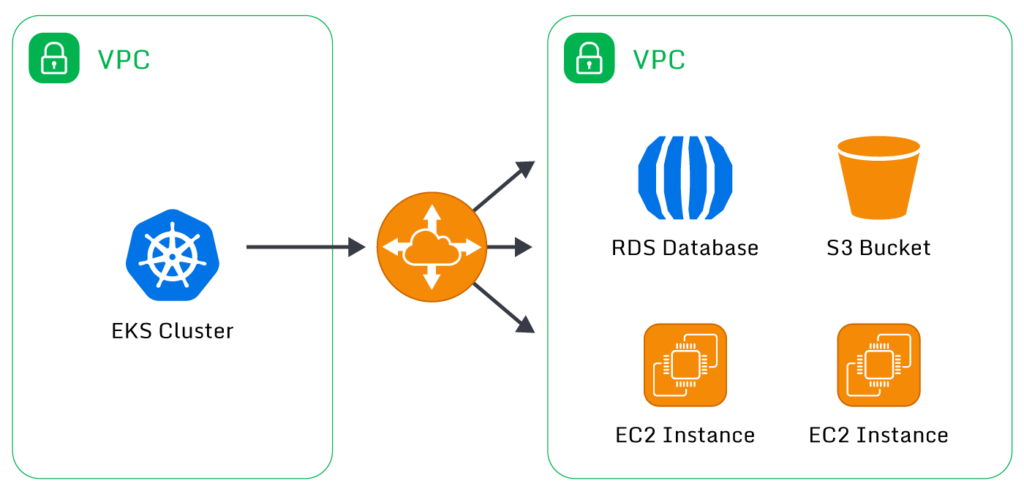
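The cost trade-off for a VPC endpoint can be written as a simple break-even condition. The symbols are introduced here for illustration only: $c_h$ is the hourly endpoint charge, $H$ the number of hours in the billing period, $c_e$ the per-GB charge for data processed by the endpoint, $c_a$ the per-GB charge of the path the traffic would otherwise take, and $G$ the volume in GB sent to the service during the period. The endpoint is cheaper when

$$
c_h H + c_e G < c_a G
\quad\Longleftrightarrow\quad
G > \frac{c_h H}{c_a - c_e}, \qquad \text{assuming } c_a > c_e .
$$

The actual rates vary by region and endpoint type, so the values should be taken from current AWS pricing and the user's own data transfer report rather than assumed.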

Use VPC peering or transit gateways

If the user’s EKS cluster requires communication with resources in other VPCs, setting up VPC peering or transit gateways will help with cost optimization. Implementing inter-VPC connectivity will ensure that traffic stays within the AWS network rather than traversing the Internet. As mentioned above, Internet traffic carries a much higher data transfer cost, so implementing these connectivity features can lead to significant cost savings.

Implement a caching strategy

Setting up appropriate caching, where possible, can significantly reduce network traffic and data transfer costs. Caching in this context means storing and reusing data for time-consuming operations to improve performance or mitigate unnecessary network activity. A caching strategy for containerized applications will need to be designed based on the application’s architecture, but common approaches include the following:

- Caching data within the pod itself using volumes on the worker node: For example, data downloaded from S3 for analysis could be temporarily stored locally for processing rather than making repeated calls to S3 for the same object.

- Caching database results with a tool like Redis: Redis is an open-source tool that allows objects to be stored in an in-memory cache, allowing faster reuse than repeatedly querying a database. Redis supports deployment as a Kubernetes resource, allowing other pods to cache data within the cluster to reduce queries to external databases like RDS.

- Caching with a content delivery network (CDN) like Amazon CloudFront or Cloudflare: This type of caching involves storing objects like web pages at edge locations to reduce traffic to the backend applications on the EKS cluster. Reducing traffic to the cluster by reusing results from previous requests will reduce data transfer costs for web applications. There are separate charges associated with CDN platforms, so a cost/benefit analysis is required to determine whether this approach will be beneficial.
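As an illustration of the in-cluster Redis option listed above, the sketch below runs a single, non-persistent Redis pod and exposes it to other pods through a Service. The names, image tag, and resource values are assumptions for demonstration, and a production cache would typically need replication and eviction settings tuned to the workload.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis-cache          # hypothetical name
spec:
  replicas: 1
  selector:
    matchLabels:
      app: redis-cache
  template:
    metadata:
      labels:
        app: redis-cache
    spec:
      containers:
        - name: redis
          image: redis:7
          ports:
            - containerPort: 6379
          resources:
            requests:
              cpu: 100m
              memory: 128Mi
            limits:
              memory: 256Mi
---
apiVersion: v1
kind: Service
metadata:
  name: redis-cache          # other pods connect to redis-cache:6379
spec:
  selector:
    app: redis-cache
  ports:
    - port: 6379
      targetPort: 6379
```

Applications in the same namespace can then check the cache at `redis-cache:6379` before querying an external database.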

Monitor data transfer usage

Monitoring data transfer charges via AWS Cost Explorer or other monitoring tools will help users understand where traffic costs can be optimized. Regularly analyzing these metrics may help identify other cost-saving opportunities. Data transfer is an often overlooked cost that leads to unwanted surprises for users who aren’t carefully monitoring their utilization levels and optimizing based on best practices.

Leveraging observability for cost optimization

Observability data, which is collected using tools such as AWS CloudWatch or Prometheus, is crucial for EKS cost optimization because it offers insights into the performance and resource usage of cluster applications and infrastructure. This data enables users to identify areas for cost optimization, performance enhancement, and budget adherence. Implementing observability tools for EKS resources (such as pods and nodes) and AWS infrastructure provides a comprehensive view of cluster operations and their impact on costs.

Kubernetes observability tools assist with cost optimization by delivering valuable information on cluster performance and resource utilization. Users need to be able to rightsize resources, detect overallocated resources, and resolve performance bottlenecks based on cluster metrics. Additionally, observability data can identify cost-related anomalies, such as sudden spikes in resource usage due to misconfigured pods, enabling users to address potentially expensive issues early on.

Applying labels to Kubernetes objects allows for categorization by use case, team, organization, etc., making it easier to analyze costs by breaking them down into separate sections.
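A short sketch of such labeling is shown here. The label keys and values (`team`, `environment`, `cost-center`) are hypothetical; any consistent scheme works, as long as the labels are also set on the pod template so that they reach the pods whose resource usage is being measured.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: checkout             # hypothetical workload
  labels:
    team: payments
    environment: production
    cost-center: cc-1234
spec:
  replicas: 2
  selector:
    matchLabels:
      app: checkout
  template:
    metadata:
      labels:
        app: checkout
        team: payments           # repeated on the pods for per-team cost breakdowns
        environment: production
        cost-center: cc-1234
    spec:
      containers:
        - name: checkout
          image: nginx
```

Pods can then be filtered by label, for example with `kubectl get pods -l team=payments`, and cost tools that group by label can report spend per team or environment.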

Observability data also reveals long-term trends that aid in cost projections. This information helps users understand cluster growth, resource utilization patterns, and future cost estimates, knowledge of which is essential for budget planning and ensuring that costs remain within expectations.

That said, observability tools provide a great deal of metrics data, which may be overwhelming for administrators to manually interpret and process into actionable cost optimization improvements. Clusters with a large number of pods and nodes will produce significant quantities of observability data (logs, metrics, and traces). High-volume observability data will be challenging for administrators to interpret into accurate rightsizing recommendations, and the operational overhead will be significant. There are also skill and expertise requirements to make accurate recommendations.

Implementing resource optimization tooling based on machine learning technologies such as Densify can help provide actionable cost optimization recommendations in ways that are more accurate, repeatable, and time-efficient than manual approaches. In addition, the implementation of these recommendations can be automated. Optimization tools can evaluate a broad range of historical metrics data against customer-defined rules, identify patterns, and provide recommendations more accurately and quickly than a human administrator can. When it comes to leveraging observability data to implement node and pod rightsizing changes, optimization tools are a useful asset for the cluster administrator to reduce operational overhead and improve cost efficiency.

Incorporating observability tools for AWS services helps administrators track all cloud infrastructure costs associated with an EKS cluster. Since cloud billing can be complex, users may need help to pinpoint the sources of their expenses, particularly in intricate EKS clusters with numerous components. Gaining insights into AWS service utilization, long-term trends, and potential anomalies is beneficial for cost optimization efforts.

Summary

Users can follow the best practices above to optimize their EKS clusters and AWS infrastructure to balance cost and performance. Determining optimal cluster configuration details can be done by implementing the appropriate resource optimization tools to provide recommendations for more cost-effective cluster designs, enabling administrators to maintain cost efficiency while meeting workload performance requirements.