A Free Guide to EKS Architecture

AWS offers a managed Kubernetes solution called Amazon Elastic Kubernetes Service (EKS). The essence of the offering is that AWS manages the Kubernetes control plane for you: it keeps the control plane highly available and scales it out and in based on the workload imposed on it.

The data plane can consist of either EC2 instances or Fargate pods. The EC2 instances can optionally be managed by AWS for you in what is called a managed node group. In addition, you have the option to run a cluster on-premises using a product called Amazon EKS Anywhere.

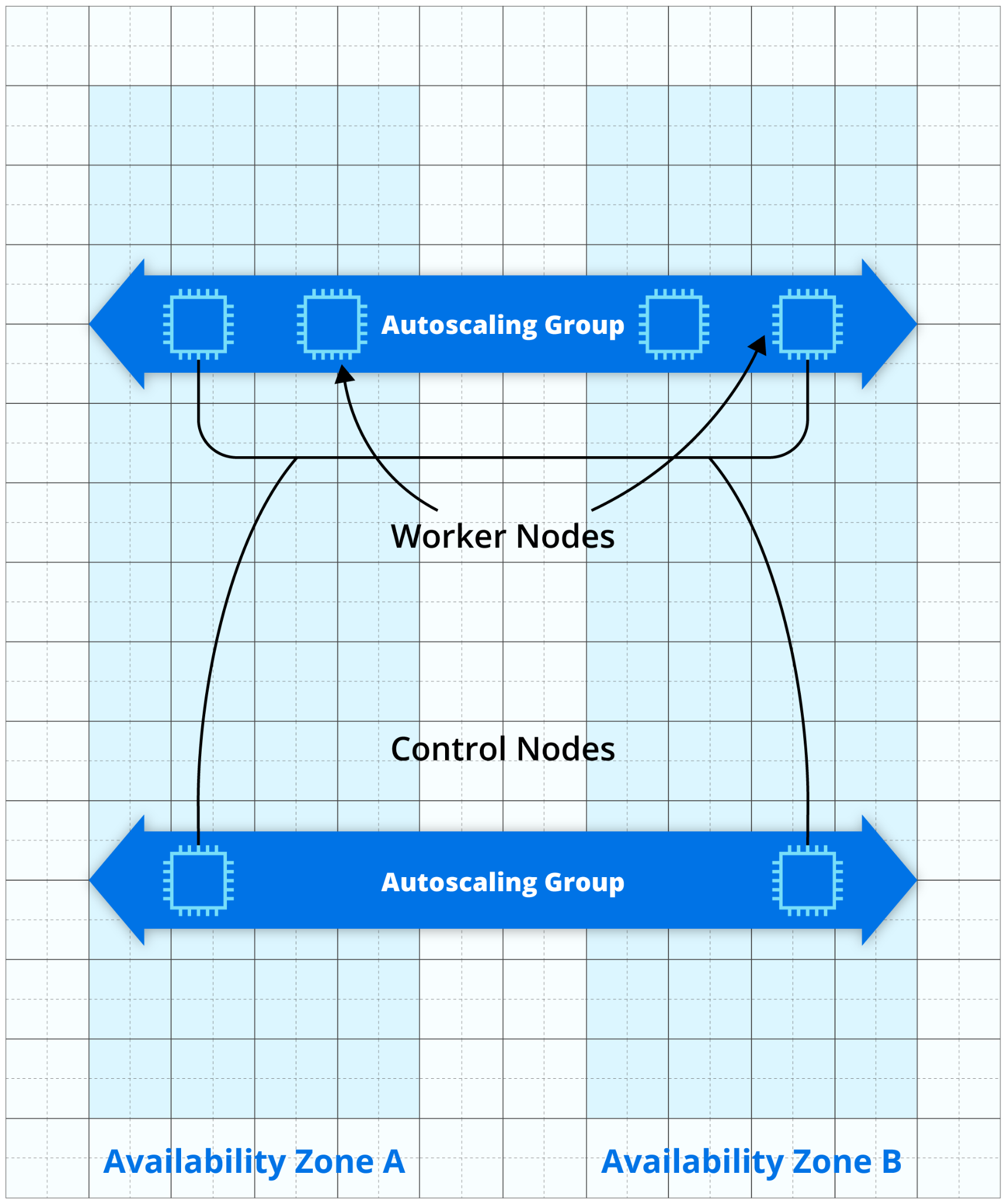

Here is a high-level diagram of a traditional approach leveraging EKS with EC2 instances:

This article will provide you with a solid overview of EKS architecture. It assumes that you are already familiar with the basics of both Kubernetes and AWS (especially EC2).

Please note that unless explicitly specified, we will cover EKS running inside the AWS cloud. There is a section on EKS Anywhere toward the end of the article, but cloud-based EKS, not on-premises, is our primary focus here.

Executive summary

The table below provides a concise summary of the information detailed later in the article.

| Topic | Summary |
| --- | --- |
| Differences between EKS and custom-built Kubernetes | With EKS, AWS will make virtually all the decisions related to the control plane. In exchange for giving up fine control in this area, you get a control plane with automated maintenance, scaling, and high availability. |
| EKS backed by EC2 instances | This arrangement mirrors traditional Kubernetes setups, with servers acting as worker nodes. The worker nodes can be either self-managed or managed automatically by AWS for you. |
| EKS backed by Fargate | This AWS-specific setup runs pods on Fargate, which is a serverless solution for running containers. The main advantage is that you don’t have worker nodes in this setup, so you are free from needing to deal with maintenance or scalability issues. |
| Elastic Container Registry (ECR) | ECR is not directly related to EKS, but EKS can use it to download container images. ECR offers both public and private registries, and it integrates scanners to check for common vulnerabilities in container images. |
| Interaction with the AWS infrastructure | AWS offers the necessary plugins so that Kubernetes integrates seamlessly with the AWS infrastructure. These plugins chiefly cover storage (access to EBS and EFS) and networking (CNI and load balancers). |
| EKS Anywhere | This recent AWS product allows you to run an EKS cluster on your own servers. It uses the same components as EKS but runs them on-premises. It does, however, have requirements and limitations. |
| Gotchas | There are a couple of gotchas to avoid when moving from Kubernetes to EKS. First, the entity that created the EKS cluster is initially the only one that can access the Kubernetes API. Second, EBS volumes are bound to a given availability zone, so you need to be careful when designing a cluster with apps that require persistent storage. |

Differences between EKS and custom-built Kubernetes

The main difference between EKS and a custom-built Kubernetes cluster is that you give up control over the control plane in exchange for AWS managing it for you. EKS is “opinionated,” and AWS will make choices for you over which you have very little or no control.

For example, EKS will spin up a minimum of three instances just for etcd plus at least two instances for the Kubernetes control components. AWS will automatically scale those instances out and in; again, you have no control over how AWS does it.

Another example involves logs from the control plane, which are sent to CloudWatch Logs. You can’t choose another destination, although you could create Lambda functions that will ship the logs received by CloudWatch Logs to another destination as a workaround.
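To make the Lambda workaround more concrete, here is a minimal sketch of a handler that decodes the payload CloudWatch Logs delivers to a subscribed function (a base64-encoded, gzip-compressed JSON document). The `forward` function is a hypothetical stand-in for whatever destination you actually ship to; it is not part of any AWS API, and the log group name in the usage note is only an example.

```python
import base64
import gzip
import json


def decode_subscription_event(event):
    """Decode a CloudWatch Logs subscription event into simple records."""
    # The payload arrives base64-encoded and gzip-compressed.
    compressed = base64.b64decode(event["awslogs"]["data"])
    payload = json.loads(gzip.decompress(compressed))
    return [
        {"log_group": payload["logGroup"], "message": entry["message"]}
        for entry in payload.get("logEvents", [])
    ]


def forward(records):
    """Hypothetical shipping step: replace with a call to your own log backend."""
    for record in records:
        print(json.dumps(record))
    return len(records)


def handler(event, context):
    """Lambda entry point: decode the batch, forward it, return the record count."""
    return forward(decode_subscription_event(event))
```

You would attach a function like this to the control plane log group (for a cluster named `demo`, that group would typically be `/aws/eks/demo/cluster`) through a subscription filter.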

If you use Fargate, the only supported Container Network Interface (CNI) plugin is the one provided by AWS, so you don’t have a choice there.

Generally speaking, EKS’s choices and limitations make sense and should be acceptable for most projects. Don’t let the limits imposed by EKS turn you off; they could even be a good thing. The value of having a fully functional cluster with a highly available control plane at the click of a button should not be underestimated.

EKS backed by EC2 instances

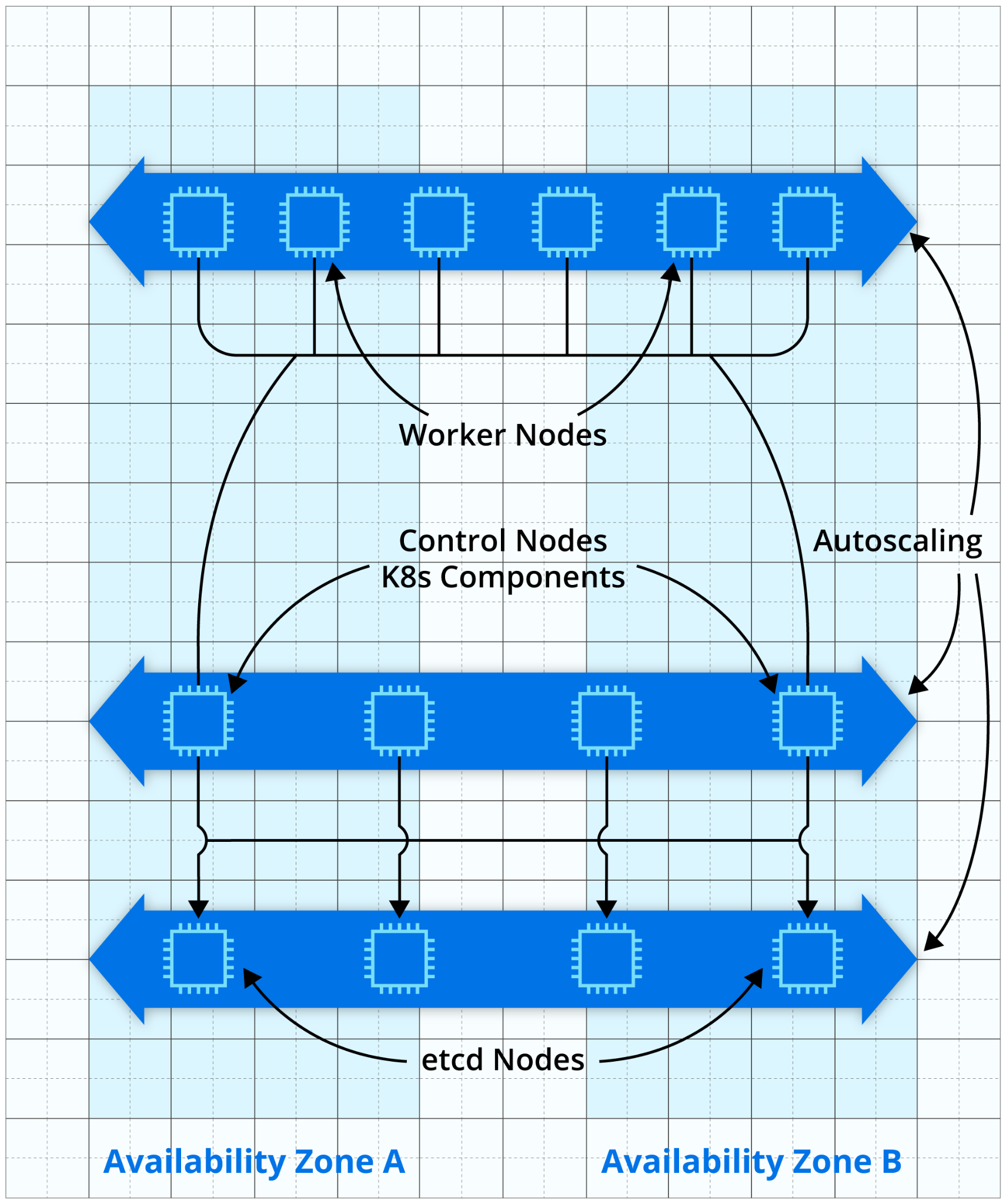

This is the more traditional setup, where Kubernetes nodes are actual EC2 instances. This configuration would look something like the following:

Control plane

When using EKS, AWS manages the control plane for you. As detailed previously, you have virtually no control over how it is managed, but you can rest assured that AWS will ensure that it is highly available, and it will scale out and in based on the workload.

The Kubernetes components (such as the API server and scheduler) will be hosted on a minimum of two EC2 instances spread over at least two availability zones (AZs). AWS runs etcd on a minimum of three EC2 instances, again spread across multiple availability zones. At least three instances are necessary for etcd because that is the smallest cluster that can lose one member and still retain a majority (quorum), which etcd needs to provide strong consistency. The etcd data store is encrypted at rest using Key Management Service (KMS).

AWS will also automatically manage the security groups attached to the above instances, so only allowed traffic can reach the Kubernetes API.
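The etcd sizing mentioned above follows from simple majority arithmetic. The sketch below (with illustrative function names, not anything provided by etcd or EKS) computes the quorum size and the number of member failures a cluster of a given size can tolerate.

```python
def quorum_size(members: int) -> int:
    """Smallest number of members that forms a strict majority."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def failures_tolerated(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum_size(members)


if __name__ == "__main__":
    for n in (1, 2, 3, 5):
        print(f"members={n} quorum={quorum_size(n)} tolerated={failures_tolerated(n)}")
```

With one or two members, no failure can be tolerated; three is the first size that survives the loss of a single member, which is why it is the practical minimum.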

Data plane

To run the EC2 instances that will comprise the nodes of your Kubernetes cluster, you can either manage them yourself (self-managed nodes), or let AWS manage them for you in what is called a managed node group. AWS even allows you to mix both types of nodes if you so wish (some self-managed and some managed for you).

Self-managed nodes

If you decide to use self-managed nodes, you will need to deploy them in auto scaling groups (ASGs). When using EKS, all nodes in a given auto scaling group must be of the same instance type, use the same Amazon Machine Image (AMI), and have the same Identity and Access Management (IAM) instance role attached. However, nothing is stopping you from using more than one ASG if you need different types of nodes for different kinds of workloads. For example, you might have some workloads that need an ARM processor and others that require a GPU.

All self-managed nodes must have a specific tag:

kubernetes.io/cluster/CLUSTER_NAME=owned

Here you will replace “CLUSTER_NAME” with the actual name of your EKS cluster.
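As a small illustration, the tag can be generated from the cluster name rather than typed by hand. The helper name `cluster_ownership_tag` and the `Key`/`Value` dictionary shape are assumptions chosen for this sketch; adapt the output to whatever tooling you use to tag instances.

```python
def cluster_ownership_tag(cluster_name: str) -> dict:
    """Build the ownership tag that self-managed nodes must carry."""
    if not cluster_name:
        raise ValueError("cluster_name must not be empty")
    return {
        "Key": f"kubernetes.io/cluster/{cluster_name}",
        "Value": "owned",
    }


if __name__ == "__main__":
    # "demo" is a placeholder cluster name.
    print(cluster_ownership_tag("demo"))
```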

Theoretically, you are free to use any AMI you want, although AWS recommends that you use one of its purpose-built AMIs. These AMIs have been rigorously tested and are reasonably free from defects. They will also facilitate onboarding your self-managed nodes into your EKS cluster. If you need customization, you can either build your own AMI based on AWS’s recommended AMIs or leverage the user data to customize your instances when they are created.

These instances are your responsibility, so you will have to manage them yourself, such as when they require system patching or a Kubernetes upgrade.

Managed node groups

The other option is to let AWS manage the worker nodes by leveraging managed node groups. Similar to self-managed nodes, managed node groups use ASGs to handle the EC2 instances. AWS will create and manage these ASGs for you; again, you can have more than one ASG if you need a heterogeneous fleet. AWS will also ensure that the EC2 instances are properly tagged, so they can be managed by the Kubernetes Cluster Autoscaler.

AWS also allows you to use a custom launch template, which will provide you with a high level of customization for the EC2 instances, such as changing the AMI or leveraging the user data. With this level of customization available in managed node groups, one might ask what purpose self-managed nodes still serve. In fact, the real-world use cases where self-managed nodes would be necessary are probably very rare.

AWS will manage all instances in managed node groups for you, taking care of tasks such as OS patching and Kubernetes upgrades. Note that EKS will manage upgrades for the control plane and the managed nodes, but not for the self-managed nodes. AWS provides detailed instructions on how to upgrade a cluster, which might involve some manual preparatory steps to bring the cluster into a state where EKS can safely perform the upgrade. The control plane is upgraded first and the managed node groups second; both steps can be done with the eksctl program.

EKS backed by Fargate

Fargate is an AWS proprietary product that allows you to run containers without specifying the nodes they should run on. In other words, it is a serverless solution to run containers.

The control plane remains the same whether EKS is backed by EC2 instances or by Fargate, so everything written in the previous section remains valid in the case where you use Fargate. The difference is that EKS will schedule pods to run on Fargate.

You will need to create one or more Fargate profiles, which are essentially configuration elements that tell EKS how and when to manage pods on Fargate. Then pods that match these Fargate profiles will be scheduled to run on Fargate.
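The matching logic can be pictured roughly as follows. This is a simplified sketch, not the EKS scheduler: it assumes each profile holds selectors made of a namespace plus optional labels, treats a pod as matching when the namespace is equal and every selector label is present on the pod, and returns the first matching profile in list order. The class and function names are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Selector:
    namespace: str
    labels: dict = field(default_factory=dict)  # optional; empty means "any labels"


@dataclass
class FargateProfile:
    name: str
    selectors: list


def selector_matches(selector, namespace, pod_labels):
    """A pod matches when the namespace is equal and all selector labels are on the pod."""
    if selector.namespace != namespace:
        return False
    return all(pod_labels.get(k) == v for k, v in selector.labels.items())


def matching_profile(profiles, namespace, pod_labels):
    """Return the name of the first profile with a matching selector, or None."""
    for profile in profiles:
        if any(selector_matches(s, namespace, pod_labels) for s in profile.selectors):
            return profile.name
    return None


if __name__ == "__main__":
    profiles = [
        FargateProfile("batch", [Selector("jobs", {"compute": "fargate"})]),
        FargateProfile("web", [Selector("frontend")]),
    ]
    print(matching_profile(profiles, "frontend", {"app": "site"}))
    print(matching_profile(profiles, "jobs", {"app": "etl"}))
```

In this sketch, a pod that matches no profile gets `None`, which corresponds to it not being scheduled on Fargate.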

There are many advantages to using Fargate. First of all, it frees you from worrying about the maintenance of worker nodes. Because Fargate is a serverless service, it also removes any requirement for supporting autoscaling at the node level, since EKS can run as few or as many pods on Fargate as required (within certain limits).

There are limitations as well. From the point of view of the Kubernetes API, each pod will appear to be running on its own node, complete with kubelet. This is somewhat inefficient: every pod brings its own “node” and kubelet, whereas in an EC2-based setup a single kubelet serves all the pods on a node.

AWS only supports the Amazon VPC CNI plugin for networking, which is probably acceptable in most use cases, but it also means that you can’t install plugins such as Calico to enforce Network Policies for pods running on Fargate. An alternative would be to use Security Groups, but then you would be moving away from a purely Kubernetes setup.

Also, pods running on Fargate can’t mount EBS-backed volumes. If your pods require persistent volumes, you can still use Elastic File System (EFS), so there is an acceptable alternative here. Finally, DaemonSets are not supported: if you require DaemonSets, Fargate won’t work for you.

For more details on using EKS with Fargate, see our dedicated article on the topic.

Elastic Container Registry

Strictly speaking, Elastic Container Registry (ECR) is not a Kubernetes component, but your EKS cluster is likely to make use of it, so it’s worth reviewing briefly. ECR is a managed service offered by AWS to store container images. You can even store Helm charts if you so choose.

The ECR offering is actually split in two: public registries and private registries. You can control which entity (such as AWS accounts or AWS services) can access private registries through the use of IAM policies. Public registries are just that, publicly accessible and managed separately from private ones.

ECR allows you to scan uploaded container images for vulnerabilities. AWS offers two scanning options: basic (which is free) and enhanced (which has a cost). The basic scanning option uses the Clair scanning tool to scan your container images when you upload them to ECR. Unfortunately, you can’t use any other tool; if you have a requirement to do so, you will need to create your own automated scanning using Lambda functions. The enhanced option uses Amazon Inspector, and it will scan for vulnerabilities both in the operating system and in the programming language packages used by the application (e.g., package dependencies).

Interaction with the AWS infrastructure

Kubernetes was designed to be extended through plugins, which is what lets it run on most cloud platforms. AWS uses this flexibility to provide the plugins that Kubernetes components need to interact with the AWS cloud, mainly for networking and storage.

On the networking side, when you create an EKS cluster, EKS will install the Amazon VPC CNI plugin for you to allow pods to use the networking facilities offered by AWS. This plugin is the only one officially supported by AWS, but if you so choose, you can use other CNI plugins. If you use Network Policies, you will need to install another plugin to enforce them; AWS recommends Calico for this purpose.

For storage, AWS offers a bespoke Container Storage Interface (CSI) plugin so that Kubernetes’ persistent volumes are backed by Elastic Block Store (EBS). This plugin is not installed by default, so you will need to install it manually or have it installed as part of your infrastructure-as-code. AWS also has a CSI plugin to leverage EFS, which can offer more flexibility than EBS. Again, you will have to explicitly install this plugin if you need it.

EKS Anywhere

EKS Anywhere is a recent product from AWS that allows you to run a Kubernetes cluster on-premises using the same components that power EKS. It uses the same control plane software components as the cloud-based EKS offering. EKS Anywhere can also optionally connect back to the AWS cloud to provide you with a dashboard view of your on-premises cluster that is similar to that used by regular EKS clusters.

With EKS Anywhere, you are responsible for managing the control plane nodes, as AWS won’t be able to access your infrastructure to do that for you. In addition, there will be no ASGs, so you will also manage all worker nodes. Another minor difference is that AWS recommends Cilium (rather than Calico) for the CNI, which can also handle Network Policies.

Note that if you need to upgrade the Kubernetes version, this will involve a number of manual steps. You might also want to have a look at this description of the main differences between EKS and EKS Anywhere.

Gotchas

There are a few issues specific to EKS that can trip you up if you are not aware of them, so let’s have a look at them here.

First of all, when you create an EKS cluster, only the entity that created the cluster (be it a user or a role) will initially have access to the Kubernetes API. On top of that, there is no record of this visible anywhere in the AWS console or API, so you should make a note of the entity that created the cluster and probably never delete it. This entity is granted system:masters permissions, in other words, full access. You can also give access to the Kubernetes API to more users/roles.

Second, if your workload is stateful and backed by EBS volumes, you must ensure that each ASG is limited to one AZ. EBS volumes are constrained to a given AZ, and if a pod were scheduled in a different AZ, it wouldn’t be able to mount its EBS volume. To keep your workload highly available, create at least one ASG per AZ you intend to use.
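The one-ASG-per-AZ layout can be sketched as a small planning helper. Everything here is illustrative: the function names, the dictionary fields, and the naming scheme are assumptions for this example and do not correspond to an AWS or eksctl schema.

```python
def plan_node_groups(cluster_name, zones, nodes_per_zone=1):
    """Return one single-AZ node group definition per distinct availability zone."""
    if not zones:
        raise ValueError("at least one availability zone is required")
    return [
        {
            "name": f"{cluster_name}-{zone}",
            "availability_zones": [zone],  # exactly one AZ per group
            "min_size": nodes_per_zone,
        }
        for zone in sorted(set(zones))
    ]


def can_mount(volume_zone, node_zone):
    """An EBS volume can only be attached to a node in the same AZ."""
    return volume_zone == node_zone


if __name__ == "__main__":
    # Zone names below are placeholders.
    for group in plan_node_groups("demo", ["us-east-1a", "us-east-1b"]):
        print(group)
```

Keeping each group in a single AZ means that when a group scales out to host a rescheduled pod, the new node lands in the same AZ as the pod’s volume.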

Conclusion

EKS provides you with a fully managed Kubernetes cluster, with one exception: you will have to manage the worker nodes in the data plane yourself if you choose to use self-managed nodes. AWS provides all the necessary plugins to ensure that Kubernetes integrates seamlessly with the AWS cloud.

EKS is a proven technology in terms of stability, robustness, and security, and it has the full backing of AWS. If you are considering running Kubernetes on AWS, evaluating EKS should be on your roadmap.

EKS makes a lot of choices for you, which are usually acceptable. Some large organizations might, however, decide to implement a Kubernetes cluster themselves for regulatory or other reasons. Such organizations usually have the manpower and financial backing required to take on such endeavors.
