How the EKS Control Plane Works


Amazon Web Services (AWS) offers a managed solution for Kubernetes clusters called Elastic Kubernetes Service (EKS). Any Kubernetes cluster, whether running on EKS or something else, is composed of two separate planes: the control plane and the data plane. The data plane is made up of worker nodes that run your workloads, while the control plane runs the Kubernetes components that control the cluster. This article will focus on the control plane of EKS and its details.

Summary of EKS control plane key concepts

The way the EKS control plane is structured is quite rigid because AWS makes a lot of decisions for you, such as ensuring that the control plane is highly available and scales in and out depending on its load. However, there are still a few important areas you can influence.

| Area | What you can control |
| --- | --- |
| Kubernetes version | You can upgrade (but not downgrade) the version of Kubernetes running on the control plane. It is highly recommended that you keep up with the Kubernetes releases, for security and compatibility purposes. EKS supports four recent versions of Kubernetes, although the latest version might not be immediately supported as soon as it comes out. |
| Addons | You can choose which addons to enable (or disable) in your cluster. AWS addons are typically used to allow Kubernetes access to AWS features (such as EBS). There are a handful of third-party addons that can help with security, monitoring, and other capabilities. |
| Logging | By default, the EKS control plane does not produce any logs. You can enable logging individually for various components of the EKS control plane, such as the Kubernetes API server or the audit subsystem. |
| Security | There are a few security aspects where you can have some input or must make decisions. You will need to decide on the security group that will be used for the control plane nodes, whether to enable secret encryption or not, and the visibility of the Kubernetes API server (public and/or private). |

Main characteristics of the EKS control plane

The main point to understand about the EKS control plane is that AWS makes a lot of choices for you, and you have very little control over them. It is therefore considered opinionated, even though AWS does have some good reasons to make these choices for you.

Spend less time optimizing Kubernetes Resources. Rely on AI-powered Kubex - an automated Kubernetes optimization platform

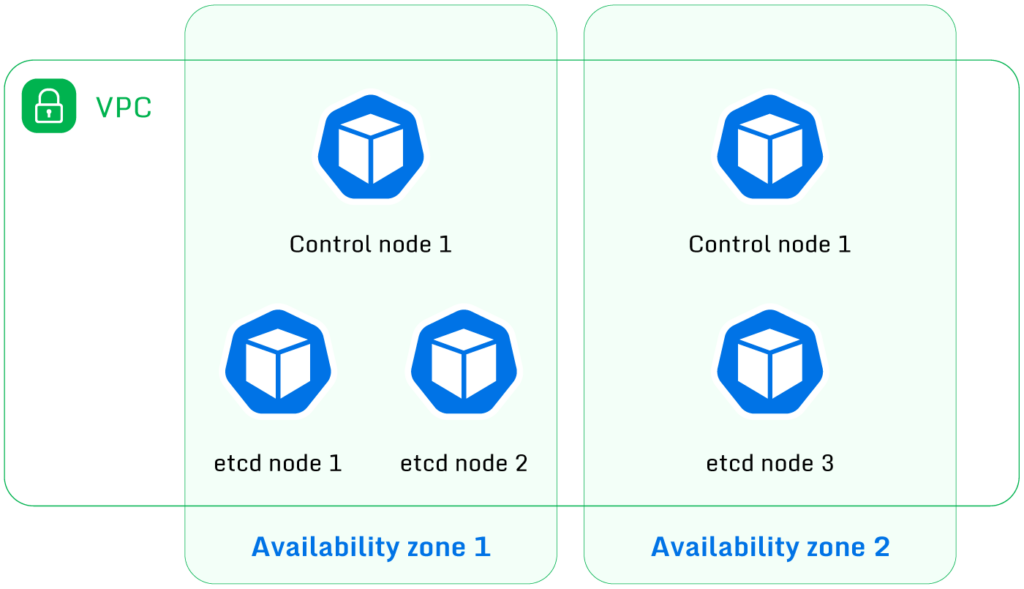

AWS ensures that the control plane is highly available. To achieve this goal, AWS runs the following in the control plane:

- A minimum of three etcd instances (etcd elects a leader by majority vote, so it is run with an odd number of instances)
- A minimum of two instances of the rest of the Kubernetes components, such as the API server and the various controllers
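The preference for an odd number of etcd instances comes down to majority arithmetic. The following is a minimal sketch of that arithmetic only; it is not EKS or etcd code, and the function names are made up for this example.

```python
def quorum(members: int) -> int:
    """Smallest number of members that forms a majority."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def failures_tolerated(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} members: quorum={quorum(n)}, "
              f"tolerates {failures_tolerated(n)} failure(s)")
```

Going from three to four members raises the quorum from two to three without tolerating any additional failure, which is why an even member count adds cost but no resilience.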

So the control plane will look like the following, with the minimum number of instances shown.

[Figure: the EKS control plane with the minimum number of instances, including two controller instances]

What you cannot control in the EKS control plane

AWS automatically scales the instances in the control plane in and out based on the load imposed on it, but the number of instances will never go lower than three for etcd and two for the Kubernetes API. AWS also automatically patches the instances so they are always up to date with respect to security issues.

Those instances are EC2 instances, but they are hidden from you. You can’t see them in the EC2 console, and they are all managed automatically by AWS.

You can configure EKS so that pods run in Fargate instead of EC2 instances in the data plane. However, even in such a configuration, the control plane components still run on EC2 instances. It is not possible to run the control plane components on Fargate.

Another useful detail is that EKS automatically encrypts the EBS volumes used by the control plane (such as those backing etcd), and it is not possible for you to turn this off.

Finally, please be aware that the EKS control plane is single-tenant. In other words, you can’t use the same EKS control plane for another Kubernetes cluster.


What you can control in the EKS control plane

So, at this stage, you might wonder what you actually can control in the EKS control plane. As it happens, there are a number of areas that are very important.

Kubernetes version and updates

First, you can control the version of Kubernetes running inside the cluster. You can select which version of Kubernetes you want when creating the cluster; AWS generally supports the four most recent versions, although the latest one might take a bit of time to be added. You can also update the Kubernetes version of an existing cluster.

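You are not obliged to update as soon as a new Kubernetes version becomes available, so your cluster might fall behind. Be aware, however, that once a version reaches the end of its AWS support window, AWS can update the control plane for you, so you are very strongly encouraged to keep up to date on your own schedule.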

Here is a summary of the steps used to update the Kubernetes version:

- Ensure that the cluster is in a state where it is ready to be updated, which mostly involves ensuring that the various nodes are running the same Kubernetes version as the control plane.
- Update the control plane using the eksctl command line.
- Update the data plane nodes to the new Kubernetes version.
- Update the cluster autoscaler (if it is installed).
- Update the EKS addons that are enabled on the cluster.

Please note that you can update the Kubernetes version only one minor revision at a time. For example, you can’t update straight from 1.22 to 1.24: You need to update to 1.23 first and then to 1.24.
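The one-minor-version-at-a-time rule can be expressed as a small planning helper. This is only an illustrative sketch: `upgrade_path` is a made-up name, and it assumes version strings of the form `major.minor` within a single major version.

```python
def upgrade_path(current: str, target: str) -> list:
    """Return every minor version to step through, in order, ending at target."""
    cur_major, cur_minor = (int(part) for part in current.split("."))
    tgt_major, tgt_minor = (int(part) for part in target.split("."))
    if cur_major != tgt_major:
        raise ValueError("this sketch only handles upgrades within one major version")
    if tgt_minor < cur_minor:
        raise ValueError("downgrades are not supported")
    # One control plane update is needed per intermediate minor version.
    return [f"{cur_major}.{minor}" for minor in range(cur_minor + 1, tgt_minor + 1)]


if __name__ == "__main__":
    print(upgrade_path("1.22", "1.24"))  # ['1.23', '1.24']
```

Each entry in the returned list corresponds to one full pass through the update steps (control plane, then nodes, autoscaler, and addons).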

One nice aspect of updating the version is that it does not require downtime.

Which addons to enable

EKS offers a number of addons, both from AWS itself and also from third-party vendors. Kubernetes is very configurable and uses plugins to interact with the platform it is running on. The AWS addons mostly implement such interfaces, typically for networking and storage.

The third-party addons are very limited as of the time of writing, but some do provide useful features, such as ones related to security and monitoring.

Enabling and managing addons can be done using the EKS console, the AWS command-line tool, or eksctl. Most likely, you will need to enable addons out of necessity (for example, if you want to use EBS volumes as persistent volumes) or perhaps because there is a third-party addon that provides value to your setup.


Logging

The control plane can produce quite a large amount of logs for various subsystems, such as the API server or the auditing subsystem. By default, none of these logs are enabled, so it’s up to you to enable the EKS logging you need. The control plane components for which you can enable logs include the following:

- API server: Enable this to collect logs from the Kubernetes API server.
- Authenticator: These logs are specific to EKS, which uses IAM to authenticate requests to the cluster; they record the authentication activities performed by IAM roles and users with respect to this cluster.
- Audit: This is the audit trail of what users and system accounts have done to the cluster over time.
- Controller manager: These are logs from the various Kubernetes control loops.
- Scheduler: Logs from the scheduler, which is the component that decides when and where to run pods.

You will typically enable these logs to debug issues, although the audit log is more for security and/or compliance purposes.
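As a rough illustration of enabling logs per component, the sketch below builds the kind of `logging` structure that the EKS cluster configuration API accepts. The helper name `cluster_logging` is hypothetical, and the log type identifiers are the ones the EKS API uses for the five components listed above; check them against the current API reference before relying on them.

```python
# Log type identifiers for the five control plane components listed above.
LOG_TYPES = {"api", "audit", "authenticator", "controllerManager", "scheduler"}


def cluster_logging(enabled_types):
    """Build a logging payload that enables some log types and disables the rest."""
    enabled = set(enabled_types)
    unknown = enabled - LOG_TYPES
    if unknown:
        raise ValueError(f"unknown log types: {sorted(unknown)}")
    entries = []
    if enabled:
        entries.append({"types": sorted(enabled), "enabled": True})
    disabled = LOG_TYPES - enabled
    if disabled:
        entries.append({"types": sorted(disabled), "enabled": False})
    return {"clusterLogging": entries}


if __name__ == "__main__":
    # Enable only the API server and audit logs.
    print(cluster_logging(["api", "audit"]))
```

With boto3 installed and credentials configured, a payload like this could be passed as the `logging` argument of the EKS client's `update_cluster_config` call; that call is not made here so the example stays runnable offline.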

The logs are sent to CloudWatch Logs and, unfortunately, you can’t change the destination. You can, however, copy those logs to a different destination by adding a subscription filter on the log group that triggers a Lambda function whenever log entries are added. The Lambda function can then forward the logs to a different location. If you don’t need real-time logs, you can simply export the logs to S3 on a regular basis.
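A forwarding Lambda function along these lines might look like the sketch below. It assumes the function is invoked by a CloudWatch Logs subscription, which delivers log events as base64-encoded, gzip-compressed JSON under `event["awslogs"]["data"]`. The `forward` function is a placeholder for whatever destination you actually use.

```python
import base64
import gzip
import json


def decode_subscription_event(event):
    """Unpack the payload CloudWatch Logs delivers to a subscribed Lambda function."""
    compressed = base64.b64decode(event["awslogs"]["data"])
    return json.loads(gzip.decompress(compressed))


def forward(record):
    """Placeholder destination: replace with a call to your own log store."""
    print(json.dumps(record))


def handler(event, context):
    """Lambda entry point: forward each log event and report how many were sent."""
    payload = decode_subscription_event(event)
    log_events = payload.get("logEvents", [])
    for log_event in log_events:
        forward({
            "logGroup": payload.get("logGroup"),
            "logStream": payload.get("logStream"),
            "timestamp": log_event.get("timestamp"),
            "message": log_event.get("message"),
        })
    return {"forwarded": len(log_events)}
```

Keeping the decoding step in its own function makes it easy to test locally with a hand-built event before deploying.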

Security

There are some security considerations that are in your control as well.

First, you will need to create a security group that will be used by the control plane instances. It’s worth knowing that if you use eksctl to create the cluster, it will do this for you.

You can turn on at-rest encryption of secrets, which is highly recommended, especially in a production cluster.


Finally, you need to decide on the level of visibility of the Kubernetes API, which can be one of the following:

- Public: You can access the Kubernetes API from the Internet.
- Private: You can access the Kubernetes API only from within the VPC, which would typically require you to use a VPN.
- Both: The Kubernetes API is accessible both from the Internet and the VPC.

The right choice depends on the level of security required for your project. Having the Kubernetes API accessible from the Internet makes it more exposed, but you can partly mitigate this by restricting the range of IP addresses that can access it.
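The three visibility options and the IP restriction map onto a handful of settings in the cluster's VPC configuration. The sketch below builds such a structure; `endpoint_access` is a hypothetical helper, the field names follow the EKS API's VPC configuration as far as I know it, and the CIDR block in the example is a documentation range, not a recommendation.

```python
import ipaddress


def endpoint_access(mode, public_cidrs=None):
    """Translate 'public', 'private', or 'both' into EKS endpoint settings."""
    if mode not in ("public", "private", "both"):
        raise ValueError("mode must be 'public', 'private', or 'both'")
    config = {
        "endpointPublicAccess": mode in ("public", "both"),
        "endpointPrivateAccess": mode in ("private", "both"),
    }
    if public_cidrs:
        if not config["endpointPublicAccess"]:
            raise ValueError("CIDR restrictions only apply to the public endpoint")
        # ip_network raises ValueError on malformed CIDR blocks.
        config["publicAccessCidrs"] = [
            str(ipaddress.ip_network(cidr)) for cidr in public_cidrs
        ]
    return config


if __name__ == "__main__":
    # Public and private access, with the public side limited to one range.
    print(endpoint_access("both", ["203.0.113.0/24"]))
```

Validating the CIDR blocks locally catches typos before they reach the API, where a mistake could lock you out of the public endpoint.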

Recommendations

The number one recommendation is to keep the Kubernetes version up to date in order to stay current with the latest security patches. In addition, you should not be tempted to fall into the trap of “if it works, don’t touch it”: If you do, later on, you will find yourself unable to do anything with your cluster because all the tools and third-party software require a more recent version of Kubernetes. Also, the longer you wait to update your Kubernetes version, the more painful it will be to do so.

Another recommendation high on the list is to avoid using IAM users to allow your pods to access AWS resources. Creating an IAM user with access keys and storing the keys as secrets in Kubernetes is easy, but it is not considered best practice. Instead, use IAM roles for service accounts (IRSA), which allows you to tie an IAM role to a Kubernetes service account. This is a much cleaner way to let your pods access AWS resources.
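To make the link between an IAM role and a service account more concrete, here is a sketch of the trust policy such a role typically carries. It assumes the cluster's OIDC provider is already registered in IAM; `irsa_trust_policy` is a hypothetical helper, and the account ID, provider, namespace, and service account name in the example are placeholders.

```python
import json


def irsa_trust_policy(account_id, oidc_provider, namespace, service_account):
    """Build a trust policy letting one Kubernetes service account assume a role.

    oidc_provider is the cluster's OIDC issuer without the https:// prefix.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {
                "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{oidc_provider}"
            },
            "Action": "sts:AssumeRoleWithWebIdentity",
            "Condition": {
                "StringEquals": {
                    f"{oidc_provider}:aud": "sts.amazonaws.com",
                    f"{oidc_provider}:sub":
                        f"system:serviceaccount:{namespace}:{service_account}",
                }
            },
        }],
    }


if __name__ == "__main__":
    policy = irsa_trust_policy(
        "111122223333",                                # placeholder account ID
        "oidc.eks.us-east-1.amazonaws.com/id/EXAMPLE",  # placeholder issuer
        "default",
        "my-app",
    )
    print(json.dumps(policy, indent=2))
```

The service account is then annotated with the role's ARN (the `eks.amazonaws.com/role-arn` annotation), so no long-lived access keys need to be stored in the cluster.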

Please note that after creating an EKS cluster, only the IAM role (or user) that created the cluster can access its Kubernetes API, and it has administrator access. If you lose access to this IAM user/role, you will lose access to the cluster entirely. It can be hard to find out afterwards which IAM user/role created the EKS cluster in the first place, so you should make a note of this information.

To avoid this risk (and also as a best practice), you should create Kubernetes users shortly after the cluster is created. You can manually add users by editing the aws-auth config map, which is a good enough way to add named users to the Kubernetes cluster. A more advanced method is to use OpenID Connect. This is an advanced topic that would require its own article, but in short, it would allow users in your organization to access the Kubernetes API, with permissions restricted by the Kubernetes administrators.
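For the aws-auth approach, each mapping is a small record that ties an IAM identity to a Kubernetes username and a list of groups. The sketch below builds one such record; `aws_auth_entry` is a hypothetical helper, and the ARN and group name in the example are placeholders. User entries belong in the config map's `mapUsers` list and role entries in `mapRoles`.

```python
def aws_auth_entry(arn, username, groups):
    """Build one aws-auth mapping for an IAM user or role ARN."""
    if ":user/" in arn:
        key = "userarn"   # goes into the mapUsers list
    elif ":role/" in arn:
        key = "rolearn"   # goes into the mapRoles list
    else:
        raise ValueError("expected an IAM user or role ARN")
    return {key: arn, "username": username, "groups": list(groups)}


if __name__ == "__main__":
    # Placeholder identity mapped to a hypothetical "developers" group,
    # which would still need RBAC bindings inside the cluster.
    print(aws_auth_entry(
        "arn:aws:iam::111122223333:user/alice", "alice", ["developers"]
    ))
```

The groups only take effect once Kubernetes RBAC roles are bound to them, which is how administrators restrict what each user can do.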

Finally, although this is not directly related to the EKS control plane, it is important that you correctly size CPU and memory resources for your pods. For each pod (or higher-level object that controls pods, such as a Deployment or a StatefulSet), you can specify CPU and memory requests (the minimum the pod is guaranteed) and limits (the maximum it may use). It is important that you set correct values for your pods in the context of your overall workload. If you set values too high, you will waste resources and pay too much for your workload. If you set values too low, your pods might be terminated (if the memory limit is exceeded) or throttled (if the CPU limit is reached).
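As a small illustration of requests and limits, the sketch below represents a container's `resources` section as a dictionary and checks that each request stays within its limit. The helper names and the values are hypothetical, and the parsers only handle the common `m` CPU suffix and the `Ki`, `Mi`, and `Gi` memory suffixes.

```python
def parse_cpu(quantity):
    """Convert a CPU quantity such as '500m' or '2' into cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


_MEMORY_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def parse_memory(quantity):
    """Convert a memory quantity such as '256Mi' into bytes (binary suffixes only)."""
    for suffix, factor in _MEMORY_UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def check_resources(resources):
    """Raise ValueError if a request exceeds its limit; return True otherwise."""
    requests, limits = resources["requests"], resources["limits"]
    if parse_cpu(requests["cpu"]) > parse_cpu(limits["cpu"]):
        raise ValueError("CPU request exceeds CPU limit")
    if parse_memory(requests["memory"]) > parse_memory(limits["memory"]):
        raise ValueError("memory request exceeds memory limit")
    return True


# Hypothetical values for illustration; tune them to your own workload.
resources = {
    "requests": {"cpu": "250m", "memory": "256Mi"},
    "limits": {"cpu": "500m", "memory": "512Mi"},
}

if __name__ == "__main__":
    print(check_resources(resources))
```

A check like this only catches inconsistent values; finding values that fit the workload still requires observing real usage.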

Finding good limits takes time and experimentation, as your workload is unique. The same app can require very different resources depending on how it is used, and that’s why it’s hard to anticipate the correct limits and you must adapt them to the way your workload runs. Fortunately, a tool like Densify automates this optimization process using machine learning technology and presents the recommendations as JSON files that tools like Terraform and Ansible can implement. This three-minute video shows how it works.

Conclusion

The EKS control plane is opinionated: AWS makes choices for you, and you have very little control over the internal structure of the control plane. Those choices should be acceptable for most projects and, generally speaking, make a lot of sense because AWS ensures that the control plane is highly available and can scale in and out depending on its load.

It is very important that you keep the Kubernetes version current. Letting the Kubernetes version become obsolete will increase the probability that you run into all sorts of problems, and it may even open you up to security issues.

Overall, EKS is a robust and well-tested solution that is used with great success by many organizations. If you intend to run a Kubernetes cluster on AWS, EKS is a logical choice.

Please keep in mind that because EKS will maintain high availability for all the components of the control plane, as well as scaling them in and out depending on its load, EKS will not be the cheapest solution around. If you just want to run some experiments or are on a budget, EKS might be too expensive for you.
