Guide to K8s Workloads


A workload is an application running in one or more Kubernetes (K8s) pods. A pod is a logical grouping of one or more containers running in a Kubernetes cluster. Pods are managed by controllers, which operate as control loops in the same way that a thermostat regulates a room’s temperature: a controller monitors the current state of a Kubernetes resource and makes the requests necessary to move it toward the desired state. Workload resources (to use the appropriate Kubernetes terminology) configure controllers that ensure the correct pods are running to match the desired state that you have defined for your application.

| Workload Resource | Common Use Cases |
|---|---|
| Deployment and ReplicaSet | ReplicaSets are managed via declarative statements in a Deployment. Deployments are used for stateless applications such as API gateways. |
| StatefulSet | As the name implies, StatefulSets are often used for stateful applications. StatefulSets can also be used for highly available applications and applications that need multiple pods and server leaders, such as a highly available RabbitMQ messaging service. |
| DaemonSet | DaemonSets are often used for log collection or node monitoring. For example, the Elasticsearch, Fluentd, and Kibana (EFK) stack can be used for log collection. |
| Job and CronJob | Jobs and CronJobs are used to run pods that only need to run once or at specific times, such as creating a database backup. |
| Custom Resource | Custom resources serve multiple purposes, such as adding top-level kubectl support for new resource types or letting Kubernetes libraries and CLIs create and update new resources. An example is Certificate Manager, which adds certificate resources that enable HTTPS and TLS support. |

Kubernetes Workload Deployment

A Deployment provisions a ReplicaSet, which in turn provisions pods according to the desired state. When a Deployment is changed or updated, a new ReplicaSet is provisioned and gradually replaces the previous ReplicaSet, so updates can occur with no downtime. Stateless applications, which do not save information about previous operations, are a common use for Deployments. “Stateless” means operations always start from scratch. For example, if a search operation starts but stops before it completes, it will have to start again from the beginning. Here is an example of a Deployment workload:

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: grafana
  name: grafana
spec:
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
    spec:
      securityContext:
        fsGroup: 472
        supplementalGroups:
          - 0
      containers:
        - name: grafana
          image: grafana/grafana:7.5.2
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 3000
              name: http-grafana
              protocol: TCP
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /robots.txt
              port: 3000
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 30
            successThreshold: 1
            timeoutSeconds: 2
          livenessProbe:
            failureThreshold: 3
            initialDelaySeconds: 30
            periodSeconds: 10
            successThreshold: 1
            tcpSocket:
              port: 3000
            timeoutSeconds: 1
          resources:
            requests:
              cpu: 250m
              memory: 750Mi
          volumeMounts:
            - mountPath: /var/lib/grafana
              name: grafana-pv
      volumes:
        - name: grafana-pv
          persistentVolumeClaim:
            claimName: grafana-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi

Deployment workloads commonly connect to databases that keep user and application data while the workloads themselves remain stateless. Hence, applications like Grafana are provisioned in K8s as Deployments.
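The zero-downtime updates described earlier depend on the Deployment's rolling update strategy. As a minimal sketch, the manifest below shows where the pace of a rollout could be tuned. The name `api-gateway`, the placeholder image, the replica count, and the surge values are illustrative assumptions and are not part of the Grafana example.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: api-gateway              # hypothetical name
spec:
  replicas: 3
  strategy:
    type: RollingUpdate          # the default strategy for Deployments
    rollingUpdate:
      maxSurge: 1                # at most one extra pod above the desired count during a rollout
      maxUnavailable: 0          # never drop below the desired number of available pods
  selector:
    matchLabels:
      app: api-gateway
  template:
    metadata:
      labels:
        app: api-gateway
    spec:
      containers:
        - name: api-gateway
          image: nginx:1.25      # placeholder image
          ports:
            - containerPort: 80
```

With these values, the new ReplicaSet is scaled up one pod at a time, and an old pod is removed only once its replacement is available.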

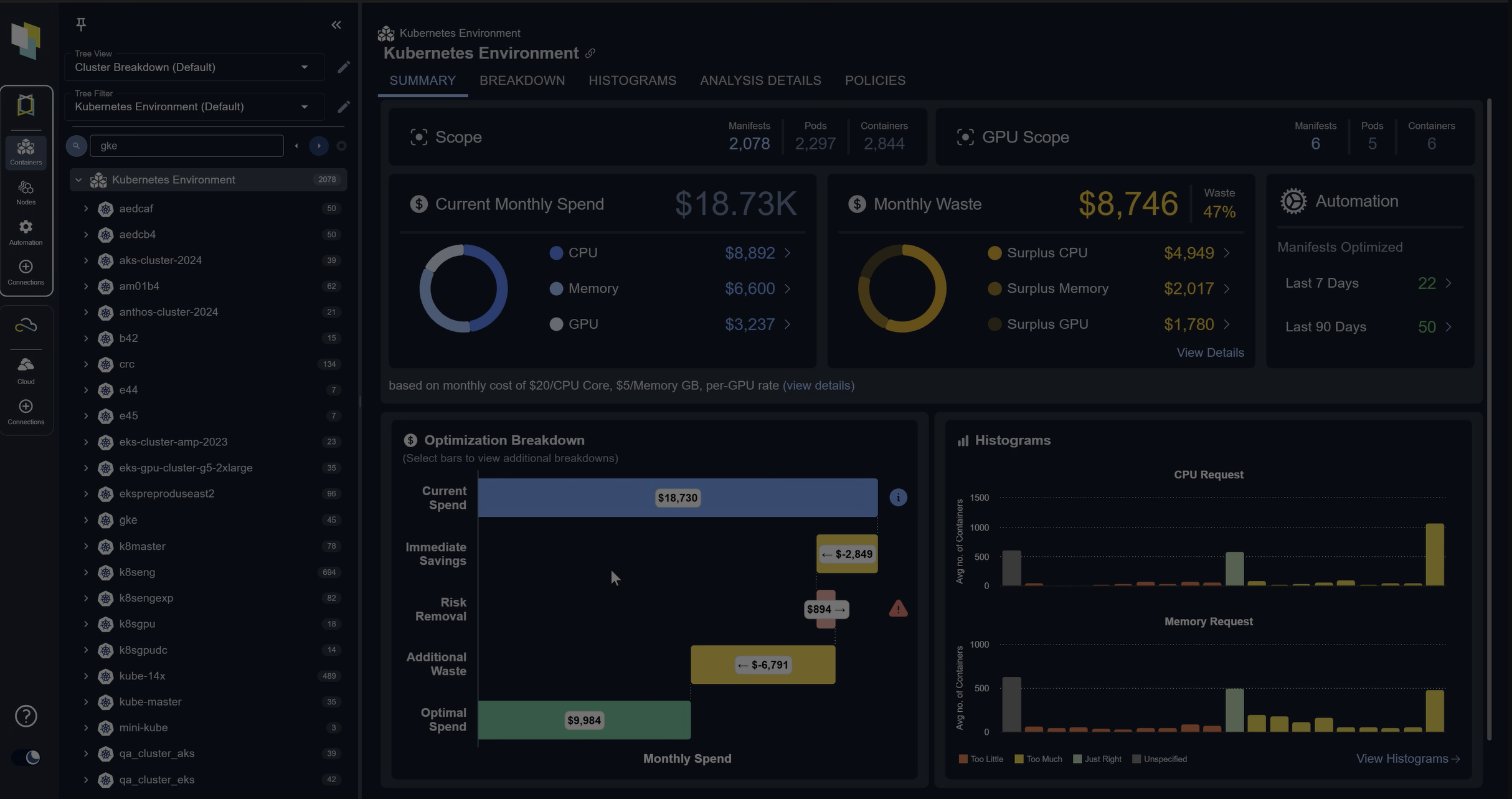

StatefulSet

StatefulSets run stateful applications. StatefulSets provision pods with unique identifiers and maintain an identity for each pod. The pods are created from the same specification but are not interchangeable, as identifiers persist across rescheduling. Volumes are matched with pod identifiers, so a pod is reattached to the same volume after it is restarted, rescheduled, or replaced following a failure.

StatefulSets deploy, delete, and scale their pods in an ordered manner, and each pod must start successfully before the next one is created. Deleting a StatefulSet does not delete its volumes, which protects the data. Deleting a StatefulSet also gives no guarantee about how its pods are terminated; to terminate them in order, scale the StatefulSet down to 0 before deleting it.

Here is an example of a StatefulSet workload resource:

---
apiVersion: v1
kind: Service
metadata:
  # Expose the management HTTP port on each node
  name: rabbitmq-management
  labels:
    app: rabbitmq
spec:
  ports:
    - port: 15672
      name: http
  selector:
    app: rabbitmq
  type: NodePort # Or LoadBalancer in production w/ proper security
---
apiVersion: v1
kind: Service
metadata:
  # The required headless service for StatefulSets
  name: rabbitmq
  labels:
    app: rabbitmq
spec:
  ports:
    - port: 5672
      name: amqp
    - port: 4369
      name: epmd
    - port: 25672
      name: rabbitmq-dist
  clusterIP: None
  selector:
    app: rabbitmq
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: rabbitmq
spec:
  serviceName: "rabbitmq"
  replicas: 3
  selector:
    matchLabels:
      app: rabbitmq
  template:
    metadata:
      labels:
        app: rabbitmq
    spec:
      containers:
        - name: rabbitmq
          image: rabbitmq:3.6.6-management-alpine
          lifecycle:
            postStart:
              exec:
                command:
                  - /bin/sh
                  - -c
                  # Add the headless service to the DNS search path, wait for the
                  # node to become healthy, then join the cluster led by rabbitmq-0.
                  - >
                    if [ -z "$(grep rabbitmq /etc/resolv.conf)" ]; then
                      sed "s/^search \([^ ]\+\)/search rabbitmq.\1 \1/" /etc/resolv.conf > /etc/resolv.conf.new;
                      cat /etc/resolv.conf.new > /etc/resolv.conf;
                      rm /etc/resolv.conf.new;
                    fi;
                    until rabbitmqctl node_health_check; do sleep 1; done;
                    if [[ "$HOSTNAME" != "rabbitmq-0" && -z "$(rabbitmqctl cluster_status | grep rabbitmq-0)" ]]; then
                      rabbitmqctl stop_app;
                      rabbitmqctl join_cluster rabbit@rabbitmq-0;
                      rabbitmqctl start_app;
                    fi;
                    rabbitmqctl set_policy ha-all "." '{"ha-mode":"exactly","ha-params":3,"ha-sync-mode":"automatic"}'
          env:
            - name: RABBITMQ_ERLANG_COOKIE
              valueFrom:
                secretKeyRef:
                  name: rabbitmq-config
                  key: erlang-cookie
          ports:
            - containerPort: 5672
              name: amqp
          volumeMounts:
            - name: rabbitmq
              mountPath: /var/lib/rabbitmq
  volumeClaimTemplates:
    - metadata:
        name: rabbitmq
        annotations:
          # Legacy annotation; newer clusters set spec.storageClassName instead
          volume.beta.kubernetes.io/storage-class: openebs-jiva-default
      spec:
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: 5G

Stateful applications store user information and actions. StatefulSets can also be used to form application clusters, a task that Deployments are poorly suited to because their pods have no stable identities. The unique identifiers of the pods are what allow cluster formation. There are multiple mechanisms capable of forming clusters, and detailing them is beyond the scope of this article. The benefits of cluster formation include synchronization of data across nodes, which increases data safety and the application’s resilience. Another advantage is that a cluster can self-heal if a single pod is deleted, provided that the application supports self-healing. RabbitMQ is an example of an application that can form a cluster and has these features when configured correctly.

RabbitMQ is a messaging service that streamlines communication between services within a Kubernetes cluster. RabbitMQ can run as a single pod, but doing that results in having a single point of failure. Hence, it is recommended that RabbitMQ runs as a cluster in production. StatefulSets enable RabbitMQ and other applications to be run as stable stateful clusters and increase the stability and resilience of applications.
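The ordered behavior of StatefulSets described earlier is the default, and it is controlled by a small number of fields in the spec. The trimmed manifest below is a sketch of where those fields live. The `partition` value is an illustrative assumption rather than a recommendation, and the pod template is reduced to the minimum needed to be valid.

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: rabbitmq
spec:
  serviceName: "rabbitmq"               # headless Service that gives each pod a stable DNS name
  replicas: 3
  podManagementPolicy: OrderedReady     # default: create rabbitmq-0, -1, -2 in order, delete in reverse
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      partition: 2                      # illustrative: only pods with ordinal >= 2 pick up template changes
  selector:
    matchLabels:
      app: rabbitmq
  template:
    metadata:
      labels:
        app: rabbitmq
    spec:
      containers:
        - name: rabbitmq
          image: rabbitmq:3.6.6-management-alpine
```

Setting `podManagementPolicy: Parallel` instead would start and stop all pods at once, which suits applications that do not depend on a leader pod being ready first.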

DaemonSet

A DaemonSet provisions a copy of a pod onto all (or some) nodes, depending on node taints and labels. When new nodes are added to the cluster, the pod is provisioned on them as well. Similarly, pods are garbage collected from nodes removed from the cluster.

Deleting a DaemonSet deletes all of the pods it created. DaemonSets are particularly useful for log and metrics collection across a cluster.

Below is an example of a DaemonSet workload:

apiVersion: v1
kind: Namespace
metadata:
  name: monitoring
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app.kubernetes.io/component: exporter
    app.kubernetes.io/name: node-exporter
  name: node-exporter
  namespace: monitoring
spec:
  selector:
    matchLabels:
      app.kubernetes.io/component: exporter
      app.kubernetes.io/name: node-exporter
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 2
  template:
    metadata:
      labels:
        app.kubernetes.io/component: exporter
        app.kubernetes.io/name: node-exporter
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/path: '/metrics'
        prometheus.io/port: "9100"
    spec:
      hostPID: true
      hostIPC: true
      hostNetwork: true
      enableServiceLinks: false
      containers:
        - name: node-exporter
          image: prom/node-exporter
          imagePullPolicy: IfNotPresent
          securityContext:
            privileged: true
          args:
            - '--path.sysfs=/host/sys'
            - '--path.rootfs=/root'
            - --collector.filesystem.ignored-mount-points=^/(dev|proc|sys|var/lib/docker/.+|var/lib/kubelet/pods/.+)($|/)
            - --collector.netclass.ignored-devices=^(veth.*)$
          ports:
            - containerPort: 9100
              protocol: TCP
          resources:
            limits:
              cpu: 100m
              memory: 100Mi
            requests:
              cpu: 50m
              memory: 50Mi
          volumeMounts:
            - name: sys
              mountPath: /host/sys
              mountPropagation: HostToContainer
            - name: root
              mountPath: /root
              mountPropagation: HostToContainer
      tolerations:
        - operator: Exists
          effect: NoSchedule
      volumes:
        - name: sys
          hostPath:
            path: /sys
        - name: root
          hostPath:
            path: /

The node exporter DaemonSet collects and exposes metrics such as CPU utilization, memory usage, and disk IOPS.
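To run a DaemonSet on only some nodes, as mentioned earlier, a node selector can be added to the pod template, and tolerations decide which tainted nodes are still eligible. The sketch below assumes a hypothetical node label `monitoring: enabled` and a hypothetical DaemonSet name; neither comes from the example above.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter-selected          # hypothetical name
  namespace: monitoring
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: node-exporter-selected
  template:
    metadata:
      labels:
        app.kubernetes.io/name: node-exporter-selected
    spec:
      nodeSelector:
        monitoring: "enabled"           # hypothetical label; only nodes carrying it get a pod
      tolerations:
        - key: node-role.kubernetes.io/control-plane
          operator: Exists
          effect: NoSchedule            # also allow scheduling onto tainted control-plane nodes
      containers:
        - name: node-exporter
          image: prom/node-exporter
          ports:
            - containerPort: 9100
```

Labeling or unlabeling a node is then enough to add or remove the pod on that node, without editing the DaemonSet itself.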

Job and CronJob

A Job creates one or more pods and runs them until completion. It retries failed pods until the required number of pods have terminated successfully, at which point the Job is complete. A CronJob, on the other hand, creates Jobs on a repeating schedule written in Cron format. An example of a CronJob workload resource is below:

# batch/v1 replaces batch/v1beta1, which was removed in Kubernetes 1.25
apiVersion: batch/v1
kind: CronJob
metadata:
  name: database-backup-job
  namespace: default
spec:
  schedule: "0 0 * * *"
  jobTemplate:
    spec:
      template:
        spec:
          restartPolicy: OnFailure
          containers:
            - name: db-backup
              image: "ghcr.io/omegion/db-backup:v0.9.0"
              imagePullPolicy: IfNotPresent
              # Kubernetes expands $(VAR) references in args; a bare $VAR is
              # passed through literally because no shell is involved.
              args:
                - s3
                - export
                - --host=$(DB_HOST)
                - --port=$(DB_PORT)
                - --databases=$(DATABASE_NAMES)
                - --username=$(DB_USER)
                - --password=$(DB_PASS)
                - --bucket=$(BUCKET_NAME)
              # Fill in the values below; credentials are better sourced from a Secret.
              env:
                - name: DB_HOST
                  value: ""
                - name: DB_PORT
                  value: ""
                - name: DB_USER
                  value: ""
                - name: DB_PASS
                  value: ""
                - name: DATABASE_NAMES
                  value: ""
                - name: BUCKET_NAME
                  value: ""
                - name: AWS_ACCESS_KEY_ID
                  value: ""
                - name: AWS_SECRET_ACCESS_KEY
                  value: ""
                - name: AWS_REGION
                  value: ""

This CronJob backs up a database every day at midnight.
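The example above covers CronJobs only. For comparison, here is a minimal sketch of a standalone Job that shows the completion and retry behavior described earlier. The Job name, the image, the command, and the numeric values are illustrative assumptions.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: one-off-task              # hypothetical name
spec:
  completions: 3                  # the Job is complete once three pods have succeeded
  parallelism: 1                  # run one pod at a time
  backoffLimit: 4                 # mark the Job as failed after four retries
  template:
    spec:
      restartPolicy: Never        # failed pods are replaced by new pods instead of restarted in place
      containers:
        - name: task
          image: busybox:1.36     # placeholder image
          command: ["sh", "-c", "echo processing one work item && sleep 5"]
```

A CronJob wraps the same Job spec in its `jobTemplate` field and adds a `schedule`.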


Custom Resources

Custom Resources extend the Kubernetes API. The Kubernetes API stores API objects, such as DaemonSets. A custom resource adds object types to the Kubernetes API that are not available in a default installation. Custom Resources can be added to and deleted from a running cluster.

Once installed, Custom Resources are accessible via kubectl. For example, after installing Certificate Manager, provisioned certificates can be viewed via ‘kubectl get certificates’. Certificate Manager, together with its Custom Resources, can be installed with the following command:

kubectl apply -f https://github.com/jetstack/cert-manager/releases/download/v1.4.0/cert-manager.yaml

Users can also pair custom resources with custom controllers. Custom resource definitions describe the new resource types, and combining them with custom controllers creates a declarative API similar to existing K8s workloads such as Deployments and DaemonSets. This combination enables users to specify their desired state, while the control loop continuously assesses the actual state and provisions resources as required to maintain the desired state. Custom controllers can also be added or deleted independently of a cluster’s lifecycle. Custom controllers tend to be most effective with custom resources but work with any type of resource.
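As an illustration of what a custom resource looks like once its definition is installed, here is a sketch of a Certificate object. It assumes that Certificate Manager is already running in the cluster and that a ClusterIssuer exists. The issuer name `letsencrypt-staging`, the domain, and the resource names are hypothetical.

```yaml
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: example-com-tls             # hypothetical name
  namespace: default
spec:
  secretName: example-com-tls       # Secret in which the issued certificate and key are stored
  dnsNames:
    - example.com                   # placeholder domain
  issuerRef:
    name: letsencrypt-staging       # hypothetical issuer; must be created separately
    kind: ClusterIssuer
```

The custom controller watches Certificate objects like this one and works to keep the referenced Secret populated with a valid certificate.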

Kubernetes Workloads Best Practices

Here are some of the most important K8s workloads best practices:

1. Use the Latest K8s Version

Run the latest stable Kubernetes version where possible, because new releases tend to include security patches and new features.

2. Maintain & Use Smaller Container Images

Using Alpine Linux images where possible is recommended because the Alpine Linux distribution tends to produce container images that are up to 80% smaller than those based on mainstream Linux distributions. If you need to, you can start from a small Alpine image and add packages to suit your application requirements.

3. Always Set Resource Requests and Limits

You can set resource requests and limits as follows:

containers:
  - name: elasticsearch
    image: elasticsearch
    resources:
      limits:
        cpu: 4000m
        memory: 10000Mi
      requests:
        cpu: 2500m
        memory: 1000Mi

This is advantageous because requests tell the scheduler how much CPU and memory to reserve for a container, while limits cap what the container can use. A container that exceeds its memory limit is terminated and restarted, and CPU usage above the limit is throttled, which prevents a domino effect across containers when node resources are depleted. Kubernetes offers a simple mechanism to allocate requests and limits dynamically using the Vertical Pod Autoscaler (VPA); however, the recommended practice is to use machine learning and more sophisticated algorithms to measure container resource usage and avoid allocation mistakes that can lead to outages or financial waste.
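For reference, a VPA object for the workload above might look like the following sketch. It assumes the VPA components are installed in the cluster (they are not part of a default Kubernetes installation) and that the container belongs to a StatefulSet named `elasticsearch`; both are assumptions made for illustration.

```yaml
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: elasticsearch-vpa           # hypothetical name
spec:
  targetRef:
    apiVersion: apps/v1
    kind: StatefulSet               # assumed workload type
    name: elasticsearch
  updatePolicy:
    updateMode: "Off"               # only publish recommendations; do not evict pods
  resourcePolicy:
    containerPolicies:
      - containerName: elasticsearch
        minAllowed:
          cpu: 500m
          memory: 1000Mi
        maxAllowed:
          cpu: 4000m
          memory: 10000Mi
```

Starting in recommendation-only mode makes it possible to compare the suggested values against the manually chosen requests before letting anything change automatically.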

4. readinessProbe & livenessProbe

Use readinessProbe and livenessProbe. A readiness probe determines whether a container is ready to start accepting traffic, and a liveness probe determines whether a running container is still healthy; a container that fails its liveness probe is restarted.

5. Role-Based Access Controls (RBAC)

Use Role-Based Access Controls (RBAC) to determine access policies. RBAC defines which actions a user or service account can perform on which resources within the Kubernetes cluster.
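As a minimal sketch of an RBAC policy, the manifests below grant read-only access to pods in one namespace. The Role name, the binding name, and the service account `monitoring-agent` are hypothetical.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-reader                  # hypothetical name
  namespace: default
rules:
  - apiGroups: [""]                 # "" is the core API group, which contains pods
    resources: ["pods"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods                   # hypothetical name
  namespace: default
subjects:
  - kind: ServiceAccount
    name: monitoring-agent          # hypothetical service account
    namespace: default
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: pod-reader
```

A Role is scoped to a single namespace; a ClusterRole and ClusterRoleBinding would be used to grant the same permissions across the whole cluster.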

Conclusion

Kubernetes workloads are the backbone of K8s. For example, the way Deployments roll out updates enables zero-downtime releases, which increases application availability. DaemonSets, among other use cases, allow effective monitoring of every node in a cluster. Because workloads are such a fundamental aspect of K8s, understanding them is essential to learning how to use Kubernetes in a production environment.

FAQs

What is a Kubernetes Workload?

A Kubernetes Workload is an application running on one or more pods managed by controllers. Workloads define the desired state of applications, and controllers ensure the correct pods are running to maintain that state.

What are the main types of Kubernetes Workload resources?

The key Kubernetes Workload resources include Deployments, StatefulSets, DaemonSets, Jobs, CronJobs, and Custom Resources. Each serves different use cases such as stateless apps, stateful clusters, monitoring, scheduled jobs, and custom APIs.

When should I use a Deployment vs. a StatefulSet in Kubernetes Workload management?

Use a Deployment for stateless applications like APIs or front-end services. Use a StatefulSet for stateful applications that require persistent identity and storage, such as databases or messaging systems like RabbitMQ.

What role do DaemonSets play in Kubernetes Workloads?

DaemonSets ensure that a copy of a pod runs on every node (or specific nodes) in a cluster. They’re commonly used for monitoring, log collection, or node-level services such as Prometheus exporters.

What are best practices for Kubernetes Workload configuration?

Best practices include using small container images, setting resource requests and limits, applying readiness and liveness probes, and enforcing RBAC policies. These practices improve reliability, security, and efficiency.
