Kubernetes HPA

Today, application deployments often use multiple container instances in parallel. Kubernetes (K8s) is the most popular platform for orchestrating and managing these container clusters at scale.

One of the main advantages of using Kubernetes as a container orchestrator is the dynamic scaling of container pods. To enable dynamic scaling, Kubernetes supports three primary forms of autoscaling:

- Horizontal Pod Autoscaling (HPA): a Kubernetes functionality to scale out (increase) or scale in (decrease) the number of pod replicas automatically based on defined metrics.

- Vertical Pod Autoscaling (VPA): a Kubernetes functionality to right-size the deployment pods and avoid resource usage problems on the cluster. VPA is more related to capacity planning than other forms of K8s autoscaling.

- Cluster Autoscaling: a type of autoscaling commonly supported on cloud provider versions of Kubernetes. Cluster Autoscaling can dynamically add and remove worker nodes when a pod is pending scheduling or if the cluster needs to shrink to fit the current number of pods.

In this article, we’ll take an in-depth look at the first of these three, HPA. Specifically, we’ll explore what horizontal pod autoscaling is and how it works, and then walk through an example of using HPA to autoscale application pods on a Kubernetes cluster.

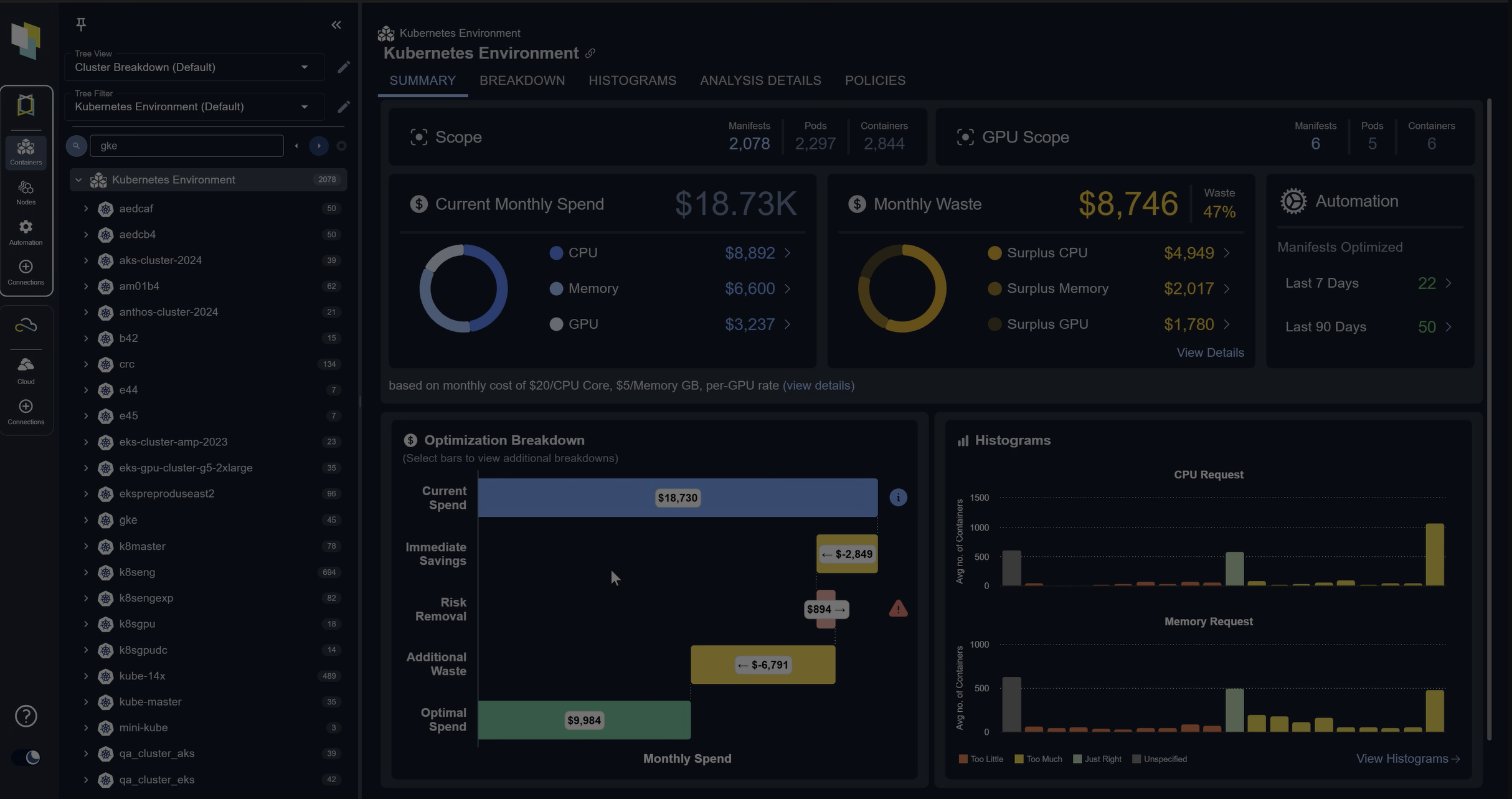

We cover the limitations of Kubernetes autoscaling in the last section of this article. For now, it’s worth noting that Kubernetes autoscaling can still result in resource waste. One reason is that users often request (or reserve) more CPU and memory for individual containers than they use. A second reason is that Kubernetes autoscaling does not account for disk I/O, network, or storage usage, which can result in poor allocation. A third reason is that accurate resource optimization requires advanced data aggregation methods and machine learning technology, which are provided by commercial software and are not yet available in open source form.

Horizontal Pod Autoscaler (HPA) Overview

In Kubernetes, you can run multiple replicas of an application pod through a ReplicaSet (the successor to the ReplicationController) by setting the replica count in the Deployment object. A fixed, manually chosen replica count might not match the application’s workload demand over time. To automate this process, Kubernetes provides a resource called the Horizontal Pod Autoscaler (HPA).

HPA can increase or decrease the number of pod replicas based on metrics such as pod CPU utilization, pod memory utilization, or custom metrics such as API calls. In short, HPA provides an automated way to add and remove pods at runtime to meet demand. Note that HPA works only for pods that are stateless or otherwise support running as multiple replicas. Workloads that can’t run as multiple instances/pods cannot use HPA.
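As a rough illustration of scaling on more than one metric, the sketch below prints an HPA manifest with both a CPU and a memory utilization target. It assumes a cluster that serves the autoscaling/v2 API (the walkthrough later in this article uses autoscaling/v1, which only exposes a CPU target). The helper name `resource_hpa_manifest`, the Deployment name `web`, and the target values are assumptions for illustration only.

```shell
#!/bin/sh
# Print an autoscaling/v2 HPA manifest with CPU and memory utilization targets.
# Usage: resource_hpa_manifest DEPLOYMENT CPU_TARGET_PERCENT MEMORY_TARGET_PERCENT
resource_hpa_manifest() {
  deployment=$1
  cpu_target=$2
  memory_target=$3
  cat <<EOF
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: ${deployment}
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: ${deployment}
  minReplicas: 1
  maxReplicas: 5
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: ${cpu_target}
  - type: Resource
    resource:
      name: memory
      target:
        type: Utilization
        averageUtilization: ${memory_target}
EOF
}

# Hypothetical Deployment "web": target 50% CPU and 70% memory utilization.
resource_hpa_manifest web 50 70
```

When several metrics are listed, the controller evaluates each one and uses the largest resulting replica count. Piping the output to `kubectl apply -f -` would create the object.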

How HPA Works

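Every Kubernetes installation supports the HPA resource and its associated controller by default. To scale on resource metrics such as CPU, however, the controller needs a metrics source, typically the Metrics Server add-on, which not every cluster ships with.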

The HPA control loop periodically (every 15 seconds by default) checks the configured metric, compares it with the target value for that metric, and then decides whether to increase or decrease the number of replica pods to reach the target value.

The diagram shows that the HPA resource works with the deployment resource and updates it based on the target metric value. The pod controller (Deployment) will then either increase or decrease the number of replica pods running.
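The comparison described above can be sketched with the replica formula given in the Kubernetes documentation: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The helper below, `desired_replicas`, is a hypothetical name used for illustration. It works on whole-number percentages, clamps the result to the min/max bounds, and deliberately leaves out details of the real controller such as the tolerance band and the handling of pods with missing metrics.

```shell
#!/bin/sh
# Sketch of the HPA replica calculation (illustrative helper, not part of kubectl).
# Usage: desired_replicas CURRENT_REPLICAS CURRENT_METRIC TARGET_METRIC MIN MAX
desired_replicas() {
  current_replicas=$1
  current_metric=$2
  target_metric=$3
  min_replicas=$4
  max_replicas=$5

  # Integer ceiling of current_replicas * current_metric / target_metric.
  desired=$(( (current_replicas * current_metric + target_metric - 1) / target_metric ))

  # Clamp the result to the configured bounds.
  if [ "$desired" -lt "$min_replicas" ]; then desired=$min_replicas; fi
  if [ "$desired" -gt "$max_replicas" ]; then desired=$max_replicas; fi

  echo "$desired"
}

# One pod at 120% average CPU utilization against a 50% target, bounds 1..5:
# ceil(1 * 120 / 50) = ceil(2.4) = 3 replicas.
desired_replicas 1 120 50 1 5
```

Because the result is rounded up, the controller errs toward slightly more capacity than the ratio alone would suggest.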

Without a contingency, one problem that can occur in these scenarios is thrashing. Thrashing is a situation in which the HPA performs subsequent autoscaling actions before the workload finishes responding to prior autoscaling actions. The HPA control loop avoids thrashing by choosing the largest pod count recommendation in the last five minutes.
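The rule described above amounts to taking the maximum over the recent recommendations. A minimal sketch, assuming the recommendations that fall inside the five-minute window are passed as arguments; `stabilized_replicas` is a hypothetical helper name, not a Kubernetes command.

```shell
#!/bin/sh
# Pick the largest replica recommendation seen inside the stabilization window.
# Arguments: one recommendation per control-loop run within the window.
stabilized_replicas() {
  largest=0
  for recommendation in "$@"; do
    if [ "$recommendation" -gt "$largest" ]; then
      largest=$recommendation
    fi
  done
  echo "$largest"
}

# Load dips briefly: the raw recommendations fall to 2 and climb back to 4,
# but the result stays at 5 until the older peak leaves the window.
stabilized_replicas 5 4 2 3 4
```

The effect is that scale-in happens only after every recommendation in the window has dropped, which keeps a short dip in load from removing pods that are needed again moments later.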

How to Use HPA

This section walks through an example that configures HPA to autoscale application pods based on target CPU utilization. There are two ways to create an HPA resource:

- The kubectl autoscale command

- The HPA YAML resource file

The following steps create a Kubernetes deployment and an HPA object that autoscales the pods of that deployment based on CPU load. Each step is shown with its command and output.

Create a namespace for HPA testing

kubectl create ns hpa-test

namespace/hpa-test created

Create a deployment for HPA testing

cat example-app.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: php-apache
  namespace: hpa-test
spec:
  selector:
    matchLabels:
      run: php-apache
  replicas: 1
  template:
    metadata:
      labels:
        run: php-apache
    spec:
      containers:
      - name: php-apache
        # k8s.gcr.io is frozen; registry.k8s.io hosts the same image
        image: registry.k8s.io/hpa-example
        ports:
        - containerPort: 80
        resources:
          limits:
            cpu: 500m
          # HPA measures CPU utilization as a percentage of this request
          requests:
            cpu: 200m
---
apiVersion: v1
kind: Service
metadata:
  name: php-apache
  namespace: hpa-test
  labels:
    run: php-apache
spec:
  ports:
  - port: 80
  selector:
    run: php-apache

kubectl create -f example-app.yaml

deployment.apps/php-apache created
service/php-apache created

Make sure the deployment is created and the pod is running

kubectl get deploy -n hpa-test

NAME         READY   UP-TO-DATE   AVAILABLE   AGE
php-apache   1/1     1            1           22s

After the deployment is up and running, create the HPA using the kubectl autoscale command. This HPA keeps between 1 and 5 replica pods of the deployment, targeting an average CPU utilization of 50%

kubectl -n hpa-test autoscale deployment php-apache --cpu-percent=50 --min=1 --max=5

horizontalpodautoscaler.autoscaling/php-apache autoscaled

The declarative form of the same command is the following Kubernetes resource

apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: php-apache
  namespace: hpa-test
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: php-apache
  minReplicas: 1
  maxReplicas: 5
  targetCPUUtilizationPercentage: 50

Examine the current state of HPA

kubectl -n hpa-test get hpa

NAME         REFERENCE               TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
php-apache   Deployment/php-apache   0%/50%    1         5         1          17s

Currently there is no load on the running application, so the current and desired pod counts both equal the initial replica count of 1

kubectl -n hpa-test get hpa php-apache -o yaml

apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: php-apache
  namespace: hpa-test
  resourceVersion: "402396524"
  selfLink: /apis/autoscaling/v1/namespaces/hpa-test/horizontalpodautoscalers/php-apache
  uid: 6040eea9-0c2b-47de-9725-cfb78f17fe32
spec:
  maxReplicas: 5
  minReplicas: 1
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: php-apache
  targetCPUUtilizationPercentage: 50
status:
  currentCPUUtilizationPercentage: 0
  currentReplicas: 1
  desiredReplicas: 1

Now run the load test and see the HPA status again

kubectl -n hpa-test run -i --tty load-generator --rm --image=busybox --restart=Never -- /bin/sh -c "while sleep 0.01; do wget -q -O- http://php-apache; done"

If you don’t see a command prompt, try pressing enter.
OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!

kubectl -n hpa-test get hpa

NAME         REFERENCE               TARGETS    MINPODS   MAXPODS   REPLICAS   AGE
php-apache   Deployment/php-apache   211%/50%   1         5         4          10m

Stop the load by pressing CTRL-C, wait a few minutes, and then check the HPA status again. The output shows the deployment scaled back in to one replica

kubectl -n hpa-test get hpa

NAME         REFERENCE               TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
php-apache   Deployment/php-apache   0%/50%    1         5         1          20h

kubectl -n hpa-test get hpa php-apache -o yaml

apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: php-apache
  namespace: hpa-test
  resourceVersion: "402402364"
  selfLink: /apis/autoscaling/v1/namespaces/hpa-test/horizontalpodautoscalers/php-apache
  uid: 6040eea9-0c2b-47de-9725-cfb78f17fe32
spec:
  maxReplicas: 5
  minReplicas: 1
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: php-apache
  targetCPUUtilizationPercentage: 50
status:
  currentCPUUtilizationPercentage: 0
  currentReplicas: 1
  desiredReplicas: 1
  lastScaleTime: "2021-07-04T08:22:54Z"

Clean up the resources

kubectl delete ns hpa-test --cascade

namespace "hpa-test" deleted

Kubernetes HPA Best Practices

- To take advantage of Kubernetes features like HPA, you need to develop the application with horizontal scaling in mind. In practice, this usually means a microservice-style architecture in which the application natively supports running as parallel pods.

- Attach the HPA resource to a Deployment object rather than directly to a ReplicaSet or ReplicationController.

- Use the declarative form to create HPA resources so that they can be version-controlled. This approach helps better track configuration changes over time.

- Make sure to define resource requests for pods when using HPA. HPA calculates utilization as a percentage of the requested amount, so without requests it cannot evaluate a CPU or memory utilization target.
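The last practice is easier to see with the arithmetic written out. For a utilization target, the controller expresses each pod's usage as a percentage of its request and then averages across pods. The sketch below shows the per-pod step only, using millicore values; `cpu_utilization_percent` is a hypothetical helper name, and the 200m request matches the example deployment earlier in this article.

```shell
#!/bin/sh
# Express CPU usage as a percentage of the CPU request (per-pod step only).
# Usage: cpu_utilization_percent USAGE_MILLICORES REQUEST_MILLICORES
cpu_utilization_percent() {
  usage_millicores=$1
  request_millicores=$2

  # Without a request there is no baseline, so the percentage is undefined.
  if [ "$request_millicores" -le 0 ]; then
    echo "no CPU request set: utilization is undefined" >&2
    return 1
  fi

  # Integer percentage, rounded down.
  echo $(( usage_millicores * 100 / request_millicores ))
}

cpu_utilization_percent 100 200   # 100m used of a 200m request: on the 50% target
cpu_utilization_percent 422 200   # 422m used of a 200m request: well above target
```

A reading above 100% is possible because usage can exceed the request, up to the container's CPU limit.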

Kubernetes HPA Limitations

- HPA shouldn’t be used together with Vertical Pod Autoscaler (VPA) on the same CPU or memory metrics. VPA can only scale based on CPU and memory values, so when VPA is enabled, HPA must use one or more custom metrics to avoid a scaling conflict with VPA. Using custom metrics requires a custom metrics adapter, which cloud providers typically offer for their managed Kubernetes services.

- HPA works only for applications that support running multiple instances in parallel. This covers stateless applications as well as StatefulSets that rely on replica pods. For applications that can’t be run as multiple pods, HPA cannot be used.

- HPA (and VPA) don’t consider IOPS, network, and storage in their calculations, exposing applications to the risk of slowdowns and outages.

- HPA still leaves the administrators with the burden of identifying waste in the Kubernetes cluster created by the reserved but unused requested resources at the container level. Detecting container usage inefficiency is not addressed by Kubernetes and requires third-party tooling powered by machine learning.
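To illustrate the first limitation in this list: when VPA manages CPU and memory, an HPA can target a per-pod custom metric instead. The sketch below prints an autoscaling/v2 manifest that uses the Pods metric type. The metric name `http_requests_per_second`, the target of 100, and the helper name `custom_metric_hpa_manifest` are assumptions for illustration, and the metric is only available if a custom metrics adapter exposes it on the cluster.

```shell
#!/bin/sh
# Print an HPA manifest that scales on a per-pod custom metric instead of CPU.
# Usage: custom_metric_hpa_manifest DEPLOYMENT NAMESPACE
custom_metric_hpa_manifest() {
  deployment=$1
  namespace=$2
  cat <<EOF
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: ${deployment}
  namespace: ${namespace}
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: ${deployment}
  minReplicas: 1
  maxReplicas: 5
  metrics:
  - type: Pods
    pods:
      metric:
        name: http_requests_per_second   # hypothetical metric served by an adapter
      target:
        type: AverageValue
        averageValue: "100"
EOF
}

# Manifest for the example deployment used in the walkthrough.
custom_metric_hpa_manifest php-apache hpa-test
```

Piping the output to `kubectl apply -f -` would create the object on a cluster that serves the autoscaling/v2 API.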

FAQs

What is Kubernetes HPA?

Kubernetes HPA (Horizontal Pod Autoscaler) automatically adjusts the number of pod replicas in a deployment based on metrics like CPU, memory, or custom application metrics. It helps applications scale dynamically to meet demand.

How does Kubernetes HPA work?

The HPA controller monitors defined metrics and compares them to target values. If utilization exceeds or falls below thresholds, it increases or decreases pod replicas accordingly, ensuring resources align with workload needs.

What workloads are best suited for Kubernetes HPA?

Kubernetes HPA works best with stateless applications or services designed for horizontal scaling, such as microservices and containerized web applications. Stateful applications that can’t run in parallel aren’t good candidates.

What are the limitations of Kubernetes HPA?

Kubernetes HPA doesn’t account for storage I/O, networking, or other non-CPU/memory metrics by default. It also can’t be used with Vertical Pod Autoscaler on the same CPU/memory metrics, and it doesn’t prevent overprovisioned container requests.

What are best practices for using Kubernetes HPA?

Best practices include defining pod resource requests, applying HPA to Deployments instead of ReplicaSets, using declarative YAML for version control, and designing applications with microservice architecture to support scaling.
