In our last blog post in this series, we discussed how to overcome the “bump-up loop,” in which you continually increase CPU allocations for public cloud instances even when the extra capacity isn’t needed. In this blog post, we’ll look at another cloud resource management challenge: memory.

Sizing memory appropriately is important because memory is a major driver of cost and, in our experience, the most commonly constrained resource in virtual and cloud environments. Optimizing memory allocations often yields significant cost savings, whether your application runs in the cloud or on-premises.

As with CPU capacity, sizing memory can lead you down the slippery slope of the bump-up loop. One reason is that people often focus on the wrong statistics, because the data provided by cloud providers, and the limited analysis tools many people rely on, can be misleading. Let’s look at why.

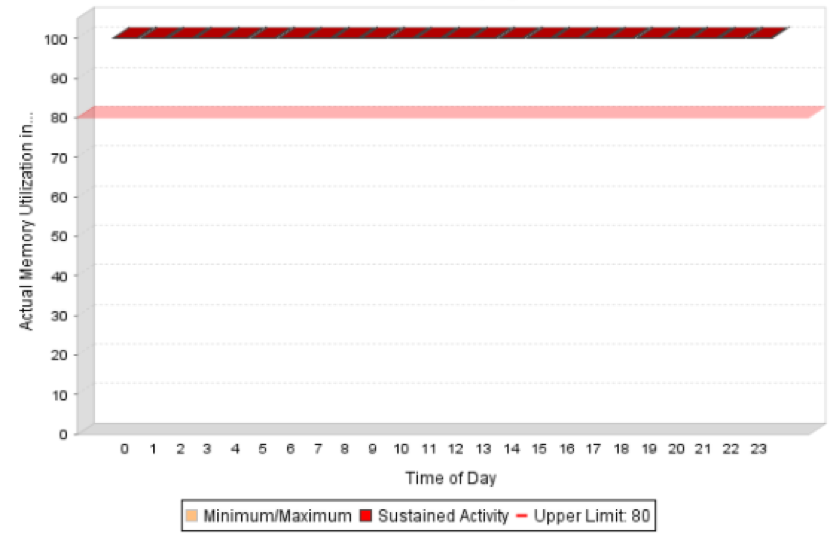

Take the example of a Linux application running on a physical server to which you allocate 16GB of memory. The application itself might only be using 5GB, but Linux will use the rest of the available memory for I/O “buffer caches” to speed things up. So when you go to measure usage, it will appear that your application is consuming 100% of available memory all the time (see figure below).
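To see this effect on a Linux system, you can compare a naive “used” figure with one that excludes reclaimable cache. The Python sketch below parses text in the `/proc/meminfo` format. The field names are the kernel’s, but the sample values are hypothetical, chosen to mirror the 16GB/5GB example above. It assumes a kernel recent enough to report `MemAvailable` (3.14 or later), which is the kernel’s own estimate of how much memory can be handed to applications without swapping.

```python
# Sample values are hypothetical: a 16 GiB server whose application uses ~5 GiB.
SAMPLE_MEMINFO = """\
MemTotal:       16777216 kB
MemFree:          163840 kB
MemAvailable:   11534336 kB
Buffers:          524288 kB
Cached:         10485760 kB
"""


def parse_meminfo(text: str) -> dict:
    """Parse /proc/meminfo-style text into a {field: value_in_kB} dict."""
    fields = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, rest = line.split(":", 1)
        parts = rest.split()
        if parts:
            fields[key.strip()] = int(parts[0])  # first token is the numeric value
    return fields


def memory_breakdown(fields: dict) -> dict:
    """Compare a naive 'used' percentage with one that excludes reclaimable cache."""
    total = fields["MemTotal"]
    naive_used = total - fields["MemFree"]  # counts cache as "used"
    cache = fields.get("Buffers", 0) + fields.get("Cached", 0)
    in_use = total - fields["MemAvailable"]  # excludes memory the kernel can reclaim
    return {
        "naive_used_pct": 100 * naive_used / total,
        "cache_pct": 100 * cache / total,
        "in_use_excluding_cache_pct": 100 * in_use / total,
    }


if __name__ == "__main__":
    for name, pct in memory_breakdown(parse_meminfo(SAMPLE_MEMINFO)).items():
        print(f"{name}: {pct:.1f}%")
```

With these sample numbers, the naive figure reads about 99% while memory in use excluding cache is about 31% (5GB). On a live system you could pass the contents of `/proc/meminfo` to `parse_meminfo` instead. `MemAvailable` is a better basis than simply subtracting `Buffers` and `Cached`, because part of `Cached` (shared memory, for example) cannot be reclaimed.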


What this simplified view doesn’t show is whether there is any actual pressure on memory. The operating system is consuming all the available memory, but from the outside you can’t tell how much of it is cache the OS would give up on demand. So you allocate more memory, the OS fills it with cache again, and the costly cycle continues.
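The loop is easy to reproduce in a toy model. The sketch below assumes, purely for illustration, that the page cache grows to fill whatever memory the application does not use, and compares a sizing rule driven by the naive usage figure with one driven by the application’s working set. The 90% threshold, the 4GB step, and the function names are hypothetical.

```python
def naive_used_pct(allocated_gb: float, working_set_gb: float) -> float:
    """Toy model (assumption): the page cache fills all memory the app doesn't use."""
    cache_gb = max(allocated_gb - working_set_gb, 0.0)
    used_gb = min(working_set_gb + cache_gb, allocated_gb)
    return 100.0 * used_gb / allocated_gb


def active_pct(allocated_gb: float, working_set_gb: float) -> float:
    """Share of the allocation occupied by the application's working set."""
    return 100.0 * working_set_gb / allocated_gb


def simulate(metric, allocated_gb=16.0, working_set_gb=5.0,
             threshold_pct=90.0, step_gb=4.0, rounds=5):
    """Apply a 'bump up when the metric exceeds the threshold' rule for several rounds."""
    history = [allocated_gb]
    for _ in range(rounds):
        if metric(allocated_gb, working_set_gb) > threshold_pct:
            allocated_gb += step_gb  # add memory, as in the bump-up loop
        history.append(allocated_gb)
    return history


if __name__ == "__main__":
    print("naive rule: ", simulate(naive_used_pct))
    print("active rule:", simulate(active_pct))
```

Under the naive rule the allocation grows every round (16, 20, 24, 28, 32, 36GB) even though the 5GB working set never changes; the working-set rule leaves it at 16GB.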

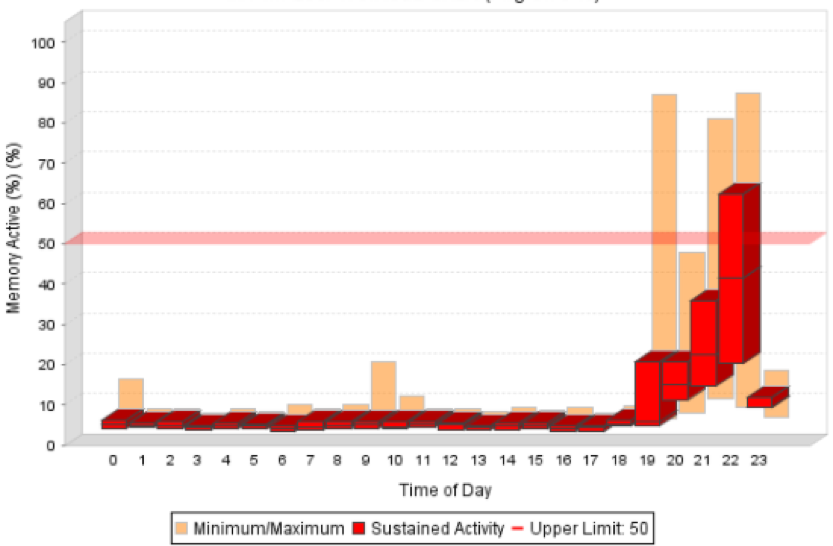

Because of this, it’s not enough just to look at how much memory being used; you need to analyze how it is being used. This means looking at whether the memory is being actively used by the systems, which is often referred to as active memory and resident set size. This is the actual working set being used by the application or operating system, and this is where we want to look to see if there is any pressure or waste. Even if memory usage is sitting at 100%, the active memory use might look something like this:

Having this kind of visibility is critical for making decisions about memory sizing that meet your application requirements in the most cost-efficient way.
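One way to get part of this visibility on Linux is to sum the `VmRSS` field that the kernel reports in each `/proc/<pid>/status` file. The sketch below assumes a Linux `/proc` filesystem, and the helper names are hypothetical. Treat the total as an upper bound: RSS counts shared pages (such as shared libraries) once for every process that maps them, so the proportional set size (`Pss`) in `/proc/<pid>/smaps_rollup` is a more precise alternative.

```python
import os
import re

# Matches the resident-set line in /proc/<pid>/status, e.g. "VmRSS:    5120 kB".
_VMRSS = re.compile(r"^VmRSS:\s+(\d+)\s+kB", re.MULTILINE)


def rss_kib(status_text: str) -> int:
    """Return VmRSS in kB from /proc/<pid>/status text, or 0 if it is absent."""
    match = _VMRSS.search(status_text)
    return int(match.group(1)) if match else 0  # kernel threads have no VmRSS line


def total_rss_kib(proc_root: str = "/proc") -> int:
    """Sum VmRSS over all processes; an upper bound, since shared pages are double-counted."""
    total = 0
    for entry in os.listdir(proc_root):
        if not entry.isdigit():  # only numeric directories are processes
            continue
        try:
            with open(os.path.join(proc_root, entry, "status")) as f:
                total += rss_kib(f.read())
        except OSError:
            continue  # the process exited or its status file is unreadable
    return total


if __name__ == "__main__":
    if os.path.isdir("/proc"):
        print(f"total RSS: {total_rss_kib() / 1024**2:.2f} GiB")
```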

Most cloud services provide some tools for analyzing memory use, but these have limitations and can incur extra costs. Many cloud providers do not include memory statistics in their default monitoring data. For example, the Amazon Web Services (AWS) CloudWatch monitoring service charges extra for access to memory data. And even companies that pay for this additional data may not be able to use it without analytics that know how to turn it into appropriate memory-sizing recommendations.

To avoid costly mistakes in sizing memory, a one-dimensional analysis is not enough. Best practice is to calculate the memory an instance actually requires as a function of both consumed memory and active memory, using a policy that earmarks enough extra memory for the operating system to do a reasonable amount of caching without becoming bloated. This strikes the best balance between cost efficiency and application performance. When the analytics run on an ongoing basis, changes can be made in incremental steps to minimize risk and disruption.
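A policy of this kind can be sketched as two small functions: one computes a target from consumed and active memory, and the other moves the allocation toward that target in bounded steps. Everything here is an illustrative assumption rather than a prescribed formula: the 25% cache allowance, the 25% maximum change per step, the 1GB granularity, and the function names. The sketch covers only the oversized case discussed in this post; detecting genuine memory pressure would need additional signals, such as swap or page-fault activity.

```python
import math


def target_memory_gb(consumed_gb: float, active_peak_gb: float,
                     cache_allowance_pct: float = 25.0) -> float:
    """Working set plus a policy-capped allowance for OS caching (hypothetical policy)."""
    observed_cache_gb = max(consumed_gb - active_peak_gb, 0.0)  # cache in use today
    policy_cache_gb = active_peak_gb * cache_allowance_pct / 100.0  # cap on cache we pay for
    return active_peak_gb + min(observed_cache_gb, policy_cache_gb)


def next_allocation_gb(current_gb: float, target_gb: float,
                       max_change_pct: float = 25.0, granularity_gb: float = 1.0) -> float:
    """Move toward the target by at most max_change_pct, rounding up to the granularity."""
    max_change_gb = current_gb * max_change_pct / 100.0
    delta_gb = max(-max_change_gb, min(target_gb - current_gb, max_change_gb))
    return math.ceil((current_gb + delta_gb) / granularity_gb) * granularity_gb


if __name__ == "__main__":
    target = target_memory_gb(consumed_gb=16.0, active_peak_gb=5.0)
    allocation = 16.0
    for step in range(1, 5):
        allocation = next_allocation_gb(allocation, target)
        print(f"step {step}: {allocation:g} GB (target {target:g} GB)")
```

With 16GB consumed and a 5GB active peak, the target is 6.25GB, and successive steps go 16 → 12 → 9 → 7GB, settling at 7GB because each step rounds up to whole gigabytes.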

In the next blog post, we’ll discuss some tips for modernizing cloud instances as a way to generate more savings on top of right-sizing allocations.

More in this Series

This post is part of a series of five articles on how to reduce public cloud costs by choosing the right instances from public cloud vendors such as AWS, Azure, IBM, and Google.