OpenShift Service Mesh

Chapter 4 - Introduction: OpenShift Tutorial

- Chapter 1: OpenShift Architecture

- Chapter 2: OpenShift Route

- Chapter 3: OpenShift Alternatives

- Chapter 4: OpenShift Service Mesh

- Chapter 5: Understanding OpenShift Container Storage

- Chapter 6: Using Azure OpenShift

- Chapter 7: Rancher vs. OpenShift: The Guide

- Chapter 8: OpenShift Serverless: Guide & Tutorial

- Chapter 9: Anti-Affinity OpenShift: Tutorial & Instructions

- Chapter 10: OpenShift Operators: Tutorial & Instructions

Many DevOps teams use a service mesh to improve visibility and streamline management within complex microservices-based systems. A service mesh is a dedicated infrastructure layer, transparent to the applications it serves, that manages service-to-service communication: it can add capabilities such as traffic routing and policy enforcement to the network and improve observability.

In practice, a service mesh usually includes a control plane that dictates how the services communicate and a data plane that directs the traffic and enforces policies. One of the most popular enterprise-grade service mesh implementations is Red Hat OpenShift Service Mesh.

To help you better understand the topic, this article reviews Red Hat OpenShift Service Mesh use cases and explains how OpenShift implements a service mesh using the open-source projects Istio, Kiali, and Jaeger.

Red Hat OpenShift Service Mesh Use Cases

Service meshes support several use cases, and the configuration details vary for each. The table below summarizes the most common ones, which this article explores in more detail.

Red Hat OpenShift Service Mesh Use Cases

| Use Case | Description |
|---|---|
| A/B Testing | Test multiple versions of a feature to see which one users respond to more favorably. |
| Canary Deployments | Systematically phase out an older version of an application by gradually shifting the share of traffic from the old version to a new one. |
| Rate Limiting | Control the rate at which an application receives requests, ensuring that no individual instance is overloaded. |
| Access Control | Control who can access your application, and when. |

Understanding Red Hat OpenShift Service Mesh

Red Hat’s service mesh implementation is a combination of the following open-source projects:

- Istio – Traffic control

- Kiali – Traffic visualization

- Jaeger – Request tracing

This section reviews each component and explains how it fits into the service mesh.

As a reminder, Operators are the preferred way to install and manage applications on OpenShift: they automate deploying and updating an application's pods. Each of these components can be installed through an Operator from OperatorHub.

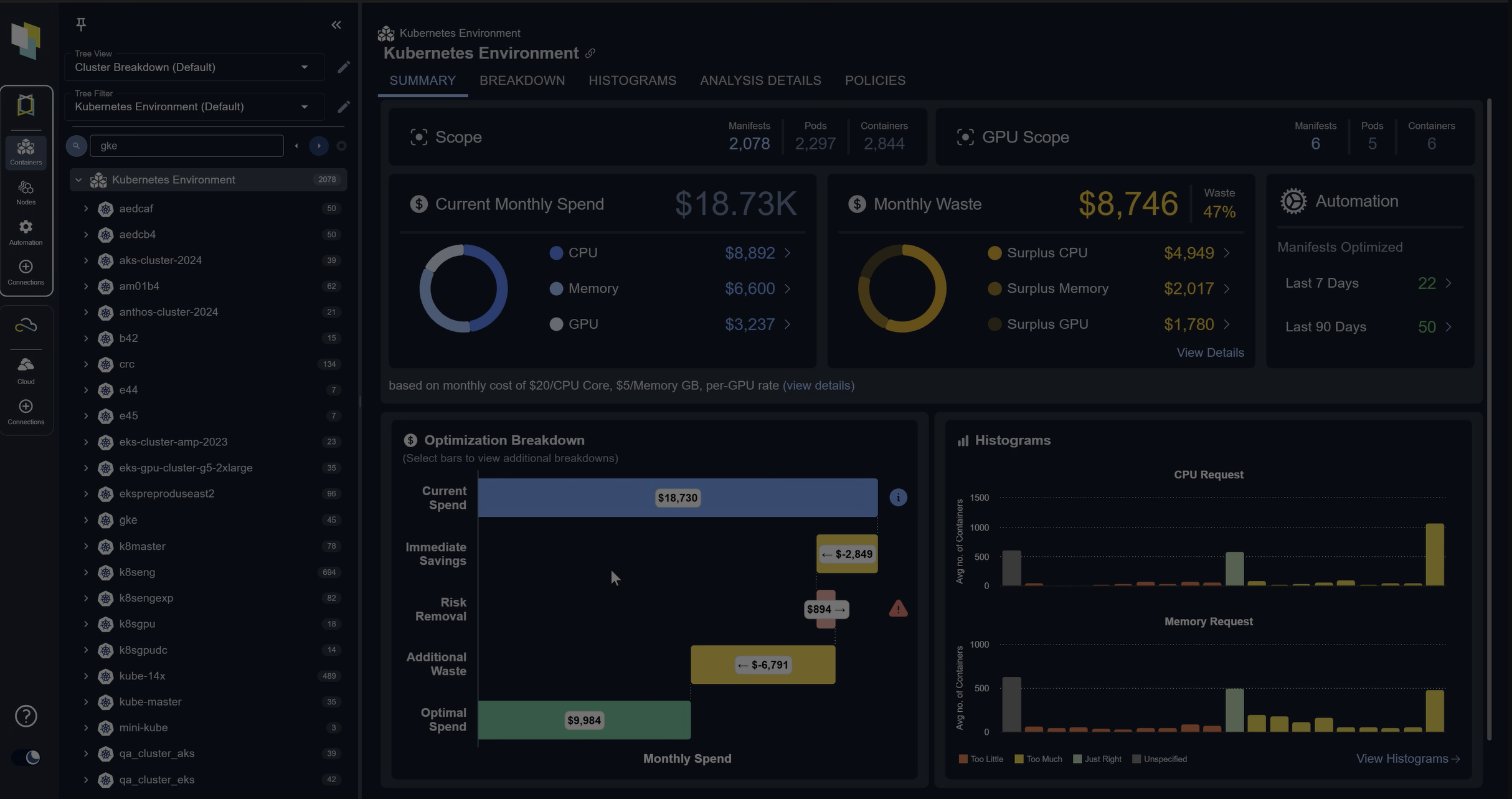
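As a rough illustration, once the OpenShift Service Mesh 2.x Operator (together with the Kiali and Jaeger distributed tracing Operators) is installed, a control plane that also deploys Kiali and Jaeger can be requested with a `ServiceMeshControlPlane` resource. This is a minimal sketch modeled on the 2.x "basic" profile; the `istio-system` namespace, the `version` value, and the in-memory trace storage are assumptions to adjust for your cluster, and newer releases of the product manage control planes and tracing differently.

```yaml
# Minimal OpenShift Service Mesh 2.x control plane (sketch; adjust for your cluster)
apiVersion: maistra.io/v2
kind: ServiceMeshControlPlane
metadata:
  name: basic
  namespace: istio-system      # assumption: the control plane namespace
spec:
  version: v2.4                # assumption: use a version your Operator supports
  tracing:
    type: Jaeger
    sampling: 10000            # scale of 0-10000, so 10000 samples 100% of requests
  addons:
    jaeger:
      install:
        storage:
          type: Memory         # in-memory trace storage, suitable only for testing
    kiali:
      enabled: true
      name: kiali
```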

Istio

Istio provides features such as:

- Security

- Traffic management

- Telemetry for traffic flow visualizations

Istio provides all of this functionality without teams having to develop and maintain it inside each application. This simplifies development: instead of designing these capabilities from scratch in every service, teams configure them once in the mesh.

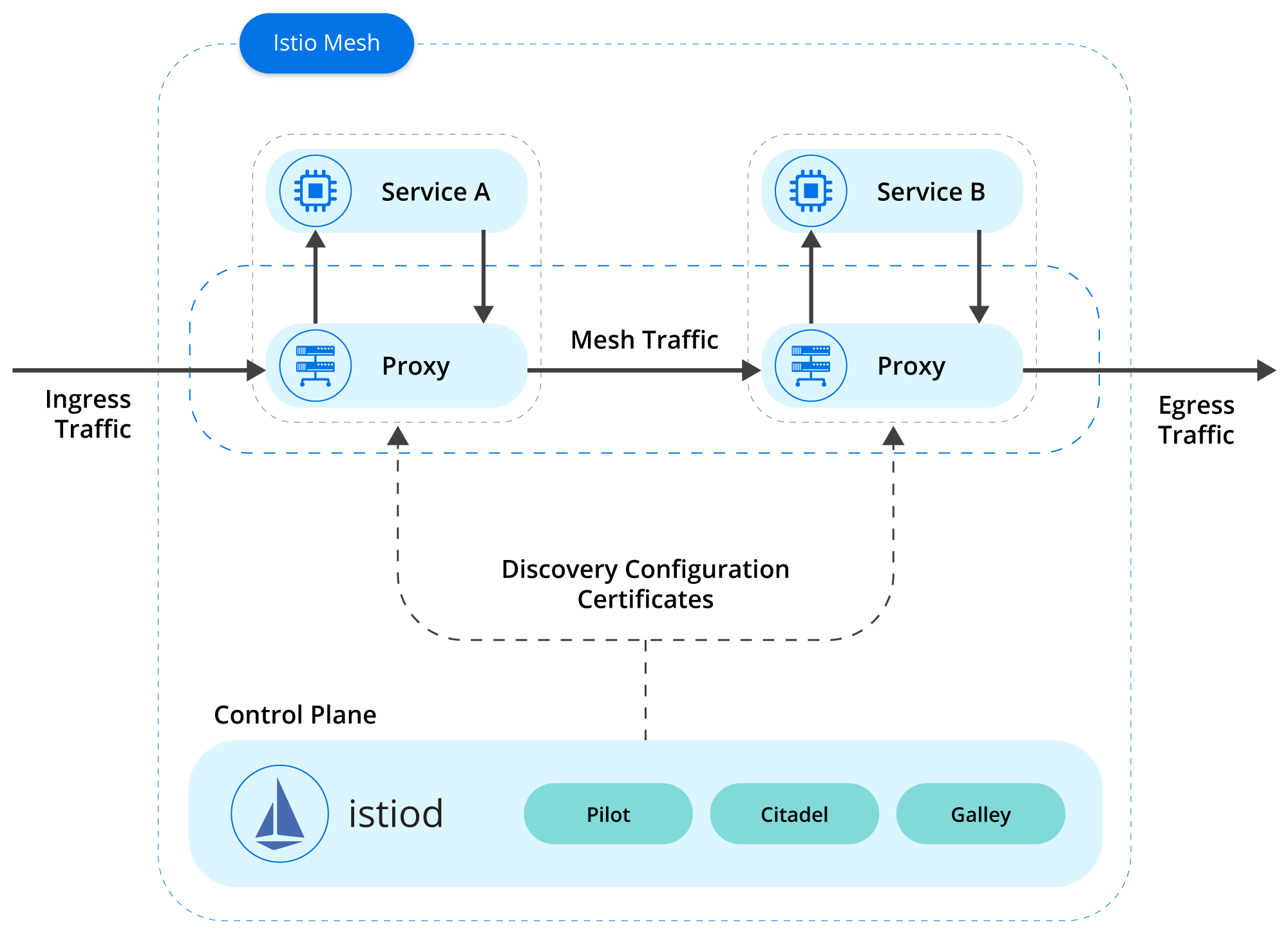

Istio consists of a control plane and a data plane. The control plane takes the desired configuration, expressed in resources such as virtual services and destination rules, and translates it into configuration for the proxies. It also injects proxy sidecars into application pods and keeps their configuration up to date. The data plane, which is made up of the sidecars, handles the communication between services. Without a service mesh, this functionality would need to be built into every application.

Each sidecar is an Envoy proxy container that runs inside an application pod; Istio redirects the pod's inbound and outbound network traffic through it. Together, the sidecars make up the Istio data plane, carrying the communication between the microservices.

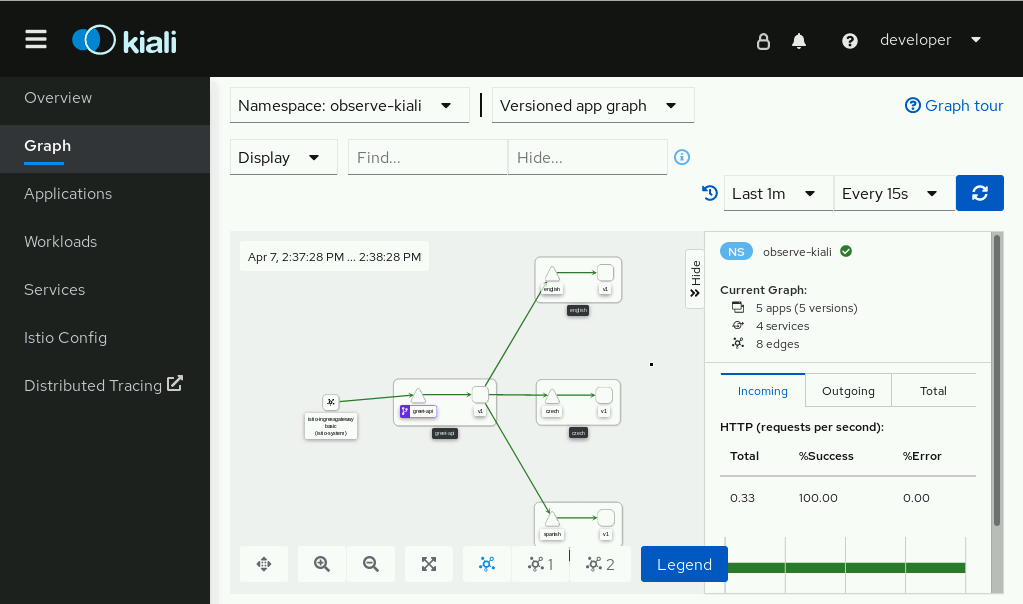

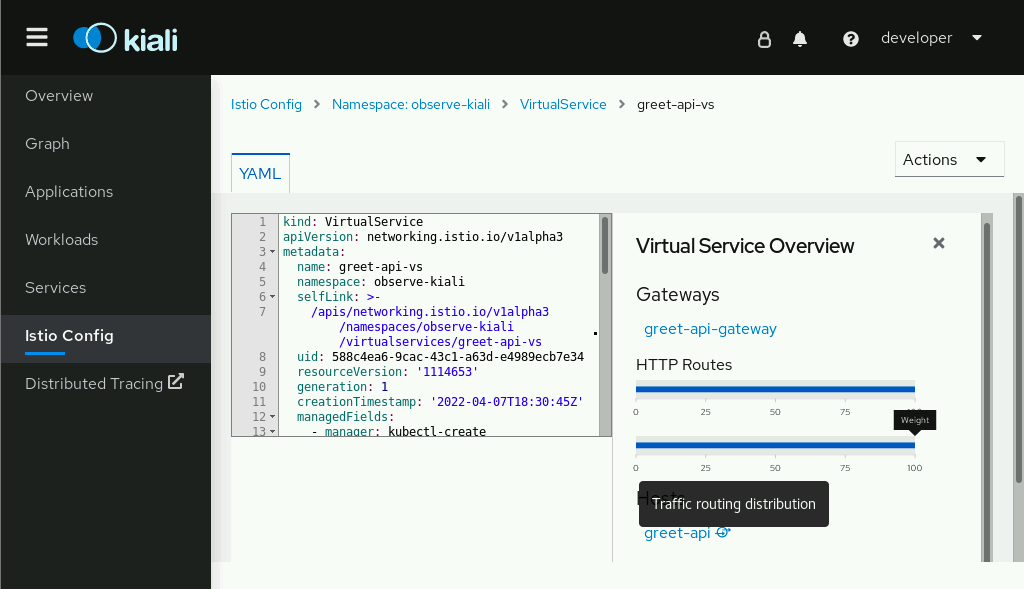
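In OpenShift Service Mesh 2.x, a namespace joins the mesh by being listed in the `ServiceMeshMemberRoll`, and a workload receives its Envoy sidecar when its pod template carries the `sidecar.istio.io/inject: "true"` annotation. The sketch below assumes a control plane in `istio-system` and uses a hypothetical `bookinfo` namespace, `reviews-v1` Deployment, and placeholder image; newer releases may rely on namespace labels for injection instead.

```yaml
# Add the hypothetical bookinfo namespace to the mesh
apiVersion: maistra.io/v1
kind: ServiceMeshMemberRoll
metadata:
  name: default                  # the member roll must be named "default"
  namespace: istio-system        # the control plane namespace
spec:
  members:
  - bookinfo
---
# Opt this workload in to sidecar injection
apiVersion: apps/v1
kind: Deployment
metadata:
  name: reviews-v1               # hypothetical Deployment
  namespace: bookinfo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: reviews
      version: v1
  template:
    metadata:
      labels:
        app: reviews
        version: v1              # version label that routing rules can select on
      annotations:
        sidecar.istio.io/inject: "true"
    spec:
      containers:
      - name: reviews
        image: registry.example.com/reviews:v1   # placeholder image
        ports:
        - containerPort: 9080
```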

Kiali

Kiali provides a visual management console for the Istio service mesh. It makes it easier to visualize the topology, see how traffic flows from service to service, and modify the Istio configuration.

The table below details Kiali’s key features.

Kiali Features

| Feature | Description |
|---|---|
| Topology visualizations | Once traffic is flowing, Kiali can visually represent all of the microservices reporting to it. |
| Tracing visualizations | As traffic flows, Kiali shows an animated path as a request makes its way through the environment. |
| Health visualizations | By looking at the visual representation of the environment, teams can quickly see the health of each service, which Kiali derives from signals such as request error rates and pod status. |

Jaeger

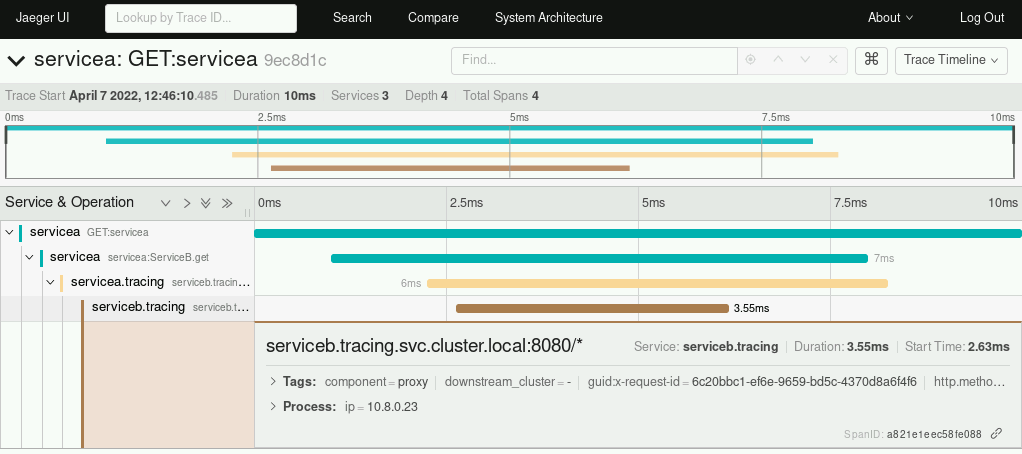

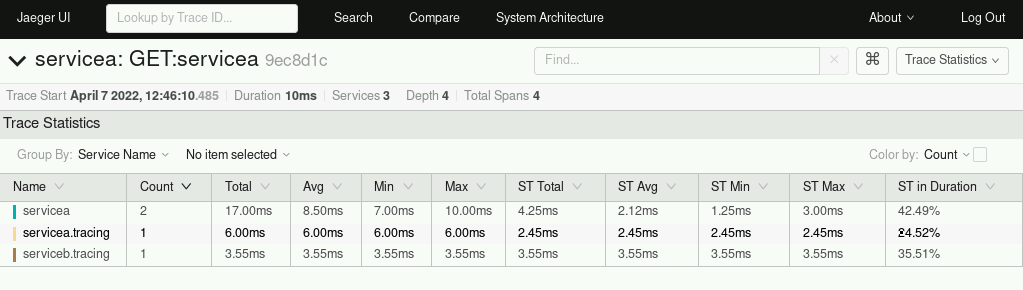

As part of OpenShift Service Mesh, Jaeger, which was originally created by Uber, provides distributed request tracing within an application. It is compatible with OpenTracing and was inspired by projects such as Dapper and OpenZipkin.

Jaeger records the path of a request through an application as a trace made up of spans. Each span marks the beginning and end of one unit of work, such as a call to a single service.

With this information, it is easier for a developer to understand traffic flows, identify bottlenecks, and determine the root cause of issues within an application.

OpenShift Service Mesh Functions

With the main components of Red Hat OpenShift Service Mesh (the “how”) covered, we can turn to the “why”: the use cases it enables.

A/B Testing

A/B testing compares two distinct versions of an application to see which version produces more of the desired outcome. Depending on the metrics, the outcome could be which version performs faster or which version leads to more sales conversions.

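Istio’s traffic management capabilities allow a developer to configure routes to one or more versions of an application and assign a weight to each. The sample routing rule below sends 50% of requests for the `reviews` service to pods labeled `version: v1` and 50% to pods labeled `version: v3`. It uses the legacy `config.istio.io/v1alpha2` RouteRule API from early Istio releases; later Istio releases express the same rule with a VirtualService and a DestinationRule.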

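```yaml
# Legacy Istio RouteRule: split reviews traffic evenly between v1 and v3
apiVersion: config.istio.io/v1alpha2
kind: RouteRule
metadata:
  name: reviews-default
spec:
  destination:
    name: reviews        # service the rule applies to
  precedence: 1          # relative priority among rules for this destination
  route:
  - labels:
      version: v1        # pods labeled version=v1
    weight: 50           # percentage of traffic
  - labels:
      version: v3        # pods labeled version=v3
    weight: 50
```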
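On current Istio releases, the same 50/50 split is written as a VirtualService that weights two named subsets, plus a DestinationRule that maps each subset to pod labels. A minimal sketch, assuming the `reviews` pods carry `version: v1` and `version: v3` labels as in the rule above and that the mesh serves the `networking.istio.io/v1beta1` API:

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: reviews
spec:
  hosts:
  - reviews                 # requests addressed to the reviews service
  http:
  - route:
    - destination:
        host: reviews
        subset: v1
      weight: 50            # weights across destinations should add up to 100
    - destination:
        host: reviews
        subset: v3
      weight: 50
---
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: reviews
spec:
  host: reviews
  subsets:                  # named groups of pods, selected by label
  - name: v1
    labels:
      version: v1
  - name: v3
    labels:
      version: v3
```

Separating the two resources means that changing the experiment only requires editing the `weight` values in the VirtualService.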

Canary Deployments

Automatic scaling is built into OpenShift and Kubernetes, and traffic and scaling are closely tied together.

In standard OpenShift, a developer who wants to roll out a new version of an application can update a deployment with a new image and let the platform replace the old pods with new ones. This works well for a version of an application that has already been tested and vetted.

What about the testing before that stage?

With Istio canary deployments, teams can have two different versions of an application deployed simultaneously. In this configuration, a virtual service load balances the traffic between deployments based on predefined weights.

For example, the v1 deployment of an application can receive 80% of the traffic while the remaining 20% is routed to v2 for testing.
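A minimal sketch of that 80/20 split, assuming a hypothetical `myapp` service whose pods are labeled `version: v1` and `version: v2`:

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: myapp
spec:
  hosts:
  - myapp                    # hypothetical service name
  http:
  - route:
    - destination:
        host: myapp
        subset: v1
      weight: 80             # stable version
    - destination:
        host: myapp
        subset: v2
      weight: 20             # canary version under test
---
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: myapp
spec:
  host: myapp
  subsets:
  - name: v1
    labels:
      version: v1
  - name: v2
    labels:
      version: v2
```

Promoting the canary is then a matter of raising the v2 weight in steps and lowering the v1 weight to match, until v2 reaches 100.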

Couple this routing with a horizontal pod autoscaler, and each deployment can scale with the load it receives. As confidence in v2 grows, its share of traffic can be increased. Eventually, v2 receives 100% of the traffic, and v1 can be phased out.
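To pair the canary with autoscaling, each version's Deployment can have its own HorizontalPodAutoscaler, so whichever version is receiving more traffic adds replicas as its load grows. This sketch assumes a hypothetical `myapp-v2` Deployment, a cluster that serves the `autoscaling/v2` API, and CPU utilization as a stand-in for traffic volume; the replica bounds and the 70% target are illustrative, not recommendations.

```yaml
# Hypothetical autoscaler for the v2 (canary) Deployment
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: myapp-v2
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: myapp-v2            # hypothetical Deployment running version v2
  minReplicas: 1
  maxReplicas: 5              # illustrative bounds
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 70   # % of the CPU each pod requests; pods must set CPU requests
```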

Rate Limiting

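Rate limiting enables DevOps teams to control the amount of traffic sent to each instance of an application, protecting the underlying infrastructure against overload.

For example, if each of ten application instances is rate limited, teams know the maximum load any one instance can receive and gain a better understanding of overall utilization. In turn, this information enables better decisions about infrastructure growth.

The following ConfigMap holds the configuration for the Envoy rate limit service, which Istio can use for global rate limiting: requests to `/productpage` are limited to 1 per minute, and requests to any other path are limited to 100 per minute.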

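```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: ratelimit-config
data:
  # Configuration file read by the Envoy rate limit service
  config.yaml: |
    domain: productpage-ratelimit
    descriptors:
      # Requests whose PATH is /productpage: 1 request per minute
      - key: PATH
        value: "/productpage"
        rate_limit:
          unit: minute
          requests_per_unit: 1
      # Any other PATH value: 100 requests per minute
      - key: PATH
        rate_limit:
          unit: minute
          requests_per_unit: 100
```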
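The ConfigMap above only configures the rate limit service itself; that service must be deployed separately, and an EnvoyFilter must connect the proxies to it. For a limit enforced independently by each sidecar, with no external service, Envoy's local rate limit filter can be inserted with an EnvoyFilter instead. This is a minimal sketch modeled on Istio's local rate limiting example, assuming a hypothetical `productpage` workload in a hypothetical `bookinfo` namespace and an OpenShift Service Mesh version that permits EnvoyFilter resources (check the product documentation; EnvoyFilter patches Envoy internals directly and can break across upgrades).

```yaml
# Per-sidecar (local) rate limit for a hypothetical productpage workload
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: productpage-local-ratelimit     # hypothetical name
  namespace: bookinfo                   # hypothetical: same namespace as the workload
spec:
  workloadSelector:
    labels:
      app: productpage
  configPatches:
  - applyTo: HTTP_FILTER
    match:
      context: SIDECAR_INBOUND          # traffic arriving at this workload's sidecar
      listener:
        filterChain:
          filter:
            name: envoy.filters.network.http_connection_manager
    patch:
      operation: INSERT_BEFORE          # no subFilter given, so insert at the front of the chain
      value:
        name: envoy.filters.http.local_ratelimit
        typed_config:
          "@type": type.googleapis.com/udpa.type.v1.TypedStruct
          type_url: type.googleapis.com/envoy.extensions.filters.http.local_ratelimit.v3.LocalRateLimit
          value:
            stat_prefix: http_local_rate_limiter
            token_bucket:               # each sidecar allows bursts of 10, refilled every 60s
              max_tokens: 10
              tokens_per_fill: 10
              fill_interval: 60s
            filter_enabled:             # evaluate the limit for 100% of requests
              runtime_key: local_rate_limit_enabled
              default_value:
                numerator: 100
                denominator: HUNDRED
            filter_enforced:            # reject over-limit requests (HTTP 429) for 100% of them
              runtime_key: local_rate_limit_enforced
              default_value:
                numerator: 100
                denominator: HUNDRED
```

Because every sidecar keeps its own token bucket, ten replicas of the workload together accept up to ten times the per-sidecar limit.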

Access Control

In OpenShift and Kubernetes, NetworkPolicies define which pods can communicate with each other. Istio provides AuthorizationPolicies for a similar purpose.

Istio’s control plane translates AuthorizationPolicies into Envoy proxy configuration, so the sidecars enforce them at layer 7, the application layer. This makes them a very flexible way to control traffic to applications.
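For contrast, a Kubernetes NetworkPolicy works at layers 3 and 4: it can admit traffic from a namespace on a port, but it cannot tell a GET request from a POST. A minimal sketch, assuming a hypothetical `httpbin` workload listening on port 8080 in namespace `foo`, and relying on the `kubernetes.io/metadata.name` label that recent Kubernetes versions set on every namespace:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: httpbin-allow-dev          # hypothetical name
  namespace: foo
spec:
  podSelector:
    matchLabels:
      app: httpbin                 # pods this policy protects
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: dev
    ports:
    - protocol: TCP
      port: 8080                   # hypothetical port; every HTTP method on it is treated alike
```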

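Two key benefits of AuthorizationPolicies are that they:

- Support both ALLOW and DENY actions

- Can distinguish between HTTP methods, such as GET and POST

For example, the following policy denies POST requests from workloads in the `dev` namespace to every workload in the `foo` namespace.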

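```yaml
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: httpbin
  namespace: foo
spec:
  # No selector, so the policy applies to every workload in the foo namespace
  action: DENY
  rules:
  - from:
    - source:
        # Callers in the dev namespace (identified through mutual TLS)
        namespaces: ["dev"]
    to:
    - operation:
        # ...are denied when they send HTTP POST requests
        methods: ["POST"]
```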
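An ALLOW policy with method and path conditions shows the layer 7 distinction from the other direction. A minimal sketch, assuming a hypothetical client running as the `sleep` service account in the `default` namespace, the default `cluster.local` trust domain, and mutual TLS enabled so that the caller's identity (principal) is available:

```yaml
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: httpbin-allow-get        # hypothetical name
  namespace: foo
spec:
  selector:
    matchLabels:
      app: httpbin               # applies only to the httpbin workload
  action: ALLOW
  rules:
  - from:
    - source:
        # Identity of the hypothetical sleep service account
        principals: ["cluster.local/ns/default/sa/sleep"]
    to:
    - operation:
        methods: ["GET"]         # only GET requests match this rule
        paths: ["/info*"]        # ...to paths starting with /info
```

Once any ALLOW policy selects a workload, requests that match none of its rules are denied, so the `sleep` client can send GET requests to paths starting with `/info` but not POST requests.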

Conclusion

It’s often said that “what is measured, improves”. A service mesh is an important tool for measuring your cloud-native application’s performance.

In this article, we reviewed how Red Hat OpenShift Service Mesh uses Istio, Kiali, and Jaeger to implement a service mesh with built-in traffic controls, observability, and tracing. With OpenShift Service Mesh, developers gain a more complete view of their applications. As a result, they can optimize performance and proactively address minor issues before they become major problems.

FAQs

What is OpenShift Service Mesh?

OpenShift Service Mesh is Red Hat’s enterprise-grade service mesh built on Istio, Kiali, and Jaeger. It provides traffic control, observability, and request tracing for microservices running in OpenShift clusters.

What are common use cases for OpenShift Service Mesh?

Typical use cases include A/B testing, canary deployments, rate limiting, and fine-grained access control. These help teams improve release strategies, protect applications, and manage traffic effectively.

How does Istio fit into OpenShift Service Mesh?

Istio provides the core traffic management layer, deploying Envoy proxy sidecars into pods. It handles routing, load balancing, and TLS encryption, and it enforces traffic policies across services.

What role do Kiali and Jaeger play in OpenShift Service Mesh?

Kiali provides a visual interface for service topology, traffic flow, and health monitoring. Jaeger enables distributed tracing to diagnose performance bottlenecks and trace requests across microservices.

Why should organizations adopt OpenShift Service Mesh?

Adopting OpenShift Service Mesh gives DevOps teams observability, reliability, and security without embedding these functions into each application. This reduces developer overhead and accelerates safe, scalable deployments.
