Understanding OpenShift Container Storage

Chapter 5 - Introduction: OpenShift Tutorial

Red Hat OpenShift Container Storage is an integrated collection of cloud storage and data services for the Red Hat OpenShift Container Platform. Packaged as an OpenShift operator, OpenShift Container Storage facilitates simple deployment and management of storage resources.

With OpenShift Container Storage, organizations can address a key challenge of container administration:

What happens if a container running a stateful application fails?

Without persistent storage, applications can lose data, which can lead to lost revenue and lower customer satisfaction. Persistent storage solves this problem, but teams still need to answer questions like:

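- Does your container storage provide uniform features and functionality regardless of the underlying infrastructure?

- Is it dynamic, so that volumes can be provisioned on demand?

- Is it shared, so that multiple containers can read and write the same data?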

OpenShift Container Storage helps DevOps teams address these implementation questions and build more reliable applications. To help you find the right solution for your infrastructure, this article will explain the benefits, deployment models, and monitoring options for OpenShift Container Storage.

Summary of Key Benefits

Red Hat OpenShift Container Storage allows organizations to streamline and scale container storage management. The table below outlines its key benefits.

| Benefit | Description |
|---|---|
| Environment Independent | OpenShift Container Storage is not tied to a specific environment and can run on any underlying infrastructure. |
| High Availability and Disaster Recovery | OpenShift Container Storage supports HA and DR sites on all major public cloud platforms. |
| Easily Configurable | OpenShift Container Storage is easy to configure through a user-friendly GUI. When it is deployed with OpenShift Container Platform, logging, metrics, a configured registry (regardless of the underlying infrastructure), and a storage service for apps all come preconfigured out of the box. |
| Scalable | OpenShift Container Storage can be configured to scale automatically as storage requirements change. |
| Customizable Deployment Options | OpenShift Container Storage is highly customizable and offers multiple deployment scenarios for different use cases. |
| Built-In Monitoring | OpenShift Container Storage offers in-depth monitoring, including alerts and automated corrective actions. |

Red Hat OpenShift Container Storage Overview

Red Hat OpenShift Container Storage is made available to applications through storage classes representing the following components:

- Block storage, primarily for database workloads. Block storage splits data into chunks (blocks) and stores each one as a separate piece with its own unique identifier.

- Shared and distributed file systems (DFS), primarily for software development, messaging, and data aggregation. As the name suggests, a DFS is a file system distributed across multiple file servers or locations.

- Multi-cloud object storage, which exposes a lightweight S3-compatible API and can store and retrieve data across multiple cloud object stores.

- On-premises object storage, which provides a robust S3 API endpoint that can scale to tens of petabytes, primarily for data-intensive applications.
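Applications usually select one of these back ends by naming a storage class. Block and file storage are requested with a PersistentVolumeClaim. Object buckets are typically requested with an ObjectBucketClaim. The sketch below maps each workload type to a storage class name.

The class names (`ocs-storagecluster-ceph-rbd`, `ocs-storagecluster-cephfs`, `openshift-storage.noobaa.io`, `ocs-storagecluster-ceph-rgw`) are assumed defaults for a typical internal-mode install. Verify them with `oc get storageclass` on your own cluster. The helpers `storage_class_for` and `claim_kind` are hypothetical.

```python
# Hypothetical mapping from workload type to an OpenShift Container Storage
# storage class. The names are assumed defaults for an internal-mode install;
# check `oc get storageclass` on your own cluster before relying on them.
STORAGE_CLASSES = {
    "block": "ocs-storagecluster-ceph-rbd",              # databases
    "file": "ocs-storagecluster-cephfs",                 # shared file system
    "object-multicloud": "openshift-storage.noobaa.io",  # Multicloud Object Gateway
    "object-onprem": "ocs-storagecluster-ceph-rgw",      # on-premises S3 endpoint (RGW)
}


def storage_class_for(workload: str) -> str:
    """Return the storage class for a workload type, or raise KeyError."""
    if workload not in STORAGE_CLASSES:
        raise KeyError(f"unknown workload {workload!r}; expected one of {sorted(STORAGE_CLASSES)}")
    return STORAGE_CLASSES[workload]


def claim_kind(workload: str) -> str:
    """Block and file storage use PVCs; object storage uses bucket claims."""
    storage_class_for(workload)  # validate the workload type first
    return "ObjectBucketClaim" if workload.startswith("object") else "PersistentVolumeClaim"


print(storage_class_for("block"))   # ocs-storagecluster-ceph-rbd
print(claim_kind("object-onprem"))  # ObjectBucketClaim
```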

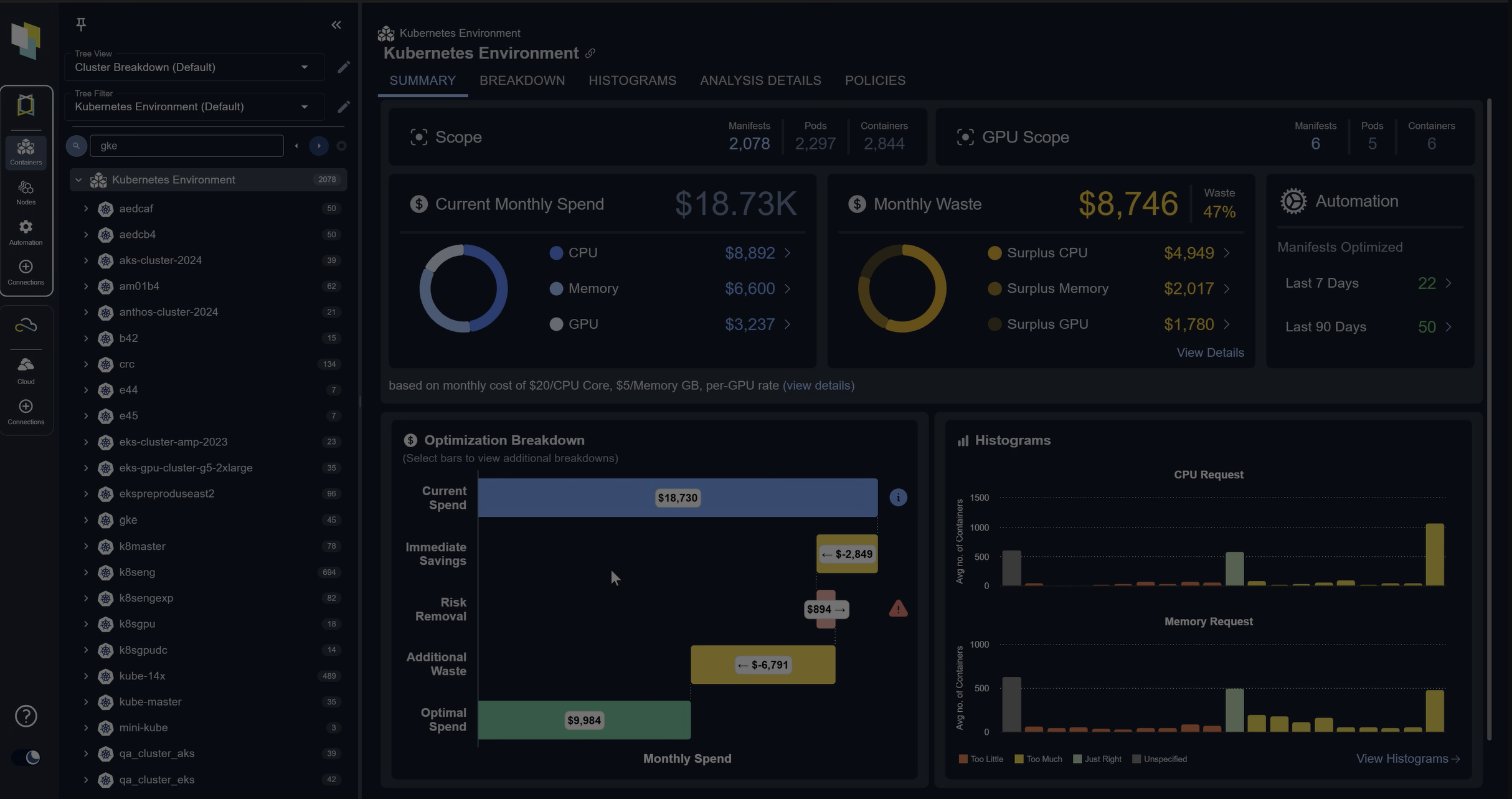

Red Hat OpenShift Container Storage Features

Environment Independent

Red Hat OpenShift Container Storage provides automated, scalable, and highly available persistent storage services regardless of environment, from bare metal to VMs to hybrid cloud.

When you deploy OpenShift with OpenShift Container Storage, you get logging, metrics, and a configured registry regardless of the underlying infrastructure. OpenShift Container Storage also provides a storage service for apps out of the box and HA storage for each OpenShift infrastructure type.

High Availability

To understand the business value of OpenShift Container Storage’s high availability, compare it to another persistent storage option for containers: AWS Elastic Block Store (EBS). An EBS volume lives in a single AWS availability zone, so it cannot provide OpenShift with storage that spans zones. If that zone fails, you lose access to the volume and to the stateful application that depends on it.

OpenShift Container Storage, on the other hand, spans availability zones. Because the data is replicated across zones, the stateful application keeps running if one zone fails. Red Hat delivers this in a cloud-provider-agnostic way, with the same features and user experience wherever OpenShift is deployed.
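A toy model can make the availability-zone argument concrete. Each copy of the data is pinned to a zone, and I/O continues as long as enough copies sit in surviving zones. This is not how Ceph actually places or serves data. The three-replica, `min_size=2` setting is an assumption that mirrors a common Ceph pool configuration, and the zone names are illustrative.

```python
from typing import Iterable


def surviving_replicas(replica_zones: Iterable[str], failed_zones: set[str]) -> int:
    """Count replicas placed in zones that are still up."""
    return sum(1 for zone in replica_zones if zone not in failed_zones)


def stays_available(replica_zones: Iterable[str], failed_zones: set[str], min_size: int = 2) -> bool:
    """Toy rule: I/O continues while at least `min_size` replicas survive.

    min_size=2 is an assumption, mirroring a common three-replica Ceph pool.
    """
    return surviving_replicas(replica_zones, failed_zones) >= min_size


spread = ["us-east-1a", "us-east-1b", "us-east-1c"]  # one replica per zone
single_zone = ["us-east-1a"]                          # EBS-like: one zone only
print(stays_available(spread, {"us-east-1a"}))                   # True: two replicas left
print(stays_available(single_zone, {"us-east-1a"}, min_size=1))  # False: nothing left
```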

Easily Configurable

Another advantage of OpenShift Container Storage is that it is fully automated and self-service. Other backend storage options require volumes to be pre-provisioned at fixed sizes. OpenShift Container Storage instead provisions volumes dynamically, sized to each user request. Once a volume is provisioned, users can extend it dynamically, which saves administrative overhead and reduces downtime.
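In Kubernetes terms, dynamic provisioning works in two steps:

1. A user submits a PersistentVolumeClaim.
2. The storage class's provisioner creates a matching volume.

To extend a volume later, you raise `spec.resources.requests.storage` on the claim, provided the storage class allows volume expansion. The sketch below builds such a claim and a JSON merge patch for growing it. It refuses to shrink the claim, because Kubernetes does not support shrinking PVCs. The claim name, sizes, and storage class name are illustrative assumptions, and the quantity parser only handles `Mi`, `Gi`, and `Ti`.

```python
import json

# Binary suffixes accepted by this sketch (a small subset of Kubernetes quantities).
_UNITS = {"Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    """Convert a quantity such as '10Gi' to bytes (Mi/Gi/Ti only)."""
    for suffix, factor in _UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    raise ValueError(f"unsupported quantity: {quantity}")


def pvc(name: str, size: str, storage_class: str) -> dict:
    """A PersistentVolumeClaim that the storage class provisions on demand."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
            "storageClassName": storage_class,
        },
    }


def expansion_patch(claim: dict, new_size: str) -> str:
    """Merge patch that grows the claim; Kubernetes does not allow shrinking PVCs."""
    current = claim["spec"]["resources"]["requests"]["storage"]
    if to_bytes(new_size) <= to_bytes(current):
        raise ValueError(f"{new_size} is not larger than current size {current}")
    return json.dumps({"spec": {"resources": {"requests": {"storage": new_size}}}})


claim = pvc("orders-db", "10Gi", "ocs-storagecluster-ceph-rbd")  # assumed class name
print(expansion_patch(claim, "20Gi"))
# {"spec": {"resources": {"requests": {"storage": "20Gi"}}}}
```

The printed JSON is the kind of body you could pass to `oc patch pvc orders-db --type merge -p '...'`.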

Scalable

OpenShift Container Storage also simplifies application scalability. A common way to scale out container-based apps is through shared storage, which allows multiple containers to write to the same volume simultaneously. However, most storage for containers today is not shared, including well-known options such as AWS EBS, Azure Disk, GCP Persistent Disk, and VMware vSAN.
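Kubernetes expresses the shared-versus-unshared distinction through a claim's access mode:

- `ReadWriteOnce` lets a single node mount the volume read-write.
- `ReadWriteMany` lets many nodes mount it read-write at once, which is what horizontally scaled pods need.

In OpenShift Container Storage, the CephFS-backed file storage class is the one typically used for `ReadWriteMany`. Treat that pairing as an assumption to confirm for your version. The function below is a hypothetical pre-flight check, not part of any OpenShift API, and the node names are illustrative.

```python
# Hypothetical pre-flight check: can pods spread across these nodes
# all mount the same claim read-write, given its access modes?
def can_share_read_write(access_modes: list[str], pod_nodes: list[str]) -> bool:
    nodes = set(pod_nodes)
    if "ReadWriteMany" in access_modes:
        return True              # many nodes may mount the volume read-write
    if "ReadWriteOnce" in access_modes:
        return len(nodes) <= 1   # read-write from a single node only
    return False                 # e.g. ReadOnlyMany: no writers at all


# Three replicas of a web app scheduled onto three different worker nodes.
replicas_on = ["worker-1", "worker-2", "worker-3"]
print(can_share_read_write(["ReadWriteOnce"], replicas_on))  # False
print(can_share_read_write(["ReadWriteMany"], replicas_on))  # True
```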

Red Hat OpenShift Container Storage Deployment Options

There are two deployment approaches for Red Hat OpenShift Container Storage: internal and external.

Internal Approach

OpenShift Container Storage can be deployed entirely within the OpenShift Container Platform cluster. This model is called the internal deployment approach. The internal approach offers all the out-of-the-box deployment benefits discussed earlier in this article. Within the internal approach, there are two deployment options: simple and optimized.

Simple deployment

In a simple deployment, OpenShift Container Storage services co-exist with the applications running on the container platform. This type of deployment is best when:

- Storage requirements are not yet clear

- OpenShift Container Storage services will use the same infrastructure as the applications

- Creating a dedicated node for storage services is not ideal (test or small deployments)

Internal deployments of OpenShift Container Storage are available for OpenShift Container Platform running on the following infrastructure:

- AWS – Deployment instructions for OpenShift Container Storage on AWS can be found here.

- Bare Metal – Deployment instructions for OpenShift Container Storage on a Bare Metal system can be found here.

- GCP – Deployment instructions for OpenShift Container Storage on GCP can be found here.

- Azure – Deployment instructions for OpenShift Container Storage on Azure can be found here.

- VMware vSphere – Deployment instructions for OpenShift Container Storage on VMware vSphere can be found here.

Optimized deployment

OpenShift Container Storage services can also run on their own dedicated infrastructure. This is known as an optimized deployment. An optimized deployment is best when:

- Storage services need to be isolated on dedicated infrastructure

- A new node dedicated to storage services is easy to deploy (virtualized environments)

- The additional cost of a dedicated node makes sense (enterprise deployments)

- Request volume is high enough that dedicated storage nodes are needed to meet performance demands

- Storage requirements are clear
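An optimized deployment usually combines two mechanisms. A label on the dedicated nodes steers the storage components onto them. A taint on the same nodes keeps ordinary application pods away. The label key `cluster.ocs.openshift.io/openshift-storage` and the taint `node.ocs.openshift.io/storage=true:NoSchedule` used below are, to our knowledge, the keys Red Hat documents for dedicated OpenShift Container Storage nodes; confirm them for your release.

The sketch does two things:

- It builds a Node merge patch that applies the label and the taint.
- It checks, with simplified matching rules, whether a pod's tolerations let it land on such a node.

```python
# Assumed label and taint keys for dedicated OpenShift Container Storage nodes;
# confirm them against the documentation for your release.
STORAGE_LABEL = "cluster.ocs.openshift.io/openshift-storage"
STORAGE_TAINT = {"key": "node.ocs.openshift.io/storage", "value": "true", "effect": "NoSchedule"}


def dedicated_node_patch() -> dict:
    """Node merge patch: label the node for storage and taint it against other pods.

    A JSON merge patch replaces the node's entire taint list; in practice
    `oc label node` and `oc adm taint nodes` are the safer tools.
    """
    return {
        "metadata": {"labels": {STORAGE_LABEL: ""}},
        "spec": {"taints": [dict(STORAGE_TAINT)]},
    }


def tolerates(tolerations: list[dict], taint: dict) -> bool:
    """Simplified version of Kubernetes toleration matching for one taint."""
    for tol in tolerations:
        effect_ok = tol.get("effect", "") in ("", taint["effect"])
        if tol.get("operator", "Equal") == "Exists":
            # An empty key with operator Exists tolerates every taint.
            key_ok = tol.get("key", "") in ("", taint["key"])
            value_ok = True
        else:
            key_ok = tol.get("key") == taint["key"]
            value_ok = tol.get("value", "") == taint.get("value", "")
        if key_ok and value_ok and effect_ok:
            return True
    return False


storage_pod = [{"key": "node.ocs.openshift.io/storage", "operator": "Equal",
                "value": "true", "effect": "NoSchedule"}]
app_pod: list[dict] = []
print(tolerates(storage_pod, STORAGE_TAINT))  # True: storage pods may land here
print(tolerates(app_pod, STORAGE_TAINT))      # False: app pods are kept off
```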

External Approach

OpenShift Container Storage can make services from a Ceph storage cluster that runs outside the OpenShift Container Platform cluster available to OpenShift applications. This is known as the external approach because the storage cluster is external to OpenShift.

The external approach is best when:

- A significant amount of storage is required.

- A Ceph storage cluster is already configured and available to be used.

- Multiple container platforms require access to common storage infrastructure.
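The criteria from the simple, optimized, and external sections can be condensed into a rough decision helper. This is a hypothetical illustration that reduces each criterion to a yes/no input. It encodes one reasonable reading of the guidance above, and real sizing decisions involve more factors than this.

```python
from dataclasses import dataclass


@dataclass
class Environment:
    """Hypothetical yes/no answers to the questions raised in this article."""
    existing_ceph_cluster: bool = False        # a Ceph cluster is already available
    shared_by_multiple_platforms: bool = False
    large_storage_footprint: bool = False
    requirements_clear: bool = False
    high_request_volume: bool = False
    dedicated_nodes_affordable: bool = False   # extra nodes are easy to add and worth the cost


def recommend_mode(env: Environment) -> str:
    """Return 'external', 'internal-optimized', or 'internal-simple'."""
    if env.existing_ceph_cluster or env.shared_by_multiple_platforms or env.large_storage_footprint:
        return "external"
    if env.high_request_volume:
        return "internal-optimized"  # performance calls for dedicated storage nodes
    if env.requirements_clear and env.dedicated_nodes_affordable:
        return "internal-optimized"
    return "internal-simple"         # test, small, or still-evolving deployments


print(recommend_mode(Environment()))  # internal-simple
print(recommend_mode(Environment(requirements_clear=True, dedicated_nodes_affordable=True)))  # internal-optimized
print(recommend_mode(Environment(existing_ceph_cluster=True)))  # external
```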

OpenShift Container Storage can make available services from an external Ceph storage cluster running on the following platforms:

- Bare Metal – Deployment instructions for OpenShift Container Storage on a Bare Metal system can be found here.

- VMware vSphere – Deployment instructions for OpenShift Container Storage on VMware vSphere can be found here.

OpenShift Container Storage Monitoring

This section discusses four critical aspects of monitoring OpenShift Container Storage: health, metrics, alerts, and telemetry.

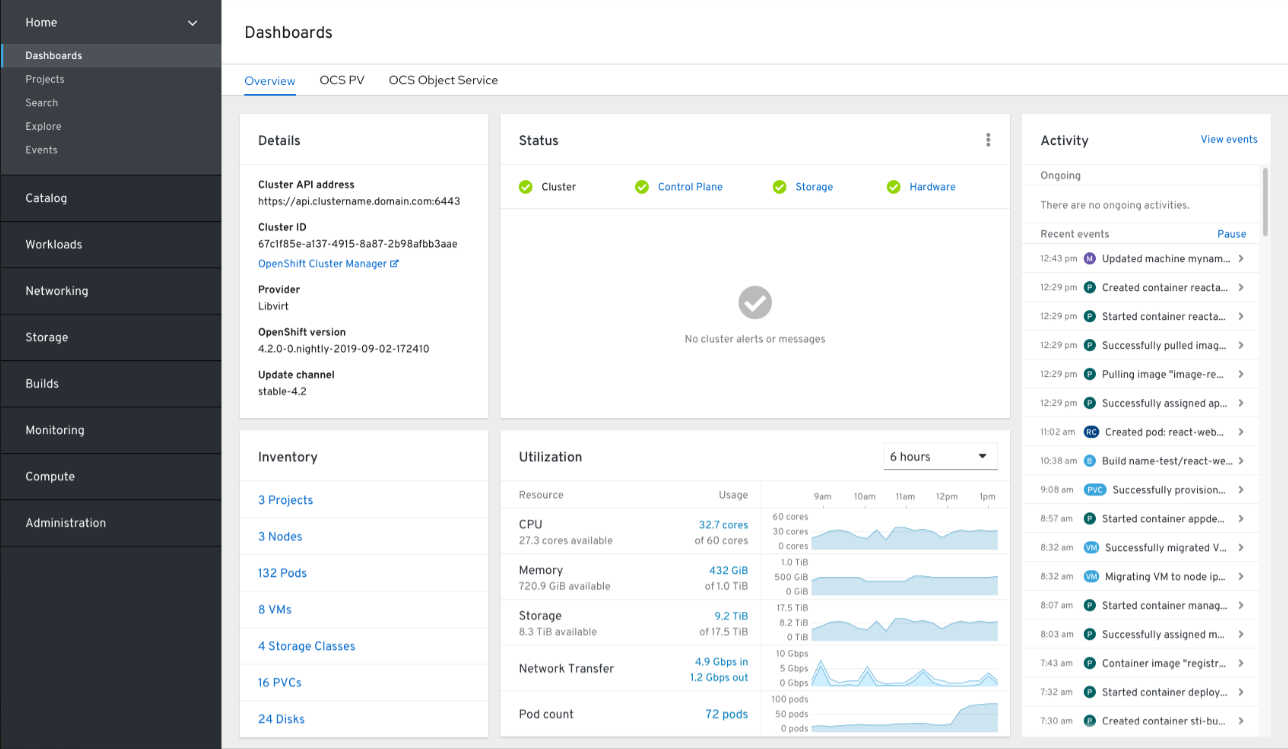

Health

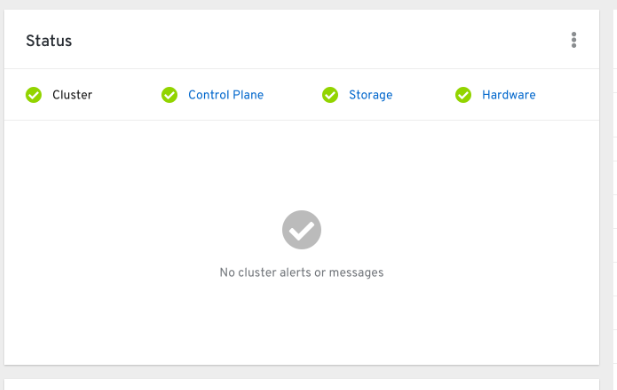

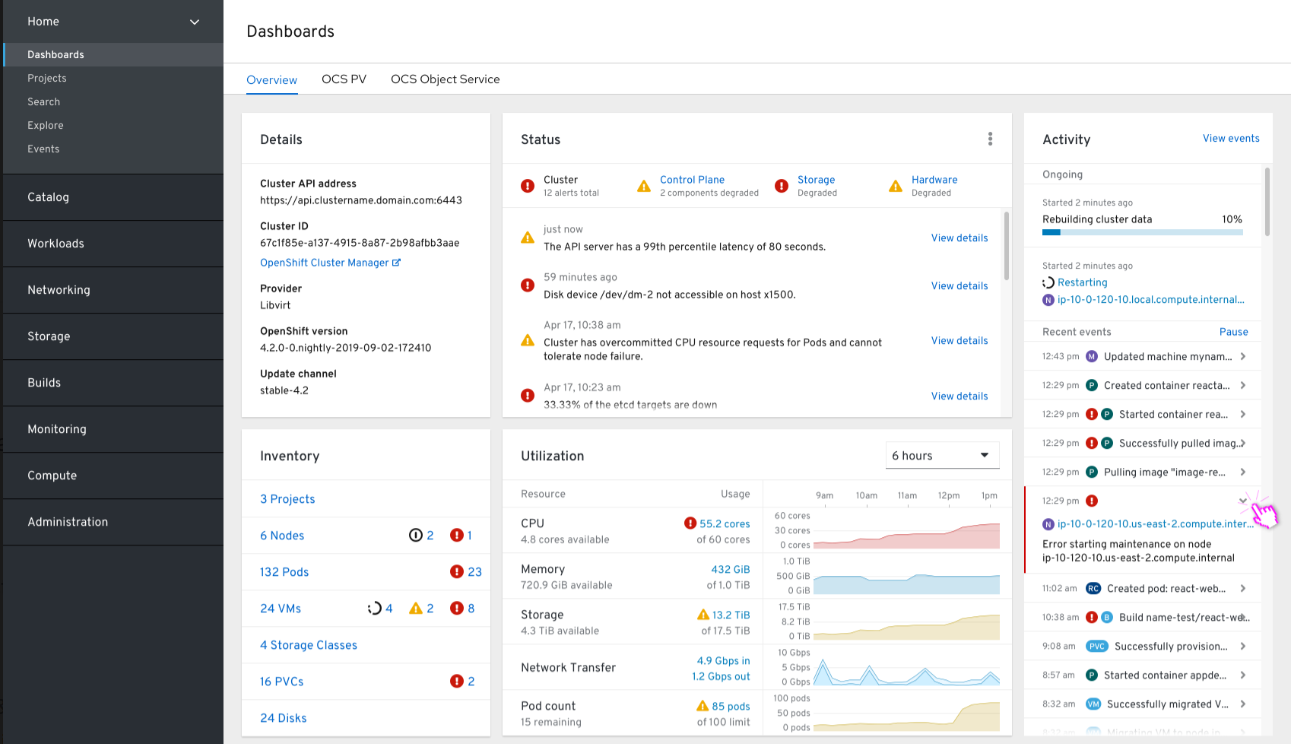

Storage cluster health is a vital aspect of OpenShift Container Storage monitoring. Storage health is visible on the Block and File and Object dashboards in the OpenShift Web Console. Administrators can check health status in the overview pane of each dashboard.

A component is healthy if its health status shows a green check mark, as in the screenshot above.

If the cluster shows a warning or error status, as in the screenshot above, refer to this official OpenShift document for further information.
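Outside the console, a similar signal comes from Ceph's own health status, which reports `HEALTH_OK`, `HEALTH_WARN`, or `HEALTH_ERR`. The sketch maps those values to the console's healthy, warning, and error states and lists the failing checks.

The input shape is an assumption. It is a dict loosely modeled on `ceph health detail --format json`, with a `status` field and a `checks` map, and field names may differ between Ceph versions. The sample data is illustrative, not captured from a real cluster.

```python
CONSOLE_STATE = {"HEALTH_OK": "healthy", "HEALTH_WARN": "warning", "HEALTH_ERR": "error"}


def summarize_health(report: dict) -> tuple[str, list[str]]:
    """Return (console-style state, sorted list of 'CHECK: message' strings)."""
    state = CONSOLE_STATE.get(report.get("status"), "unknown")
    problems = [
        f"{name}: {check.get('summary', {}).get('message', '')}"
        for name, check in sorted(report.get("checks", {}).items())
    ]
    return state, problems


sample = {  # illustrative sample, not captured from a real cluster
    "status": "HEALTH_WARN",
    "checks": {"OSD_DOWN": {"severity": "HEALTH_WARN", "summary": {"message": "1 osds down"}}},
}
print(summarize_health(sample))  # ('warning', ['OSD_DOWN: 1 osds down'])
```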

Metrics

Metrics are found under the Overview section of the Block and File dashboard. Individual dashboard cards provide cluster information from multiple categories. The available cards are:

- Details Card – Provides a brief overview of the cluster details, such as the service name, cluster name, deployment mode, and operator version. It also shows the status of the cluster and whether the cluster has any errors.

- Cluster Inventory Card – Shows the inventory information of the cluster, such as the number of nodes, pods, persistent volume claims, VMs, and hosts in the cluster. In external deployment mode, the number of nodes is 0 by default because there are no dedicated nodes for Container Storage.

- Cluster Health Card – Presents an overview of cluster health. It includes all relevant alerts and their descriptions to help resolve health issues.

- Cluster Capacity Card – Displays the current capacity, which is useful for planning future deployments and understanding current performance considerations. It includes information such as CPU time, memory allocation, storage consumed, and network resources consumed.

- Cluster Utilization Card – Shows the consumption of various resources over time so that administrators can understand utilization trends.

- Event Card – Lists recent events related to the cluster, giving an at-a-glance overview of activities performed on the cluster.

- Top Consumers Card – Shows the pods and nodes consuming the most resources.
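The Cluster Capacity and Cluster Utilization cards answer two related questions: how full the cluster is now, and how fast it is filling. A back-of-the-envelope projection combines both. The sketch fits an ordinary least-squares line through one used-capacity sample per day, then estimates how many days remain until usage reaches a threshold. The 85% threshold and the sample numbers are illustrative assumptions, and the console does not necessarily compute this figure.

```python
from typing import Optional


def days_until_threshold(used_tib: list[float], total_tib: float,
                         threshold: float = 0.85) -> Optional[float]:
    """Project days until usage crosses `threshold` of total capacity.

    Fits an ordinary least-squares line through one sample per day.
    Returns None if usage is flat or shrinking, 0.0 if already past the threshold.
    """
    n = len(used_tib)
    if n < 2:
        raise ValueError("need at least two daily samples")
    mean_x = (n - 1) / 2
    mean_y = sum(used_tib) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(used_tib))
    den = sum((x - mean_x) ** 2 for x in range(n))
    slope = num / den  # TiB per day
    if slope <= 0:
        return None
    remaining = threshold * total_tib - used_tib[-1]
    return max(remaining, 0.0) / slope


# Illustrative samples: usage grows about 1 TiB per day on a 30 TiB cluster.
print(days_until_threshold([10, 11, 12, 13, 14], total_tib=30))  # 11.5
```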

Alerts

Administrators can configure alerts in the Alerting UI, which lets you manage alerts, silences, and alerting rules.

An alerting rule defines a condition; when the condition evaluates to true, an alert is triggered. For example, a rule could generate an alert when a cluster’s storage capacity reaches a certain percentage or when the cluster goes down.

An alert is generated as soon as its condition is true and is delivered to a user or group of administrators, depending on the configuration. Users can also view alerts on the corresponding dashboard cards.

Administrators can apply silences to stop an alerting rule from sending further notifications. For example, if the cluster is down and an administrator is already working on the issue, they may silence the rule to prevent repeated alerts. Silences are also helpful during scheduled maintenance. To learn more about alerts, see this official OpenShift documentation.
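The relationship between rules, alerts, and silences fits in a few lines. This is a simplified illustration, not the Prometheus or Alertmanager implementation:

- Each rule is a named condition evaluated against current metrics.
- A rule whose condition is true fires an alert.
- A silence suppresses notifications for the rules it names.

The rule names, metric keys, and 75% threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    name: str
    condition: Callable[[dict], bool]  # evaluated against current metric values


def evaluate(rules: list[Rule], metrics: dict, silenced: set[str]) -> list[str]:
    """Return the names of alerts that fire and are not silenced."""
    firing = [r.name for r in rules if r.condition(metrics)]
    return [name for name in firing if name not in silenced]


rules = [
    Rule("StorageCapacityHigh", lambda m: m["used_bytes"] / m["total_bytes"] >= 0.75),
    Rule("StorageClusterDown", lambda m: not m["cluster_up"]),
]
metrics = {"used_bytes": 80, "total_bytes": 100, "cluster_up": False}

print(evaluate(rules, metrics, silenced=set()))
# ['StorageCapacityHigh', 'StorageClusterDown']
# During maintenance, an administrator silences the outage rule:
print(evaluate(rules, metrics, silenced={"StorageClusterDown"}))
# ['StorageCapacityHigh']
```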

Telemetry

OpenShift Container Storage sends a carefully chosen subset of cluster monitoring metrics to Red Hat through an integrated Telemetry component. Telemetry data is sent in real time, allowing Red Hat to respond quickly to issues that may affect customers.

A cluster that sends data to Red Hat through Telemetry is called a connected cluster. To learn more about Telemetry and the types of information it collects, visit this link.

Conclusion

As resilient, dynamic, and automated persistent storage for any infrastructure, OpenShift Container Storage saves time and money while reducing the risk of human error. This makes it an ideal option for versatile, multi-purpose storage for containerized applications.

The ease of deployment and management, coupled with extensive deployment options independent of the underlying infrastructure, makes it one of the best storage technologies available in the market right now.

When deployed with OpenShift, OpenShift Container Storage comes with many valuable features preconfigured, which makes it an ideal choice for teams using the OpenShift Container Platform.

With multiple deployment options to choose from, it is essential to weigh the pros and cons of each. The information covered here will help you make the right choice. For more information on planning, deploying, and managing OpenShift Container Storage, check out this OpenShift knowledge base article.

FAQs

What is OpenShift Container Storage?

OpenShift Container Storage is Red Hat’s integrated storage solution for OpenShift clusters. It provides persistent, scalable, and environment-independent storage for containerized applications running on any infrastructure.

Why is OpenShift Container Storage important for stateful applications?

Without persistent storage, stateful applications risk losing data if containers fail. OpenShift Container Storage ensures data durability, high availability, and disaster recovery across availability zones.

What deployment options exist for OpenShift Container Storage?

OpenShift Container Storage supports two main deployment options. Internal deployments run storage inside the OpenShift cluster, either in a simple or optimized configuration. External deployments connect OpenShift to external Ceph clusters, offering scalable and multi-platform storage for larger environments.

What types of storage does OpenShift Container Storage support?

It supports block storage for databases, distributed file systems for collaboration, and S3-compatible object storage for both multi-cloud and on-premises environments.

What monitoring features are included in OpenShift Container Storage?

It offers dashboards for health, metrics, capacity, and utilization, along with configurable alerts and telemetry. These features provide proactive monitoring and automated responses to storage issues.
