Anti-Affinity OpenShift: Tutorial & Instructions


In OpenShift, part of deploying a pod is deciding where to schedule the pod. Pod scheduling is handled by the openshift-kube-scheduler pods running on each controller node. By default, any workload can be placed on any worker node. However, administrators may want to control pod placement.

There are three common techniques to solve this problem: node affinity, pod affinity, and pod anti-affinity.

OpenShift Pod Placement Techniques

| Technique | Description |
|---|---|
| Node Affinity | Schedule pods where the node matches specific labels. |
| Pod Affinity | Schedule pods on nodes where matching pod labels already exist. |
| Pod Anti-Affinity | Avoid scheduling pods where matching pod labels already exist. |

This article will cover pod anti-affinity, which is the practice of scheduling pods while avoiding other pods based on labels. We will review the most common anti-affinity OpenShift use cases and the pod specs required to configure pod anti-affinity. We will also review what happens when a pod fails to schedule because of pod anti-affinity rules.

Anti-affinity OpenShift Use Cases

Before discussing how to configure pod anti-affinity, let us discuss why you might want such specific control over pod scheduling. While specific requirements vary depending on infrastructure, available resources, and workload needs, the two most common anti-affinity OpenShift use cases are high availability and pod pairing.

Anti-affinity OpenShift Use Cases

| Use Case | Description |
|---|---|
| High Availability | Ensure multiple pods are not running on the same node. |
| Pod Pairing | In some cases, pod anti-affinity rules can be combined with pod-affinity rules to pair specific sets of pods together and still distribute them across the cluster. |

High Availability

Pods may need to run on different nodes to ensure high availability for databases or caching applications. In these cases, the anti-affinity rules are configured to avoid nodes where the application is already running. One example is running one Elasticsearch pod per OpenShift cluster node. Another is Percona, which includes anti-affinity rules in its operator.

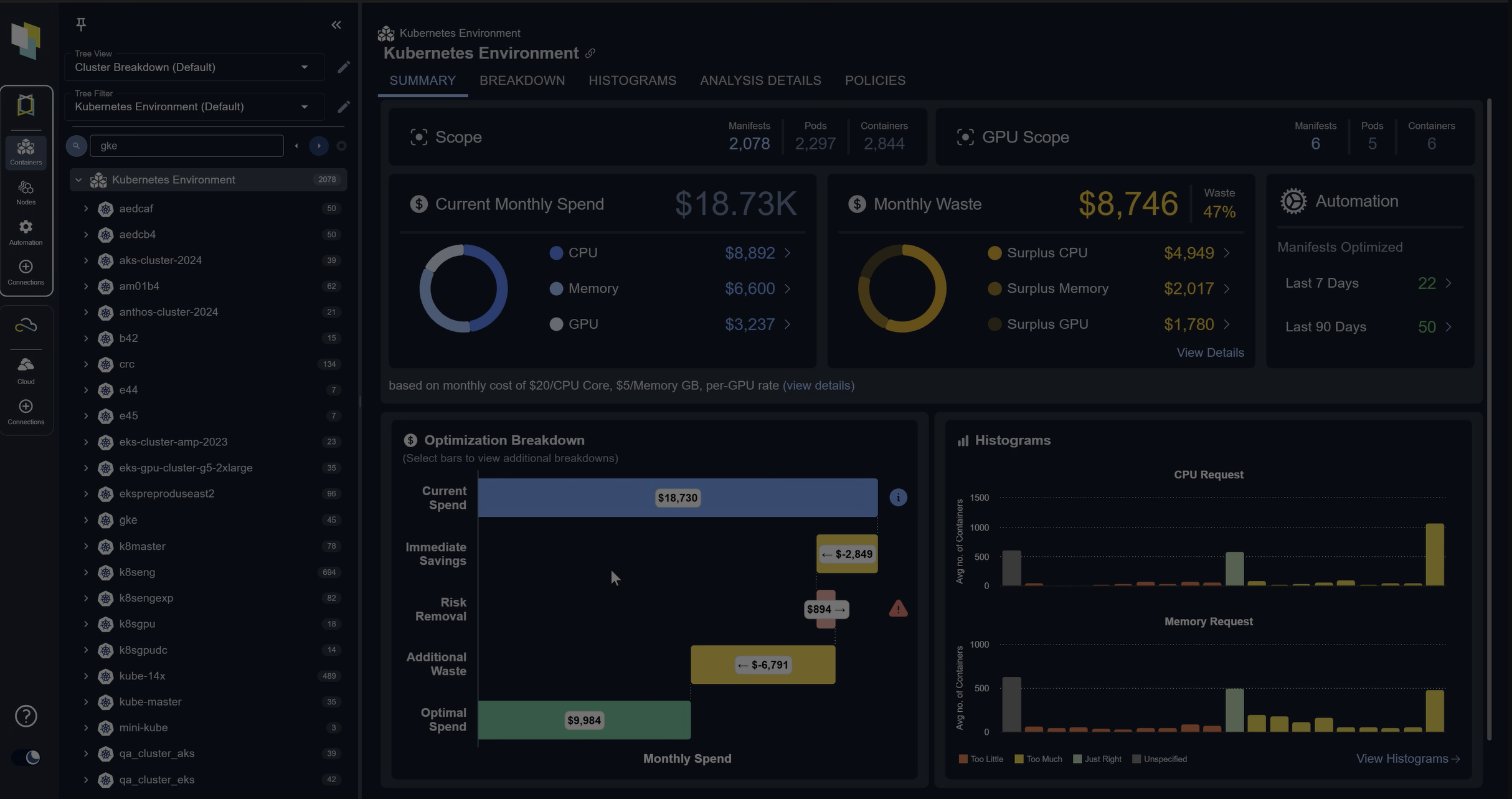

Pod Pairing

Sometimes, it is beneficial to pair pods. For example, you may want your Python application paired with a Redis cache, but you only want one cache pod and one application pod per node. In that case, using both pod affinity and pod anti-affinity is ideal.

Configuring Pod Anti-Affinity in OpenShift

Now that we know the why, let’s move on to the how. This section will review the files required to configure pod anti-affinity in OpenShift.

We will use Deployments to configure and launch our pods for our examples. If you are unfamiliar with Deployments, consult the OpenShift documentation.

The first example shows the lines of the pod spec that configure pod anti-affinity. The bracketed numbers in the comments are referenced in the table that follows.

    affinity:                                             # [1]
      podAntiAffinity:                                    # [2]
        requiredDuringSchedulingIgnoredDuringExecution:   # [3]
        - labelSelector:                                  # [4]
            matchExpressions:                             # [5]
            - key: app                                    # [6]
              operator: In                                # [7]
              values:                                     # [8]
              - hello-openshift                           # [9]
          topologyKey: "kubernetes.io/hostname"           # [10]

| Line # | Content | Description |
|---|---|---|
| 1 | affinity: | Starts the affinity section, which is part of the pod spec. |
| 2 | podAntiAffinity: | Specifies that we are configuring pod anti-affinity. |
| 3 | requiredDuringSchedulingIgnoredDuringExecution: | The option name is long but breaks down into two points: (1) do not schedule the pod unless the rules are met, and (2) if labels change after the pod is running so that the rules are no longer met, the pod keeps running. The alternative option, “preferredDuringSchedulingIgnoredDuringExecution”, tries to satisfy the rules, but the pod is still scheduled if no node satisfies them. |
| 4 | - labelSelector: | Configures label selection. |
| 5 | matchExpressions: | Sets up the label expressions to match. |
| 6 | - key: app | The label key to compare, in this case, app. |
| 7 | operator: In | How to compare the label. In matches when the label’s value is one of the listed values. |
| 8 | values: | The list of values to match. |
| 9 | - hello-openshift | The value to match, in this case, “hello-openshift”. When multiple values are listed, any of them can match. |
| 10 | topologyKey: "kubernetes.io/hostname" | Names the node label that defines the scope of the rule. Nodes that share the same value for this label are treated as one topology domain, and the pod avoids any domain that already runs a matching pod. The kubernetes.io/hostname label is present on all nodes by default and is unique per node, so every node is in scope and the rule applies per node. |

To review, this configuration section states that the pod must not be scheduled on a node that is already running a pod with the label app=hello-openshift.
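Because this rule matches a single value for a single key, the same selector can also be written with matchLabels, the shorthand form of a Kubernetes label selector. The fragment below is a minimal sketch of that equivalent form, not part of the original example; it should behave the same as lines 4 through 9 of the snippet above.

```yaml
affinity:
  podAntiAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
    - labelSelector:
        # Shorthand for: key app, operator In, values [hello-openshift]
        matchLabels:
          app: hello-openshift
      topologyKey: "kubernetes.io/hostname"
```

The matchExpressions form remains the better choice when you need several allowed values or other operators such as NotIn or Exists.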

Below is an example of the deployment as a complete file. This file creates a deployment and launches pods with the app=hello-openshift label.

The podAntiAffinity rules will dictate that if a pod with the app=hello-openshift label is already on the node, it will not place another pod on that node.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: hello-openshift
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: hello-openshift
      template:
        metadata:
          labels:
            app: hello-openshift   # label the anti-affinity rule matches on
        spec:
          affinity:
            podAntiAffinity:
              # Hard rule: never share a node with another app=hello-openshift pod
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchExpressions:
                  - key: app
                    operator: In
                    values:
                    - hello-openshift
                topologyKey: "kubernetes.io/hostname"
          containers:
          - name: hello-openshift
            image: openshift/hello-openshift:latest
            ports:
            - containerPort: 80

Notice that only one replica is configured here. Because the rule allows only one of these pods per node, the cluster can run at most one replica per worker node. On a cluster with three worker nodes, increasing the replicas to four leaves one pod unscheduled. We’ll review what happens in that case in the When a Pod Cannot Be Scheduled section below.
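If leaving a replica unscheduled is not acceptable, the hard rule can be relaxed into a preference. The fragment below is a sketch of how the affinity section might look with preferredDuringSchedulingIgnoredDuringExecution; it is an adaptation for illustration, not part of the original deployment. In the preferred form, each entry wraps the selector in a podAffinityTerm and carries a weight between 1 and 100. The weight of 100 used here is an arbitrary illustrative choice.

```yaml
affinity:
  podAntiAffinity:
    # Soft rule: the scheduler tries to avoid nodes that already run a
    # matching pod, but still places the pod if every node has one.
    preferredDuringSchedulingIgnoredDuringExecution:
    - weight: 100                 # illustrative value; valid range is 1-100
      podAffinityTerm:
        labelSelector:
          matchExpressions:
          - key: app
            operator: In
            values:
            - hello-openshift
        topologyKey: "kubernetes.io/hostname"
```

With this variant, extra replicas beyond the number of worker nodes would be expected to share a node rather than stay unscheduled, at the cost of weaker high-availability guarantees.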

The next example combines pod affinity and pod anti-affinity to pair pods.

This Deployment file launches three Redis caching pods, each on a node where no pod with the label app=cache is already running. Effectively, this places one cache pod per node.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: cache
    spec:
      selector:
        matchLabels:
          app: cache
      replicas: 3
      template:
        metadata:
          labels:
            app: cache
        spec:
          affinity:
            podAntiAffinity:
              # Keep cache pods apart: at most one app=cache pod per node
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchExpressions:
                  - key: app
                    operator: In
                    values:
                    - cache
                topologyKey: "kubernetes.io/hostname"
          containers:
          - name: redis-server
            image: redis:3.2-alpine

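This next example uses podAffinity to require that each web pod is scheduled on a node already running a pod with the app=cache label, while podAntiAffinity keeps the web pods apart from each other. Together, the rules ensure that one cache pod and one web pod are scheduled per node.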

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: web
    spec:
      selector:
        matchLabels:
          app: web
      replicas: 3
      template:
        metadata:
          labels:
            app: web
        spec:
          affinity:
            podAntiAffinity:
              # Keep web pods apart: at most one app=web pod per node
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchExpressions:
                  - key: app
                    operator: In
                    values:
                    - web
                topologyKey: "kubernetes.io/hostname"
            podAffinity:
              # Pair with the cache: only use nodes that run an app=cache pod
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchExpressions:
                  - key: app
                    operator: In
                    values:
                    - cache
                topologyKey: "kubernetes.io/hostname"
          containers:
          - name: web
            image: nginx:1.16-alpine
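All of the rules above use kubernetes.io/hostname as the topologyKey, so they spread pods across individual nodes. As a sketch of how the topologyKey changes that scope, the fragment below uses the well-known topology.kubernetes.io/zone label instead, so that no two cache pods share an availability zone. It assumes that your nodes carry this label (you can check with oc get nodes --show-labels) and that the cluster has at least as many zones as replicas. It is an illustration, not part of the original examples.

```yaml
affinity:
  podAntiAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
    - labelSelector:
        matchExpressions:
        - key: app
          operator: In
          values:
          - cache
      # Assumption: nodes are labeled with their zone. Nodes that share a
      # zone value count as one domain, so only one cache pod fits per zone.
      topologyKey: "topology.kubernetes.io/zone"
```

A wider topology domain protects against the loss of a whole zone, but it also lowers the number of replicas that a required rule can place.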

When a Pod Cannot Be Scheduled

When pod anti-affinity rules are configured, it is possible that no node meets the requirements for scheduling. In this case, the pod enters a Pending state. This section reviews how to identify these pending pods using oc, the OpenShift command-line client. If you are unfamiliar with oc, consult the OpenShift CLI documentation.

When a pod cannot be scheduled, it shows a Pending status, as shown below. In our example, we increased the replicas of the hello-openshift Deployment to four on a cluster with three worker nodes, so one of the pods could not be placed on a node.

    $ oc get pods
    NAME                               READY   STATUS    RESTARTS   AGE
    hello-openshift-7c669458bc-bf65x   0/1     Pending   0          4m5s
    hello-openshift-7c669458bc-cqllf   1/1     Running   0          4m5s
    hello-openshift-7c669458bc-gnbgd   1/1     Running   0          4m5s
    hello-openshift-7c669458bc-gv5lh   1/1     Running   0          4m5s

Running the command oc describe pod POD_NAME, where POD_NAME is the name of the pending pod, provides more information about the pod. The output will look similar to the output below.

    Events:
      Type     Reason            Age                  From               Message
      ----     ------            ----                 ----               -------
      Warning  FailedScheduling  7m44s                default-scheduler  0/6 nodes are available: 3 node(s) didn't match pod anti-affinity rules, 3 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate.
      Warning  FailedScheduling  6m8s (x1 over 7m8s)  default-scheduler  0/6 nodes are available: 3 node(s) didn't match pod anti-affinity rules, 3 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate.

We can see why the pod cannot be scheduled in the output. Notice the result shows 0/6 nodes available. It notes three are the controller nodes (where workloads cannot be scheduled), and three do not match the pod anti-affinity rules.

If we need to view the podAntiAffinity rules in place, we look at the pod definition.

    $ oc get pod hello-openshift-7c669458bc-bf65x -o yaml

The output is long, but you want to look for the affinity section similar to the snippet below.

    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - hello-openshift
            topologyKey: kubernetes.io/hostname

In our example, a pod will not be placed on a node where a pod with the app=hello-openshift label is already running. We can view the pod labels, along with the node each pod runs on, as shown below.

    $ oc get pods -o wide --show-labels
    NAME                               READY   STATUS    RESTARTS   AGE    IP            NODE       NOMINATED NODE   READINESS GATES   LABELS
    hello-openshift-7c669458bc-bf65x   0/1     Pending   0          3h2m                                                               app=hello-openshift,pod-template-hash=7c669458bc
    hello-openshift-7c669458bc-cqllf   1/1     Running   0          3h2m   10.120.1.36   worker-2                                      app=hello-openshift,pod-template-hash=7c669458bc
    hello-openshift-7c669458bc-gnbgd   1/1     Running   0          3h2m   10.120.1.27   worker-1                                      app=hello-openshift,pod-template-hash=7c669458bc
    hello-openshift-7c669458bc-gv5lh   1/1     Running   0          3h2m   10.120.1.93   worker-0                                      app=hello-openshift,pod-template-hash=7c669458bc

Conclusion

Controlling where a pod is placed is a powerful OpenShift practice. Node affinity, pod affinity, and pod anti-affinity are three techniques for controlling pod placement. This article covered the use cases and configuration for pod anti-affinity.

The use cases and configuration examples here are a great starting point for OpenShift anti-affinity, but your needs may vary from cluster to cluster. You can use the YAML files from our examples as a baseline to customize your pod anti-affinity rules to meet advanced requirements.

FAQs

What is anti-affinity in OpenShift?

Anti-affinity in OpenShift is a scheduling rule that keeps a pod off any node that already runs pods matching a given label selector. It is most often used to prevent multiple replicas of the same application from sharing a node, which improves workload distribution and reduces the risk of downtime.

What are common use cases for anti-affinity OpenShift rules?

The two main use cases are high availability (distributing replicas across nodes for resiliency) and pod pairing (combining affinity and anti-affinity rules to run complementary pods together while spreading them across the cluster).

How is anti-affinity configured in OpenShift?

Anti-affinity is defined in the affinity section of the pod spec, which for a Deployment sits in the pod template. Administrators specify label selectors and a topology key (commonly kubernetes.io/hostname) to control scheduling rules.

What happens if no nodes meet anti-affinity OpenShift rules?

If rules cannot be satisfied, pods enter a Pending state. Using oc describe pod reveals which rules prevented scheduling, allowing administrators to adjust replica counts or affinity settings.

What is the difference between required and preferred anti-affinity rules in OpenShift?

The requiredDuringSchedulingIgnoredDuringExecution option means pods will not be scheduled unless the anti-affinity rules are strictly met. In contrast, preferredDuringSchedulingIgnoredDuringExecution tells the scheduler to try to follow the rules but still allows pod placement if no node satisfies them.
