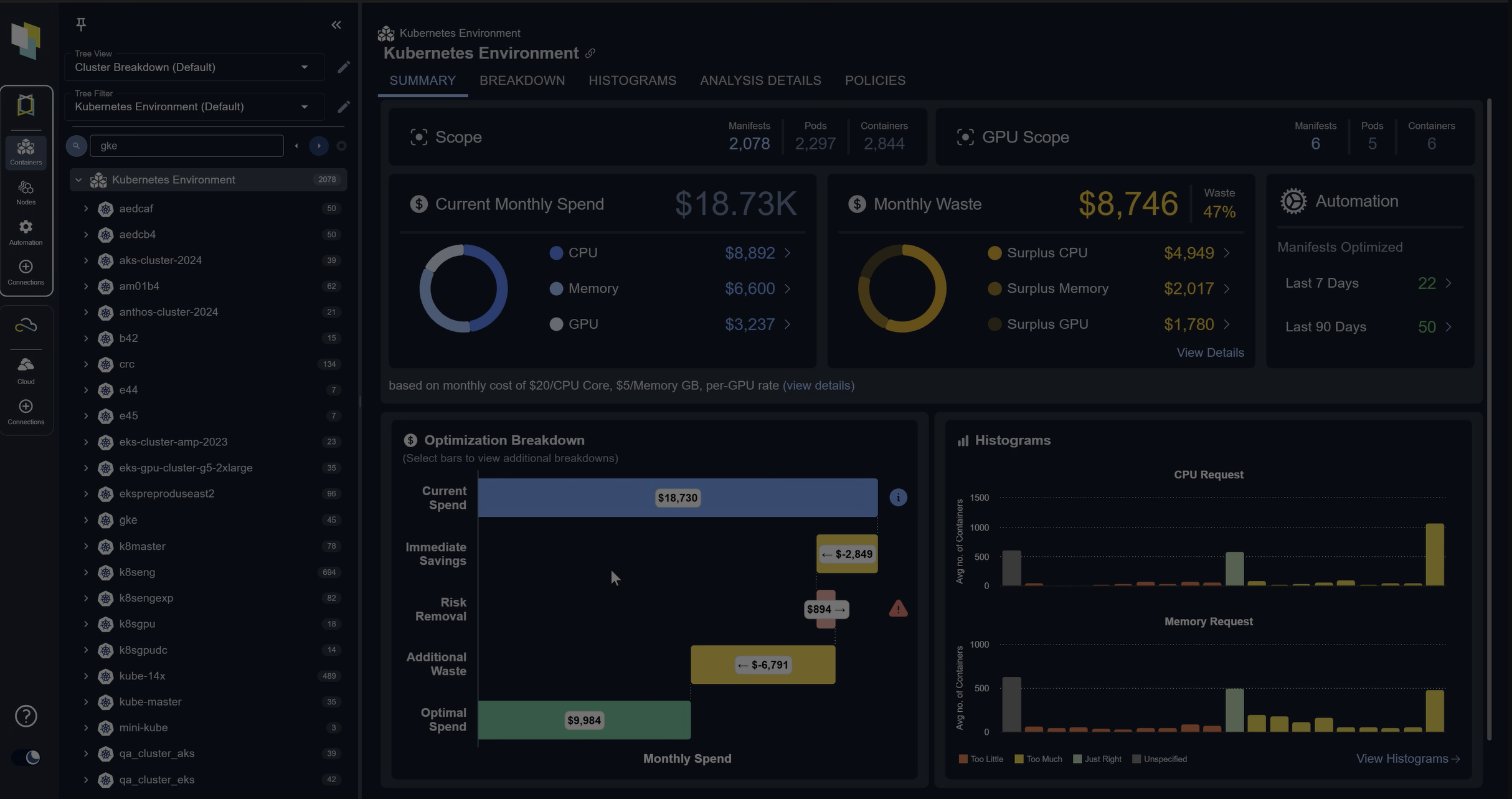

As enterprises push more application workloads into public clouds like Amazon Web Services (AWS), Microsoft Azure and Google Cloud, high cost is emerging as a major pain point. When each workload runs on its own cloud instance, the end customer loses the efficiencies that workload stacking creates. Optimizing and right-sizing instances based on workload patterns are the main levers left to cloud customers for controlling costs and increasing efficiency. But there’s an advanced strategy that can deliver dramatic savings: container stacking within cloud instances.

Container technologies, like the open source offerings from Docker, provide a “lightweight” virtual environment that lets multiple applications share an operating system while isolating each app’s processes. Stacking multiple containers within a single cloud instance lets those applications share the same OS and instance without interfering with each other. Container stacking can be an effective way to maximize application density and make the most of the cloud resources you have purchased.

Using containers allows you to leverage a key feature of virtualization: overcommit. As the term suggests, overcommit means assigning more resources to the workloads in an environment than the physical infrastructure can supply simultaneously. This works only if the workloads do not all need their full allocation at the same time. When that holds, you can reach high stacking ratios, like the VM-to-host ratios familiar from virtualization, and those ratios translate into significant cloud cost savings.
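To make the arithmetic concrete, here is a minimal sketch with invented numbers: three hypothetical containers, each allocated 4 vCPUs on a hypothetical 8-vCPU instance, with demand recorded in four time slots. The container names, allocations, and demand profiles are all assumptions for illustration.

```python
# All figures are hypothetical, chosen only to illustrate the arithmetic.
INSTANCE_VCPUS = 8.0

# Each container's allocated vCPUs and its actual demand in four
# time slots (e.g. morning, midday, evening, night).
containers = {
    "batch-reports": {"alloc": 4.0, "demand": [3.5, 0.5, 0.5, 0.5]},
    "web-frontend":  {"alloc": 4.0, "demand": [0.5, 3.5, 1.0, 0.5]},
    "etl-nightly":   {"alloc": 4.0, "demand": [0.5, 0.5, 1.0, 3.5]},
}


def overcommit_ratio(containers: dict, capacity: float) -> float:
    """Total allocated vCPUs divided by the vCPUs the instance actually has."""
    return sum(c["alloc"] for c in containers.values()) / capacity


def peak_concurrent_demand(containers: dict) -> float:
    """Largest combined demand in any single time slot."""
    per_slot = zip(*(c["demand"] for c in containers.values()))
    return max(sum(slot) for slot in per_slot)


ratio = overcommit_ratio(containers, INSTANCE_VCPUS)
peak = peak_concurrent_demand(containers)
print(f"overcommit ratio: {ratio:.2f}")  # 12 allocated / 8 physical
print(f"peak demand: {peak} of {INSTANCE_VCPUS} vCPUs")
print("safe to stack" if peak <= INSTANCE_VCPUS else "over capacity")
```

The allocations add up to 12 vCPUs on an 8-vCPU instance (a 1.5:1 overcommit), yet combined demand never exceeds 4.5 vCPUs because the busy periods are staggered. If all three containers peaked in the same slot, the same ratio would be unsafe.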

The concept of overcommit is familiar to anyone who regularly flies on commercial airlines. Based on experience, airlines know that not every passenger who books a flight will show up, so they sell more tickets than there are seats to keep every seat filled on every flight.

Of course, frequent flyers also know that sometimes every booked passenger does show up, and someone gets bumped off the flight. That happens because airlines base their booking predictions on averages for each route rather than on an in-depth predictive analysis of each passenger’s individual travel patterns. But that is exactly the kind of analysis you’ll want to perform to keep any of your apps from getting “bumped.” An in-depth understanding of each containerized workload over time is essential to stacking containers for the greatest efficiency while ensuring adequate resources are available at all times.
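The gap between route averages and individual patterns has a direct analogue for containers. The following minimal sketch compares sizing by averages with sizing by time-aligned demand, using two invented CPU profiles and a hypothetical 8-vCPU capacity.

```python
# Hypothetical CPU demand (vCPUs) for two workloads over six time slots.
CAPACITY = 8.0
app_a = [1.0, 1.0, 6.0, 1.0, 1.0, 1.0]
app_b = [1.0, 1.0, 5.0, 1.0, 1.0, 1.0]


def average(series: list[float]) -> float:
    return sum(series) / len(series)


def combined_peak(*series: list[float]) -> float:
    """Highest total demand when the series are aligned slot by slot."""
    return max(sum(slot) for slot in zip(*series))


# Average-based view: like planning a route on average no-show rates.
avg_total = average(app_a) + average(app_b)
# Time-aligned view: looks at what each workload does in each slot.
peak_total = combined_peak(app_a, app_b)

print(f"sum of averages: {avg_total:.2f} vCPUs, looks safe: {avg_total <= CAPACITY}")
print(f"combined peak:   {peak_total:.2f} vCPUs, actually safe: {peak_total <= CAPACITY}")
```

On averages, the pair needs about 3.5 of 8 vCPUs. Aligned slot by slot, both workloads spike in the third slot and together need 11 vCPUs, which is the container version of everyone showing up for the flight.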

Critical to this is taking an analytics approach to “stacking” containers. The key to safely maximizing utilization and leveraging overcommit is “dovetailing” containers based on their detailed intra-day workload patterns. Visualize a game of Tetris, with each tile representing the workload pattern of the application in one container. Stacking the tiles so that every space is filled ensures optimal use of the instance.

When stacking containers, you might pair an application that is busy in the morning with one that is busy at night, or pair a CPU-intensive application with a memory-intensive one. This is no easy task: safely dovetailing apps requires analytics that use historical workload patterns to predict future requirements. If you can get it right, the payoff is significant. Densify is an analytics service that does just that; in one Densify case study, moving 983 workloads from individual AWS instances to a container stacking strategy yielded savings of 82%.
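Densify’s own algorithm isn’t described here, so the following is only a minimal sketch of the dovetailing idea, not its implementation. It swaps in a simple first-fit-decreasing heuristic (a standard bin-packing approach) over invented per-slot CPU and memory profiles; the workload names, the 8 vCPU / 32 GiB instance size, and the four time slots are all hypothetical. A workload joins an instance only if combined demand stays under both caps in every time slot.

```python
from dataclasses import dataclass, field

# Hypothetical instance size; real instance types differ.
CPU_CAP = 8.0    # vCPUs
MEM_CAP = 32.0   # GiB


@dataclass
class Workload:
    name: str
    cpu: list[float]  # vCPU demand per time slot
    mem: list[float]  # GiB demand per time slot


@dataclass
class Instance:
    cpu: list[float]
    mem: list[float]
    members: list[str] = field(default_factory=list)

    def fits(self, w: Workload) -> bool:
        """True if adding w stays within both caps in every time slot."""
        return all(
            c + wc <= CPU_CAP and m + wm <= MEM_CAP
            for c, wc, m, wm in zip(self.cpu, w.cpu, self.mem, w.mem)
        )

    def add(self, w: Workload) -> None:
        self.cpu = [c + wc for c, wc in zip(self.cpu, w.cpu)]
        self.mem = [m + wm for m, wm in zip(self.mem, w.mem)]
        self.members.append(w.name)


def peak_share(w: Workload) -> float:
    """Largest fraction of either resource the workload ever needs."""
    return max(max(w.cpu) / CPU_CAP, max(w.mem) / MEM_CAP)


def dovetail(workloads: list[Workload]) -> list[Instance]:
    """First-fit decreasing: place the 'biggest' workloads first, each into
    the first instance whose slot-by-slot headroom can absorb it."""
    slots = len(workloads[0].cpu)
    instances: list[Instance] = []
    for w in sorted(workloads, key=peak_share, reverse=True):
        target = next((i for i in instances if i.fits(w)), None)
        if target is None:
            target = Instance([0.0] * slots, [0.0] * slots)
            if not target.fits(w):
                raise ValueError(f"{w.name} exceeds a single instance")
            instances.append(target)
        target.add(w)
    return instances


# Four slots: morning, midday, evening, night (hypothetical profiles).
workloads = [
    Workload("morning-api", cpu=[6, 2, 1, 1], mem=[8, 8, 8, 8]),
    Workload("night-batch", cpu=[1, 1, 2, 6], mem=[8, 8, 8, 8]),
    Workload("cache",       cpu=[1, 1, 1, 1], mem=[20, 20, 20, 20]),
    Workload("evening-web", cpu=[1, 2, 6, 1], mem=[4, 4, 4, 4]),
]

for n, inst in enumerate(dovetail(workloads), start=1):
    print(f"instance {n}: {inst.members}")
```

With these made-up profiles, four workloads that would otherwise need four instances fit on two: the morning and night workloads share one instance, and the CPU-heavy evening web tier shares another with the memory-heavy cache. A greedy heuristic like this is not guaranteed to find the best packing, and a real tool would also forecast future demand and leave safety headroom rather than pack to the exact cap.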

It’s important to note that container stacking may not be appropriate for every application. Open source container technology is still maturing and doesn’t offer the robust management, security and resiliency that mission-critical applications demand. However, for non-critical apps, container stacking can be a great option. The key is to be aware of the gaps in today’s container management ecosystem and to make thoughtful decisions about which workloads belong in containers and which do not.