Hey, good morning, good afternoon. It’s your favorite Densify webinar presenters, Andy and Dave, once again. We’re happy to be with you guys. We were here a few weeks ago talking about EKS Auto Mode and how Densify complements and works with that technology. Today’s webinar is about Google’s GKE Autopilot, which is a similar kind of technology, but obviously focused on Google Cloud.

We are here today to talk to you about what it does and what it does not do, and how Densify, once again, will complement that technology if you are using it. Let’s get going, Dave.

First slide: what is GKE Autopilot? It is in many ways similar to EKS Auto Mode. It’s a mode of operation for GKE. Google manages a whole bunch of stuff and takes away a lot of manual work that you would typically do yourself, including things like configuring nodes, scaling parameters, security, and a whole bunch of other pre-configured settings.

So it basically makes your life easier if you’re working with GKE.

Google manages the infrastructure so you can focus on building and deploying your applications. What we mean by that is that you don’t have any direct control over the actual nodes that you’re running your Kubernetes applications on. Google will take care of that and manage it. We’ll get into a little more detail on exactly what they do.

The clusters themselves have a hardened configuration by default, so the security settings have already been set up. Again, one more thing that you don’t have to worry about manually. And finally, from a pricing perspective, it’s based on the pods and how you configure those. It’s not based on the nodes, so it simplifies your billing forecasts and attribution to different business units.

So essentially it makes things simpler that way too. But again, that doesn’t mean that you’re going to save a ton of money if you do things incorrectly, so we’ll get into that as well. Once again, I’ve done the high-level stuff. You get to go and dig a little bit deeper.

Fantastic. So what I’m gonna do now is go a little bit deeper, talk a bit more about those topics and what they mean, and then there are a couple of specific topics that we’re gonna drill down into in even more detail.

So again, from a compute management perspective, Andy mentioned that you don’t have to manage your nodes. It really is a serverless Kubernetes option. You don’t manage nodes, you don’t manage hardware, you don’t manage workload placement, bin packing, node groups, or anything like that. This is in particular for general-purpose compute requirements.

So there are a lot of things that you no longer have to worry about. That’s all handled automatically by GKE Autopilot. Again, Andy mentioned security, patching, and currency. So by default, when you deploy a cluster, you’re going to have a hardened cluster that’s already pre-configured for you.

That’s not something that you have to invest a ton of time and effort into. The default settings are already secure and hardened. And with things like patching, you’re not going to have to be rolling out OS patches to nodes. You’re not going to have to worry about patching Kubernetes.

All that stuff is part of the managed service for you. Then there’s ease of configuration. In our earlier webcast, we talked about EKS Auto Mode and the fact that setup of a cluster is almost like a wizard-based activity. GKE Autopilot takes that to another level.

It’s even simpler. You can provision a cluster in GKE Autopilot in just a few clicks, just a few minutes. There’s a lot of power behind the scenes where you can customize things if you need to. But just keep in mind, if you just wanna stand up a cluster that is already hardened, already configured, and already has most of the prerequisite services deployed, you can do that very, very quickly and easily.

Andy mentioned simplified billing, the fact that you pay only for the resources that you use: CPU, memory, and storage. That’s something that’s very important. We’re gonna drill into that a bit more in a later slide, as well as what serverless means. And just another thing to keep in mind is that system pods, the ones that run your Kubernetes cluster, are things that you’re not billed for. You’re only billed for your actual workloads. The same goes for unscheduled pods: if something is stuck in a pending state, you’re not going to be paying for workloads that aren’t actually deployed and running.

Hey Dave, you’ve used both these technologies now, EKS Auto Mode and GKE Autopilot. What are your thoughts? Is it really that simple to actually use these things? Is it, you know, the preferred way to go?

Yeah. What I would say applies to both of them, but since we’re talking about GKE Autopilot today: GKE Autopilot is really simple to set up.

It takes so much of the management burden off of the customer, and the good news is it deploys in a default configuration that, again, is very secure and already has a lot of the supporting services pre-configured for you. So if you don’t wanna be spending your time managing Kubernetes clusters, it’s absolutely fantastic.

Now some customers are going to have specific requirements where they want to be in control of all those dials and knobs. In those cases, you still have GKE Standard mode. That might be a better choice for those customers who want complete control over their environment, but if you wanna be able to spend your time focusing on workloads and not on managing GKE clusters, Autopilot is the way to go.

Cool. The last thing on this slide that we’re gonna talk about is resource management. If you deploy workloads to GKE Autopilot and, let’s say, you don’t specify any requests or limits on your workloads, just be aware that resource management can be done automatically for you. So you’re going to have some requests that are automatically assigned to your pods when you deploy those.

And also, if you have specialized compute capacity needs, for example you need to deploy workloads on GPU-based nodes, that’s all easily managed just using your pod manifest. We’ll talk a little bit more about that, but just keep in mind it’s very, very simple. So let’s drill down a little bit more into this topic of serverless nodes.

What does it actually mean? What does it mean to you as someone who’s responsible for your environment? So at a high level, you’re no longer managing nodes. You’re no longer managing node groups. Autoscaling is not something you have to worry about. You just worry about provisioning your workloads.

Everything else happens behind the curtain. What that means is, if you wanna request specialized compute capacity, and I’ll use that example again of GPU-based nodes, it’s super simple. You just specify either a node selector or a node affinity in your workload pods. You don’t have to worry about managing things like taints and tolerations and node groups or anything like that.
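To make the node selector idea concrete, here is a minimal sketch in Python that builds a pod manifest as a plain dictionary and prints it as JSON. It is an illustration only: the helper name `gpu_pod_manifest`, the pod name, the image, and the accelerator value are made up for the example, and the `cloud.google.com/gke-accelerator` label key and the `nvidia.com/gpu` resource name are assumptions that should be checked against the current GKE documentation before use.

```python
import json


def gpu_pod_manifest(name: str, image: str, accelerator: str, gpus: int = 1) -> dict:
    """Build a Pod manifest that asks for GPU capacity through a node selector.

    Hypothetical helper for illustration. The label key and GPU resource name
    below are assumptions; verify them against the GKE documentation.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # The only "node management" the workload owner does: state which
            # capacity type the pod needs and let the platform provision it.
            "nodeSelector": {"cloud.google.com/gke-accelerator": accelerator},
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


# Placeholder names and image, not taken from the webinar.
manifest = gpu_pod_manifest("gpu-demo", "example.com/my-image:latest", "nvidia-tesla-t4")
print(json.dumps(manifest, indent=2))
```

The point of the sketch is that nothing in it refers to node groups, taints, or tolerations; the capacity request lives entirely in the pod spec.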

You just make some very minor modifications to your workload and GKE Autopilot will automatically provision the capacity type you need. Something to be aware of: because you are not managing the actual nodes themselves and you don’t have a ton of visibility into them, there may be some DaemonSets that aren’t supported.

Okay, so just be aware of that. It’s something that you’ll wanna confirm before you start any migrations. I do know that a lot of the most common DaemonSets are supported. So, for example, things like Datadog and log collection, those are DaemonSets a lot of customers use today. Most of those will work.

But just be aware that there are some that aren’t going to function, so you’re gonna want to validate those up front. And then just another thing to keep in mind: if in your workloads today you’re doing a lot of management of node affinity, be very careful. Again, you don’t have a ton of control over what nodes your workloads get allocated to, other than making sure that it’s the right capacity type.

So you’ve got that flexibility to steer certain workloads to GPUs. But if your workloads have a lot of node affinity that you’re managing behind the scenes, you might have to tweak that before you move them over to GKE Autopilot.

Now, per-pod pricing. That’s another thing we wanna go into in a little bit more detail, because it’s also something that is going to have a direct impact on your workloads, on your cloud bill, and on how you deploy. So again, you only pay for the resources your workloads request, and that word “request” is chosen very carefully.

You are literally only billed for the pod requests. You’re not billed for utilization and you’re not billed for limits in GKE Autopilot. Okay, now what does that mean? You might think, well, great. So why wouldn’t I just request the smallest amount of resources needed for any workload, save a ton of money, and get my compute capacity for almost free?

Well, there are some things that prevent that from happening. Number one, there are minimum amounts of CPU and memory that you can allocate to a workload. So when you deploy, you do have to meet those absolute minimums. I think for CPU it varies depending on how you’re deploying, but in most cases it’s gonna be a minimum of about 250 millicores for CPU, and for memory, I believe it’s about 512 megabytes as a minimum.

You also have to keep your workloads within an allowed CPU-to-memory ratio. Okay? So there’s a window that you have to fall within. You can go from a one-to-one ratio of CPU to memory up to, I believe, an upper limit of one to 6.5. But just keep in mind, you do have to respect those ratios.
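As a rough illustration of those constraints, the sketch below checks a proposed request against the approximate numbers mentioned above. Everything in it is an assumption for illustration: the function name `check_requests` is made up, the minimums are the "about 250 millicores" and "about 512 megabytes" figures from the talk, and the ratio is interpreted as GiB of memory per vCPU. The actual limits depend on the compute class and should be taken from the GKE documentation.

```python
# Approximate figures quoted in the talk; treat them as placeholders,
# not as authoritative GKE Autopilot limits.
MIN_CPU_MILLICORES = 250
MIN_MEMORY_MIB = 512
MIN_GIB_PER_VCPU = 1.0   # assumed interpretation of the "one to one" ratio
MAX_GIB_PER_VCPU = 6.5   # assumed interpretation of the "one to 6.5" ratio


def check_requests(cpu_millicores: int, memory_mib: int) -> list:
    """Return a list of human-readable problems with a pod's requests."""
    problems = []
    if cpu_millicores < MIN_CPU_MILLICORES:
        problems.append(f"CPU request {cpu_millicores}m is below the {MIN_CPU_MILLICORES}m minimum")
    if memory_mib < MIN_MEMORY_MIB:
        problems.append(f"memory request {memory_mib}Mi is below the {MIN_MEMORY_MIB}Mi minimum")
    if cpu_millicores > 0:
        # Memory per vCPU, in GiB, must fall inside the allowed window.
        gib_per_vcpu = (memory_mib / 1024) / (cpu_millicores / 1000)
        if gib_per_vcpu < MIN_GIB_PER_VCPU:
            problems.append(f"{gib_per_vcpu:.2f} GiB per vCPU is below the {MIN_GIB_PER_VCPU} floor")
        elif gib_per_vcpu > MAX_GIB_PER_VCPU:
            problems.append(f"{gib_per_vcpu:.2f} GiB per vCPU is above the {MAX_GIB_PER_VCPU} ceiling")
    return problems


for cpu, mem in [(500, 1024), (100, 256), (250, 4096)]:
    print(cpu, mem, check_requests(cpu, mem) or "ok")
```

A check like this only says whether a request is allowed. It says nothing about whether the request matches what the workload actually uses, which is the sizing problem discussed next.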

Another thing to keep in mind, and this is very important: if you are under-requesting resources in your workloads in GKE Autopilot, you can still experience issues. If you under-request CPU, your workload might end up being throttled, so you can have performance issues there. Or if you’re under-requesting memory, you could potentially have a situation where you get an out-of-memory kill, or your pod needs to be terminated and rescheduled elsewhere.

So keep in mind that there are some caveats. It’s still very important to make sure that you’ve sized your pods accurately and that you’re setting accurate requests and, where appropriate, limits for your workloads in order to reduce risk.

You also wanna make sure you’re not over-provisioning capacity, ’cause that ends up resulting in costs. And again, just a reminder of some good things about per-pod pricing. I mean, per-pod pricing is fantastic. It gives you a lot of predictability about your costs. And keep in mind that you don’t have to pay for things like control plane pods, so anything that’s running in kube-system.

You don’t pay for that, none of that overhead. And again, any pod that’s stuck in pending isn’t something that’s billed. So if they’re not running, it’s not something that you have to pay for.

Presumably, Dave, if you’ve got something unspecified, they’ll assign you a setting for that and you’ll pay for whatever it is they assign to you, right?

Exactly. It is going to auto-assign something for you because, you know, if you haven’t specified anything, it’s not gonna give it to you for free. But really the question, and the reason you wanna avoid unspecified resources, is: do you really want your cloud service provider making those decisions for you about what resources you’re requesting?

It could potentially introduce risk, and it could potentially introduce costs. That’s something that you’d much rather be in control of and have an understanding of, so that you’re not just deploying some templates or some common values that are seen out there, but you’re actually deploying something that reflects your actual utilization.

Those are some of the things that are unique and interesting about GKE Autopilot, but keep in mind there are some things that it doesn’t do. Okay. And this is kind of to what you asked about, Andy. So firstly, it doesn’t entirely eliminate the need to manage nodes. It is a significant improvement, but keep in mind you probably will still have some workloads that require specific capacity types, potentially specific node types.

In that kind of situation, you revert back to paying for the actual nodes. The nodes will be allocated to you, and you are still responsible for paying for them. So you’re not doing per-pod pricing if you’re specifying specific node types.

And because you’re paying for the whole node, you wanna make sure that you’re fully utilizing those node resources. So it’s very good, as I mentioned, for general-purpose workloads, where you don’t have to worry about that, but just keep in mind it doesn’t eliminate it entirely. It also absolutely doesn’t size or resize your Kubernetes workloads.

To reduce risk and waste, you wanna make sure that you’re setting appropriate requests and appropriate limits, both so that you’re not having, again, throttled workloads for CPU or out-of-memory kills for memory, and so that you’re not requesting more resources than you’re actually utilizing, which results in waste and a ballooning cloud bill.

So you have to continue doing that in GKE Autopilot. And then lastly, and this goes back to that first bullet point, there are still some situations where you need to manage nodes. In that kind of situation, GKE Autopilot is not going to be giving you recommendations about what the optimal node types or optimal node families are.

Okay? So it helps you a lot, but it doesn’t entirely eliminate those problems. Now let’s have a look at the differences between the GKE Autopilot architecture and a traditional Kubernetes architecture. So first, let’s look at traditional Kubernetes. You obviously have to make sure that you’ve got your underlying foundational services ready, configured, and working to operate any Kubernetes cluster.

So we’re talking here about networking, storage, and load balancing. All of those things need to be enabled and working in order to run any Kubernetes cluster. You also have to manage your nodes. Now again, this is traditional Kubernetes architecture, where you provision nodes and you provision node groups.

That’s gonna be part of any Kubernetes architecture. And then on top of that, that’s where you run your applications and your workloads. Okay? And in a traditional Kubernetes architecture, all of this is managed by you. You’re responsible for configuring and deploying every portion of it, which gives you a ton of control.

So again, for a lot of customers, this is the architecture that they appreciate. What changes in GKE Autopilot is not these building blocks. Okay? Those stay the same. However, the delineation between what you manage and what GKE manages is what’s different. So in GKE Autopilot, you are not managing the nodes.

All of the foundational services on the bottom, the ones required to operate your cluster, are configured and deployed by default. So those aren’t things that you have to worry about. They’re already there. And as part of, again, like I said, a wizard-based cluster deployment strategy, that’s all built in.

What you focus on is just your applications and your workloads. So that’s really the major difference in how the two are architected. Even though the building blocks remain the same, it’s really important to understand the difference in who is responsible for what. And again, GKE Autopilot offloads so much of that management to GKE itself and allows you to focus on the applications.

So at this point, Andy, I think you were gonna talk a little bit about resource optimization.

Yeah. So I can blast through this slide pretty quick. I’m keeping an eye on the time; I wanna make sure that you get to your Densify Kubex stuff, Dave. But anyhow, when we think about resource optimization and whether or not you get that handled by GKE Autopilot, the problem is it’s not there.

So obviously your app teams and your site reliability engineering teams care about having enough capacity, and FinOps is making sure that it’s not too many resources; we have to keep budgets in line. And platform owners care about both things. So they all have different dials that they can control and twist.

At the FinOps level, or at least at the node level, is where the cost is traditionally incurred. Now with GKE Autopilot, as we’ve been talking about, per-pod pricing is the model that they use, but it is based on requests, not utilization. If you are specifying a whole bunch of extra requests for resources that you are not using, you are going to overpay in this model or any model.
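To see why billing on requests rewards right-sizing, here is a small worked sketch. The unit prices, the 730-hour month, and the workload sizes are all hypothetical placeholders chosen for the arithmetic, not actual Google Cloud pricing, and the function name `monthly_cost` is made up for this example.

```python
HOURS_PER_MONTH = 730          # rough average month, assumed for the example
PRICE_PER_VCPU_HOUR = 0.05     # hypothetical price, NOT real GKE pricing
PRICE_PER_GIB_HOUR = 0.005     # hypothetical price, NOT real GKE pricing


def monthly_cost(cpu_millicores: int, memory_mib: int, replicas: int = 1) -> float:
    """Estimate monthly cost from pod *requests* (utilization plays no part)."""
    vcpus = cpu_millicores / 1000
    gib = memory_mib / 1024
    hourly = vcpus * PRICE_PER_VCPU_HOUR + gib * PRICE_PER_GIB_HOUR
    return hourly * HOURS_PER_MONTH * replicas


# A hypothetical workload that requests twice what it needs, across 10 replicas.
current = monthly_cost(2000, 4096, replicas=10)
right_sized = monthly_cost(1000, 2048, replicas=10)

print(f"current:     {current:.2f}")
print(f"right-sized: {right_sized:.2f}")
print(f"saved:       {current - right_sized:.2f}")
```

Because the estimate is linear in the requests, halving both requests halves the bill in this model, regardless of how busy the pods actually are.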

So it is still something to be cognizant of. The container resource level is where you’re defining how much CPU, memory, and of course storage is required. And there is still this highly intertwined model between FinOps, the app teams, and the platform owners, making sure we have the Goldilocks model, just the right amount of resources. You can’t separate these things out, and Autopilot doesn’t solve this problem.

You still need that full-stack approach and that consideration for the type of node infrastructure you’re running on and what’s most appropriate. And the key component is setting those things at the resource level for the containers: requests and limits, mostly requests here, obviously, with Autopilot.

And back over to you, Dave. Let’s talk about how we actually do that.

Staying on the topic of optimization, obviously Densify and Kubex are all about optimizing workloads in Kubernetes, so I wanted to talk a little bit about how Kubex can complement GKE Autopilot and how they are better together as a solution.

This touches on what I mentioned earlier in one of the previous slides, the fact that GKE Autopilot does not solve the problem of allocating appropriate resources, of right-sizing your workloads within Kubernetes. So again, returning to this architecture diagram, this is what a GKE Autopilot configuration looks like when you add Kubex to the mix.

There are a couple of areas where we add significant value. The first one is optimal workload sizing: making sure that you’re sizing your workloads appropriately based on historic utilization and that you’re setting appropriate requests and appropriate limits, so that you’re mitigating risk, with no CPU throttling and no out-of-memory issues, but also so that you’re not allocating resources way beyond what you are actually using.

That then turns into cost. Making sure those workloads are fully optimized and right-sized will in most cases shrink the size of your workloads, and that in turn shrinks the size of your cloud bill. So that’s very important. And again, we mentioned that in most cases you’re not gonna be managing nodes within GKE Autopilot, but it still is something that happens from time to time.

So be aware that Kubex also adds a ton of value for those workloads where you are managing nodes. We can help you understand what the most appropriate node types and node families are, in order to make sure that you are spending appropriately on those. So again, by doing this, you’re not only able to reduce the number of nodes in your cluster.

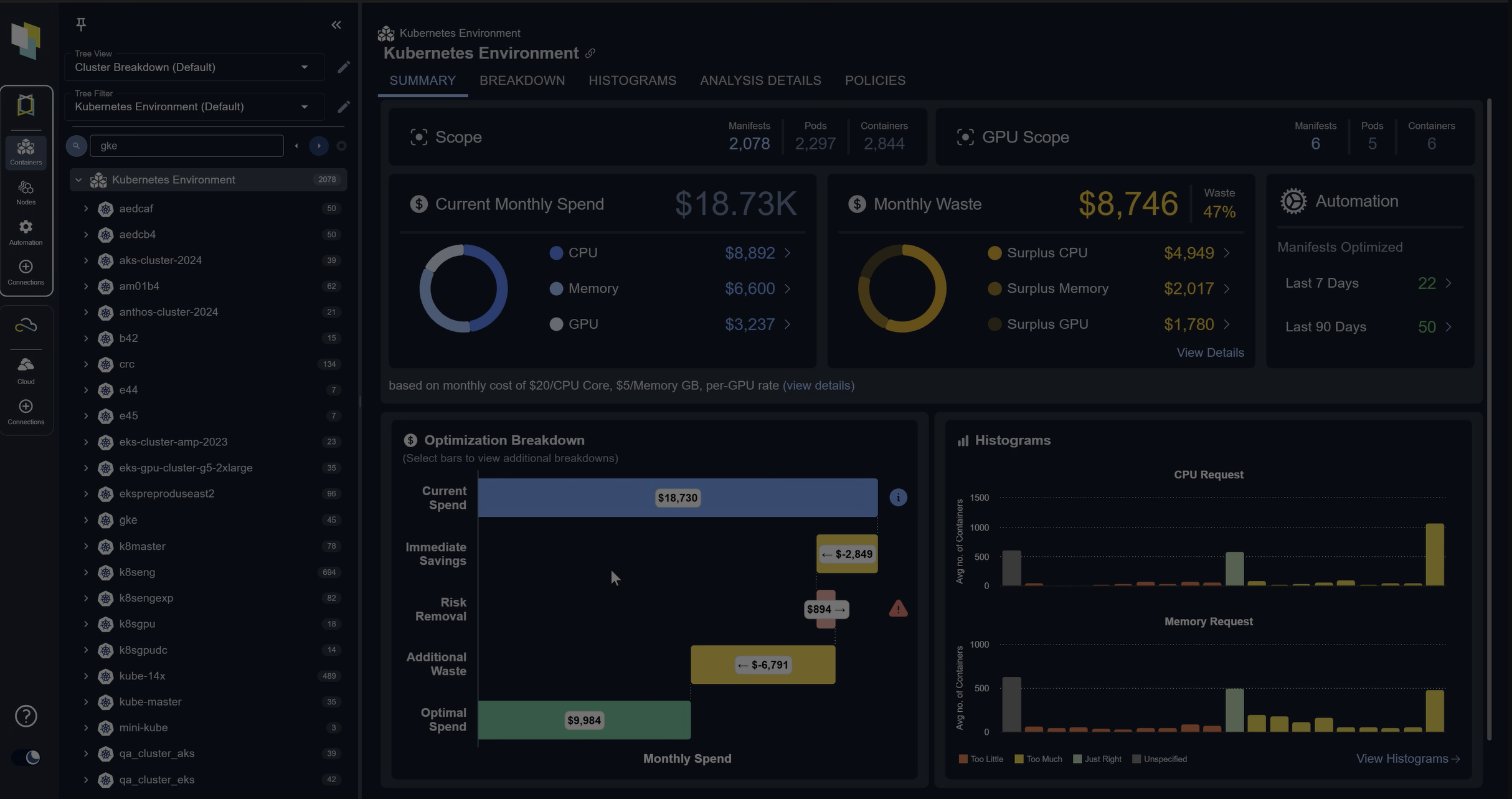

You’re also able to potentially optimize and make sure you’re using smaller and more cost-effective node sizes. So at this point I wanted to switch over. What we do is we analyze the historical utilization of your workloads in Kubernetes.

We collect data from Prometheus, and then we provide this in a dashboard for you. So you can see here that we’ve got an understanding of what you’re currently spending from a Kubernetes perspective, and we help surface what the optimal spend is. So what are the opportunities available to you if you were to fully optimize your environment?

You have the ability to look at your environment either at a macro level, all up, or you can drill down into individual clusters, individual namespaces, or even down to individual pods. So, looking at things at a high level, one thing I wanted to show you is this screen here. It’s called the histogram screen.

I talked about risk versus cost quite a bit. I’m not going to go into an exhaustive explanation of these histograms. We’ve had a lot of podcasts where we’ve gone into great detail, so I encourage you to have a look at those. But for now, just keep in mind that for all of your resource types we’re able to analyze which ones are just right, which ones are yellow, which means they are over-provisioned and therefore you have a potential waste situation, and which ones are at risk, and those are in the red.

And again, we help you identify where there are opportunities. So for example, if we click here, we’re gonna be taken to see where there’s the most egregious waste within our environment.
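The three buckets described above can be illustrated with a very small sketch. This is not how the product’s analytics work; it is a simplified illustration with made-up thresholds (`waste_below`, `risk_above`), a made-up `ContainerUsage` type, and made-up sample data, comparing peak CPU usage against the CPU request.

```python
from dataclasses import dataclass


@dataclass
class ContainerUsage:
    """Hypothetical record: a container's CPU request and observed peak usage."""
    name: str
    request_millicores: int
    peak_used_millicores: int


def classify(c: ContainerUsage, waste_below: float = 0.5, risk_above: float = 0.9) -> str:
    """Bucket a container by how much of its request it actually uses.

    The thresholds are illustrative assumptions, not product defaults.
    """
    if c.request_millicores <= 0:
        raise ValueError("request must be positive")
    ratio = c.peak_used_millicores / c.request_millicores
    if ratio >= risk_above:
        return "at risk"            # usage is pressing against the request
    if ratio < waste_below:
        return "over-provisioned"   # most of the request is never used
    return "just right"


samples = [
    ContainerUsage("api", 4000, 1500),
    ContainerUsage("worker", 1000, 950),
    ContainerUsage("cache", 1000, 700),
]
for s in samples:
    print(s.name, classify(s))
```

A real analysis would look at utilization over time and at memory as well as CPU; the sketch only shows the shape of the risk-versus-waste comparison.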

We see here that we have a pod where we are allocating 4,000 millicores, so four CPUs, but we’re actually using less than half of that, and it’s 30 containers. So we provide recommendations that allow you to right-size those workloads automatically, apply those changes within your cluster, and then achieve those savings.

And what’s fantastic, and what makes Kubex such a great fit with GKE Autopilot, is this: in a traditional Kubernetes cluster, if you were to optimize this, you’d then have less capacity required in your node groups, but you’re not actually gonna realize instant savings until you scale down the number of nodes. So you may have to implement several recommendations before you actually achieve tangible savings.

That works great and it’s fantastic, but in GKE Autopilot you’re actually paying for the requests. So the second you cut the requests on a workload like this in half, that is instantly reflected in your cloud bill. It’s an instant feedback loop. So that’s just a bit of an explanation for why Kubex and GKE Autopilot are perfect together. Returning back to the slides, Andy, I wanted to hand it back over to you.

Thanks, Dave. Kubex by Densify, what Dave was just showing, is full-stack, AI-driven resource optimization for your Kubernetes environment, wherever that may live, whether it’s in AWS, Azure, or Google Cloud, or on-prem, OpenShift, what have you. We look at cutting the risk and cutting the waste in these environments.

And that’s what our analytics are designed to do. If you’d like to try it out for yourself, there are a couple of ways you can do it. You can go to our website, just click on the trial button, and you have two options. You can go to our sandbox, where you can play with the interface in our demo environment.

No credit card, no registration. We just need an email address from you, from your business, and you’re in, ready to go. If you’d like to connect it to your own environment, you can do that as well. Basically, you set it up, and it goes very quickly. Use our all-in-one Helm chart and it’s up and running, typically within the hour.

And you can run that for 60 days at no cost. If you’ve got any kind of questions around what we do, obviously we’re on LinkedIn, X (Twitter), and YouTube as well. We’ve got our latest news, events, and resources up there.

So thank you very much for coming to our webinar today, and we’ll look forward to the next one in the coming weeks. Dave, thanks for all your work today. Appreciate all the research into GKE Autopilot.

My pleasure. Thanks, folks. Have a great day. Cheers. Bye-bye.