Best Practices: Cloud Optimization Savings

Chapter 2.2 - Introduction: Guide to FinOps

- Chapter 1.1: How to Get Started with AWS Billing Tools

- Chapter 1.2: Best Practices for AWS Organizations

- Chapter 1.3: AWS Tagging: Best Practices for Cost Allocation

- Chapter 1.4: AWS Organizations: All You Need to Know

- Chapter 2.1: Primer: Cloud Resource Management

- Chapter 2.2: Best Practices: Cloud Optimization Savings

- Chapter 2.3: Cloud Computing Cost Savings: Best Practices

- Chapter 2.4: AWS Compute Optimizer

- Chapter 3.1: AWS Cost Management: Top 7 Challenges

- Chapter 3.2: AWS Cost Saving Steps

- Chapter 3.3: AWS Savings Plans

- Chapter 3.4: Spot Instances: A Complete Guide

- Chapter 4.1: The 4 Fundamental Concepts for Enforcing Cloud Cost Management

- Chapter 4.2: Top 10 FinOps Best Practices

- Chapter 4.3: Drilldown: AWS Budgets vs Cost Explorer

- Chapter 4.4: AWS Cost Categories

The Resource Optimization Challenge

Cloud engineers should avoid relying on manual calculations, or on tooling based on simplistic analysis, when optimizing resources. The process is too complex and error-prone, and mistakes can jeopardize application performance. Simplistic analysis also misses opportunities for savings. We cover this complexity in depth in other articles in this guide, so here we cover only the top three reasons:

| Cloud Resource Optimization Challenge | Description |
|---|---|
| Data is seasonal and transient | Workloads change every second, and DevOps teams terminate virtual machines and containers just as often. |
| Configuration options are vast | As an example, AWS offers over 1 million configuration options for its EC2 instances alone. |
| Providers announce new types weekly | Cloud providers change their pricing often, including whenever they introduce new instance types. |

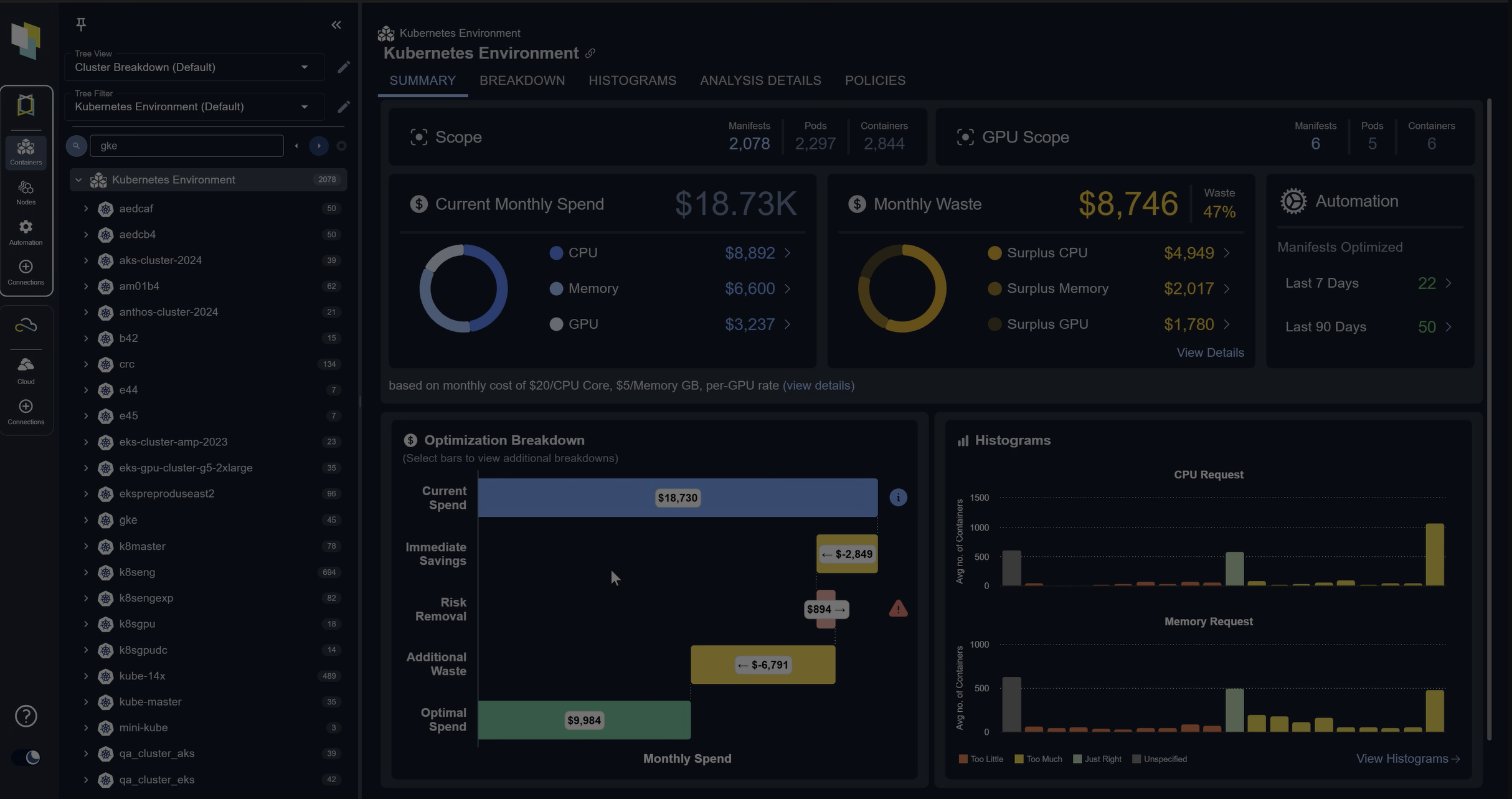

The Limitations of Cloud-Native Tooling

Although cloud providers offer new and helpful native tooling, these solutions have their own limitations. We cover some native resource optimization tools (such as AWS Compute Optimizer) elsewhere in this guide, so here we present the top three limitations:

| Native Cloud Optimization Tooling Limitation | Description |
|---|---|
| The workload observation window is short | By default, the window is a 14-day sliding window, even though seasonality analysis requires monthly, quarterly, and annual examination. |
| No control over recommendations | Recommendation controls should let users adjust risk tolerance based on workload type and user-defined constraints. |
| No consistency across platforms | The same policies should govern optimization across cloud providers and hybrid environments that include private clouds. |

This article explains these limitations in more depth and introduces other functionality that you must consider to safely and accurately optimize your cloud resources.

The Cost of Resource Optimization Mistakes

The most significant risk of a resource optimization mistake is an application outage. Suppose you downsize a virtual machine to fewer CPUs to save a few dollars, but you don't consider that the smaller machine lacks sufficient disk read and write throughput. Database reads and writes slow down, a backlog of database transactions grows, and the database ultimately crashes during peak usage hours. Trading an outage for a few dollars of savings on a critical server is a reminder to FinOps practitioners that cost savings require a thoughtful approach.
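The scenario above is a single-dimension check gone wrong. The minimal sketch below checks a downsizing candidate against peak demand on every resource dimension, not just CPU. The `Capacity` fields, the 20% headroom default, and all numbers are hypothetical illustrations, not figures from any cloud provider.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Capacity:
    """Peak demand of a workload, or capacity of a VM type (hypothetical units)."""
    vcpus: float
    memory_gib: float
    disk_mbps: float      # sustained disk throughput, MB/s
    network_gbps: float

DIMENSIONS = ("vcpus", "memory_gib", "disk_mbps", "network_gbps")

def bottlenecks(peak: Capacity, candidate: Capacity, headroom: float = 0.2) -> list[str]:
    """List every dimension where the candidate cannot cover peak demand plus headroom."""
    return [
        dim for dim in DIMENSIONS
        if getattr(candidate, dim) < getattr(peak, dim) * (1 + headroom)
    ]

# Hypothetical database VM: modest CPU peak, heavy disk I/O.
peak_demand = Capacity(vcpus=1.5, memory_gib=6, disk_mbps=400, network_gbps=1)
smaller_vm = Capacity(vcpus=2, memory_gib=8, disk_mbps=250, network_gbps=5)

# A CPU-only check approves the downsize...
cpu_only_ok = smaller_vm.vcpus >= peak_demand.vcpus * 1.2
print("CPU-only check passes:", cpu_only_ok)
# ...while checking all dimensions exposes the disk bottleneck.
print("Full check finds:", bottlenecks(peak_demand, smaller_vm))
```

Here the CPU-only check passes while the full check reports `disk_mbps`, which is exactly the throughput shortfall that leads to the database backlog.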

The second most significant risk is a performance slowdown. In this scenario, the resource bottleneck doesn't cause an outright outage. Instead, it causes a slowdown that may take engineers months to pinpoint, leaving end users frustrated for a long time.

The third risk is overspending on resources and missing your budget and operating-margin targets. This risk is top-of-mind for FinOps practitioners and least important to cloud engineers. That's precisely where the functionality described below comes in: it balances the two opposing requirements with sophistication and accuracy.

| Misconfiguration Consequence | Description |
|---|---|
| Application outage | A recommendation to use a smaller virtual machine based on CPU usage alone, without analyzing memory, disk, and network, may result in a severe bottleneck and an outage. |
| Application slowdown | A reduction in computing resources may not cause an outage but may slow application performance in a way that is difficult for the operations team to isolate. |
| Missed savings | Compared to the first two, a missed opportunity for savings ranks last in priority. FinOps practitioners must use advanced algorithms to avoid mistakes. |

Important Cloud Optimization Functionality

Select Long Observation and Analysis Windows

When you plan for capacity, you must consider all of the workload's peaks and valleys, not just the increased activity that typically occurs around mid-morning and mid-afternoon. For retailers, workloads increase around holidays and shopping events such as Mother's Day or Black Friday. For some business applications, the increase happens at the end of each month or quarter, when financial managers close the books, publish financial statements, and process payments. Whatever causes the peaks and valleys in your business applications, and whether or not you scale resources up and down as needed, the resource optimization process must account for these ebbs and flows before recommending the correct size for the cloud resources hosting your applications.
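To illustrate why the observation window matters, here is a minimal sketch using a synthetic, hypothetical workload: business hours run at 50% CPU, off-hours at 30%, and the last two days of each 30-day "month" spike to 85% to mimic a month-end close. Measured over the most recent 14 days, the peak looks modest; measured over 12 weeks, the month-end spike appears.

```python
def hourly_cpu(days: int) -> list[float]:
    """Synthetic hourly CPU utilization (%) for a HYPOTHETICAL workload."""
    samples = []
    for day in range(days):
        month_end = day % 30 in (28, 29)   # last two days of each 30-day "month"
        for hour in range(24):
            if month_end:
                samples.append(85.0)       # month-end financial close
            elif 9 <= hour < 17:
                samples.append(50.0)       # business hours
            else:
                samples.append(30.0)       # off-hours
    return samples

def peak(samples: list[float], window_days: int) -> float:
    """Peak utilization over the most recent `window_days` of hourly samples."""
    return max(samples[-window_days * 24:])

history = hourly_cpu(84)   # 12 weeks of history; days 70-83 contain no month-end
print(peak(history, 14))   # 50.0: the short window never saw the month-end spike
print(peak(history, 84))   # 85.0: the long window captures it
```

A sizing decision based on the 14-day peak would leave no room for the month-end load.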

Get Recommendations across Families

Rightsizing should not be confused with resource optimization. Finding the right size for a workload is essential, but it is only one step of the capacity planning process. Selecting the right family of cloud instances (such as general-purpose, accelerated-computing, or storage-optimized) matters before you consider size. Within each family there are multiple types, and each type comes in various sizes. For example, the storage-optimized family includes the I and D types; within those are types such as I3 or D2 (the number indicates the generation); and finally you reach a specific size, such as d2.4xlarge. Ensure that your chosen tooling looks across all instance families and types to select the correct hardware platform first, before it recommends a size.
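The sketch below contrasts a same-family search with a search across families. It parses names like `d2.4xlarge` into family, generation, attribute suffix, and size; the regular expression covers common names such as `m5a.large` or `i3en.xlarge` but not every AWS naming variant. The vCPU and memory figures follow AWS's published specs for these types, but the hourly prices are hypothetical placeholders, not real quotes.

```python
import re
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: float
    hourly_usd: float  # HYPOTHETICAL placeholder price

CATALOG = [
    InstanceType("m5.large", 2, 8, 0.10),    # general purpose
    InstanceType("m5.xlarge", 4, 16, 0.20),
    InstanceType("c5.large", 2, 4, 0.09),    # compute optimized
    InstanceType("c5.xlarge", 4, 8, 0.17),
    InstanceType("r5.large", 2, 16, 0.13),   # memory optimized
    InstanceType("r5.xlarge", 4, 32, 0.25),
]

# family letter(s), generation digit(s), optional attribute suffix, then size
NAME = re.compile(r"^(?P<family>[a-z]+)(?P<generation>\d+)(?P<attrs>[a-z-]*)\.(?P<size>.+)$")

def parse(name: str) -> dict:
    """Split a name like 'd2.4xlarge' into family, generation, attributes, and size."""
    match = NAME.match(name)
    if match is None:
        raise ValueError(f"unrecognized instance type name: {name}")
    return match.groupdict()

def cheapest_fit(vcpus: float, memory_gib: float,
                 families: Optional[set] = None) -> Optional[InstanceType]:
    """Cheapest type covering the demand, optionally restricted to some families."""
    fits = [
        t for t in CATALOG
        if t.vcpus >= vcpus and t.memory_gib >= memory_gib
        and (families is None or parse(t.name)["family"] in families)
    ]
    return min(fits, key=lambda t: t.hourly_usd, default=None)

# A workload needing 2 vCPUs and 12 GiB that currently runs in the m5 family:
print(cheapest_fit(2, 12, families={"m"}).name)  # same-family search only
print(cheapest_fit(2, 12).name)                  # search across all families
```

Restricted to the general-purpose family, the only fit is `m5.xlarge` with twice the vCPUs needed; searching across families finds `r5.large`, a memory-optimized type that matches the workload's shape.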

Ensure Consistency across Your Public and Private Clouds

As mentioned earlier in this article, policies help enforce selection constraints consistently within a resource optimization recommendation engine across your entire environment. A typical enterprise computing infrastructure includes more than one cloud provider (AWS, Azure, or Google Cloud) and servers in more than one data center. For some aspects of infrastructure management, best-of-breed tooling works best, either by necessity or by choice, but for resource optimization you are much better off using a single tool across all of your computing estates. A single cross-platform tool lets you apply the same risk tolerance policies everywhere, avoiding omissions and disparities. More importantly, all of your workloads would use the same observation window, and your finance team would receive a single report across your entire IT infrastructure. The tool's end users would also appreciate not having to learn different user interfaces or reconcile the peculiarities of multiple recommendation algorithms. As with other tasks in IT administration, choose a single pane of glass when you can.

Avoid Black-Box Analysis

Automation is good; lack of transparency is bad. Even though the complexity of resource optimization requires algorithmic automation, you don't have to accept a recommendation engine that withholds how it reaches its conclusions. You may not follow the underlying math, but the tool's provider should document the approach it takes to formulate each recommendation. This knowledge also helps you use the functionality described next, such as policies that influence recommendation outcomes.

Use Policies to Influence Recommendations

Generic resource optimization recommendations only go so far. Operational capacity planning requires engineers to feed their specific requirements into the recommendation engine. For example, an IT organization may have standardized on a few virtual machine types so that it can reserve capacity in advance in a specific availability zone. Another example is the need for a specific processor (Intel, AMD, or Arm) or hypervisor (AWS Nitro) to support dependent application software. Abundant but still-unused ephemeral storage (instance storage that comes with a virtual machine and is lost when the instance stops or terminates) may be another reason to influence a recommendation. However, the most crucial consideration is adjusting risk tolerance, expressed as the free headroom in CPU, memory, or I/O that mission-critical applications require for safety. Whatever the reason, you must control the output of a recommendation engine, and the best way to do so consistently across your environment is with policies that make the process repeatable and scalable. Ideally, the optimization tool lets administrators stack multiple policies and apply them together, keeping the configuration modular and easy to understand.
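Policy stacking as described above can be sketched with small composable predicates. In this minimal illustration, each policy is a function that accepts or rejects a candidate type for a workload, and a candidate survives only if every stacked policy accepts it. The policy names, the 30% headroom figure, and the workload numbers are hypothetical; real optimization tools define their own policy models.

```python
from dataclasses import dataclass
from typing import Callable, Iterable

@dataclass(frozen=True)
class Candidate:
    name: str
    arch: str          # "x86_64" or "arm64"
    vcpus: int
    memory_gib: float

@dataclass(frozen=True)
class Workload:
    peak_vcpus: float
    peak_memory_gib: float

# A policy answers one question: is this candidate acceptable for this workload?
Policy = Callable[[Workload, Candidate], bool]

def require_arch(arch: str) -> Policy:
    """Dependent software needs a specific processor architecture."""
    return lambda w, c: c.arch == arch

def standard_types(allowed: set) -> Policy:
    """Only types the organization has standardized on (e.g. to reserve capacity)."""
    return lambda w, c: c.name in allowed

def headroom(fraction: float) -> Policy:
    """Risk tolerance: require free capacity above peak CPU and memory usage."""
    return lambda w, c: (c.vcpus >= w.peak_vcpus * (1 + fraction)
                         and c.memory_gib >= w.peak_memory_gib * (1 + fraction))

def apply_policies(w: Workload, candidates: Iterable[Candidate], policies: list) -> list:
    """Names of the candidates that every stacked policy accepts."""
    return [c.name for c in candidates if all(p(w, c) for p in policies)]

candidates = [
    Candidate("m5.large", "x86_64", 2, 8),
    Candidate("m6g.large", "arm64", 2, 8),
    Candidate("m5.xlarge", "x86_64", 4, 16),
    Candidate("r5.large", "x86_64", 2, 16),
]
workload = Workload(peak_vcpus=1.5, peak_memory_gib=7)

mission_critical = [require_arch("x86_64"), headroom(0.30)]
print(apply_policies(workload, candidates, mission_critical))
print(apply_policies(workload, candidates,
                     mission_critical + [standard_types({"m5.large", "m5.xlarge"})]))
```

Because each policy is independent, adding the standardization policy narrows the result from `m5.xlarge` and `r5.large` to `m5.xlarge` without touching the other rules.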

Account for Reservations When Choosing a Resource Type

IT organizations with a sizable cloud infrastructure typically use a mix of Savings Plans and reservations to pay less. Because administrators pay up front or commit to spending one to three years in advance (in exchange for discounts of up to 72%), their workloads often change substantially while those purchasing obligations are still in place. As a result, whenever a recommendation engine proposes a new virtual machine type and size for a changing workload, it must consider the existing reservation portfolio to avoid buying a new type while committed capacity for another type goes to waste. For example, a workload may require much less disk throughput because the application architecture changed over time, and the recommendation engine may suggest a new, much less expensive virtual machine type. However, the original reserved commitment would then go unused while you also pay for the new type. This double payment increases spending instead of reducing it.
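The double-payment effect is simple arithmetic. This minimal sketch assumes a reservation tied to one instance type that is billed every hour regardless of use, and uses hypothetical hourly rates; commitments that apply across instance types behave differently.

```python
HOURS_PER_MONTH = 730  # common monthly approximation (8,760 hours / 12)

def monthly_spend(reserved_hourly: float, new_type_hourly: float = 0.0) -> float:
    """Monthly spend for one workload that holds a type-specific reservation.

    The reservation is billed for every hour whether or not anything runs on it,
    so moving the workload to a different type adds that type's cost on top.
    """
    return (reserved_hourly + new_type_hourly) * HOURS_PER_MONTH

# HYPOTHETICAL rates: $0.12/h committed reservation, $0.05/h for a smaller new type.
stay = monthly_spend(0.12)
move = monthly_spend(0.12, 0.05)
print(f"stay on reserved type: ${stay:.2f}")
print(f"move to cheaper type:  ${move:.2f}")
```

Even though the new type costs less than half as much per hour, moving before the reservation expires raises monthly spend from about $87.60 to about $124.10 under these assumptions.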

Rightsize Means Downsize but Also Upsize

FinOps practitioners focus on safe ways to save money. However, resource optimization is not always about spending less; sometimes it means spending more. As a rough rule of thumb, you will find about one upsize opportunity for every ten downsize opportunities in a production environment. Still, acting on upsize opportunities can matter far more than all the savings you will ever find, because an upsize opportunity is simply another name for a performance bottleneck. Performance bottlenecks are hard to isolate during troubleshooting and are best identified in a capacity analysis exercise such as rightsizing. There are nuances, however: grid computing nodes, for example, typically run at full throttle by design and should not automatically be upsized. So as you search for savings, raise a flag with your infrastructure engineering teams whenever you find a resource that genuinely needs upsizing.
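A rightsizing pass that flags both directions, while exempting workloads designed to run flat out, can be sketched as below. The 40% and 80% thresholds and the fleet data are hypothetical; the point is that "busy" and "needs upsizing" are not the same thing for a grid node.

```python
def classify(p95: float, low: float = 0.40, high: float = 0.80,
             saturation_expected: bool = False) -> str:
    """Map a resource's p95 utilization (0.0 to 1.0) to a rightsizing action.

    Thresholds are HYPOTHETICAL. Workloads designed to run at full throttle,
    such as grid computing nodes, are not flagged for upsizing just for being busy.
    """
    if p95 > high and not saturation_expected:
        return "upsize"       # likely a performance bottleneck
    if p95 < low:
        return "downsize"     # likely a savings opportunity
    return "keep"

fleet = {"web-1": 0.22, "db-1": 0.93, "grid-node-7": 0.99, "api-2": 0.61}
grid_nodes = {"grid-node-7"}
for name, p95 in fleet.items():
    print(name, classify(p95, saturation_expected=name in grid_nodes))
```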

Don’t Stop At Virtual Machines When You Optimize Resources

Most enterprise cloud spending goes to virtual machines (EC2 instances in AWS), so FinOps practitioners spend more time discussing them. However, a resource optimization exercise should extend to relational database systems (such as AWS RDS or Azure SQL), storage volumes (both capacity and input/output operations per second, or IOPS, in a service such as AWS EBS), and containers. In the future, the share of spending will increase for services such as NoSQL databases, event stream processing, auto-scaling, and serverless computing. Even though cloud providers manage those services directly, you still have to request the resources provisioned for a database, container, or function. For example, an over-provisioned, short-lived AWS Lambda function wastes the same percentage of its memory as an over-provisioned virtual machine. Still, functions tend to receive attention only once thousands or millions of them are running, as application architectures complete that transition. In fact, because AWS Lambda allocates CPU in proportion to the configured memory, finding the optimal allocation is mathematically more complicated, not less, and requires advanced algorithms.
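The memory/CPU coupling can be sketched with a toy model. Lambda bills compute in GB-seconds (configured memory times duration), and AWS documents that a function has the equivalent of one vCPU at 1,769 MB. The performance model here is a hypothetical single-threaded, CPU-bound function measured at 2,000 ms with 1,024 MB; it speeds up in proportion to its CPU share until it gets one full vCPU, then levels off. Per-request fees and millisecond rounding are ignored.

```python
ONE_VCPU_MB = 1769     # AWS documents roughly one vCPU of capacity at 1,769 MB
BASELINE_MB = 1024     # HYPOTHETICAL measurement point
BASELINE_MS = 2000.0   # HYPOTHETICAL duration measured at 1,024 MB

def duration_ms(memory_mb: int) -> float:
    """Hypothetical single-threaded, CPU-bound function: runtime falls in
    proportion to its CPU share until it gets one full vCPU, then levels off
    because one thread cannot use the extra capacity."""
    return BASELINE_MS * BASELINE_MB / min(memory_mb, ONE_VCPU_MB)

def gb_seconds(memory_mb: int) -> float:
    """Billed compute per invocation in GB-seconds, rounded to hide float noise."""
    return round(memory_mb / 1024 * duration_ms(memory_mb) / 1000, 6)

options = [512, 1024, 1769, 3008]
for mb in options:
    print(f"{mb:>5} MB: {duration_ms(mb):7.1f} ms, {gb_seconds(mb):.3f} GB-s")

# Cheapest option first, then the fastest among equally cheap options.
best = min(options, key=lambda mb: (gb_seconds(mb), duration_ms(mb)))
print("best:", best)
```

In this model, cost stays flat from 512 MB to 1,769 MB while duration drops, and then cost rises with no speedup, so the best setting is neither the smallest nor the largest. A real function's curve has to be measured, which is why a simple "shrink memory" rule can be wrong.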

Optimize Your Containers and Your Kubernetes Environment

If you look at how your infrastructure architecture has evolved over the last few months, you'll likely notice an increase in the number of containers. The most popular platform for managing containers is Kubernetes, whether hosted by a public cloud provider (as with AWS EKS) or deployed directly in your own data center. In its June 2020 survey of 1,324 respondents, the Cloud Native Computing Foundation (CNCF) found that 83% of respondents use Kubernetes, up from 58% in 2018. Given this breakneck adoption pace, FinOps practitioners can no longer leave Kubernetes out of their resource optimization initiatives.
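In Kubernetes, the equivalent of choosing a VM size is setting each container's resource requests. The minimal sketch below derives a CPU request from observed usage: a nearest-rank 95th percentile plus 15% headroom, expressed in Kubernetes millicore notation such as `518m`. The sample values and the percentile-plus-headroom heuristic are hypothetical illustrations, not how any particular tool computes requests.

```python
def percentile_nearest_rank(samples: list[int], pct: int) -> int:
    """Nearest-rank percentile, using integer math to avoid float rounding."""
    ordered = sorted(samples)
    rank = -(-pct * len(ordered) // 100)   # ceil(pct * n / 100)
    return ordered[max(rank, 1) - 1]

def cpu_request(samples_millicores: list[int], pct: int = 95, headroom_pct: int = 15) -> str:
    """Recommended container CPU request in Kubernetes millicore notation."""
    base = percentile_nearest_rank(samples_millicores, pct)
    return f"{-(-base * (100 + headroom_pct) // 100)}m"   # ceil(base * (1 + headroom))

# HYPOTHETICAL CPU usage samples for one container, in millicores.
usage = [120] * 10 + [200] * 8 + [450, 900]

request = cpu_request(usage)
print({"resources": {"requests": {"cpu": request}}})
```

The same approach applies to memory requests, though memory limits deserve extra caution because a container that exceeds its memory limit is terminated rather than throttled.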

Pay Attention to Integrations

Integrations drive automation, which in turn increases efficiency. As you plan a resource optimization exercise, think through both how you will collect the required data and how you will apply recommendations to the infrastructure. Integrate your resource optimization engine with your existing monitoring tools to collect the data needed for capacity usage analysis; this data is typically available in tools such as AWS CloudWatch or Datadog. The other integration required for operational efficiency is with workflow automation tools such as ServiceNow or infrastructure-as-code tools such as Terraform.
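One lightweight way to hand approved recommendations to Terraform is to write a variables file that Terraform loads automatically; Terraform picks up files whose names end in `.auto.tfvars.json`. This sketch assumes, hypothetically, that the Terraform configuration declares `variable "instance_types" { type = map(string) }` keyed by workload name, and that recommendations have already passed a review step. The file name and variable name are illustrative choices.

```python
import json
import tempfile
from pathlib import Path

def write_tfvars(recommendations: dict, directory: Path) -> Path:
    """Write approved recommendations where Terraform will load them automatically.

    The variable name `instance_types` is HYPOTHETICAL: it assumes the
    configuration declares a map(string) variable of that name.
    """
    path = directory / "rightsizing.auto.tfvars.json"
    path.write_text(json.dumps({"instance_types": recommendations},
                               indent=2, sort_keys=True) + "\n")
    return path

# HYPOTHETICAL output of a recommendation review step.
approved = {"orders-db": "r5.large", "web-frontend": "m5.large"}
with tempfile.TemporaryDirectory() as tmp:
    out = write_tfvars(approved, Path(tmp))
    print(out.read_text())
```

Writing recommendations into version-controlled variables, rather than changing instances directly, keeps each change reviewable through the same plan-and-apply workflow as any other infrastructure change.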

Conclusion

Resource optimization is a delicate balance between cost savings and service performance, and no other area of FinOps exposes the complexity of that balance as much as a resource optimization initiative. The days of using a spreadsheet to track resource usage and plan capacity are long gone because of the risks that approach poses to application stability. Modern cloud resource optimization requires a long list of functionality that is available only in specialized cross-platform tools that use algorithms to automate the analysis.

FAQs

What is cloud optimization?

Cloud optimization is the practice of analyzing and adjusting resources across cloud and hybrid environments to balance cost savings with performance. It relies on automation, algorithms, and policies to avoid outages, slowdowns, or overspending.

Why are native tools insufficient for cloud optimization?

Native tools like AWS Compute Optimizer often have short data windows, limited transparency, and no cross-platform consistency. Enterprises need advanced tools that handle long-term trends, risk tolerance policies, and multi-cloud optimization.

What are the risks of poor cloud optimization?

Misconfigurations can cause outages, degraded performance, or missed savings. For example, downsizing a VM without analyzing disk throughput could crash a database, while overprovisioning wastes budget.

How do policies improve cloud optimization?

Policies let teams build business-specific requirements, such as processor type, reservation use, or risk tolerance, into optimization engines. This ensures recommendations align with both technical needs and financial goals.

Does cloud optimization apply only to VMs?

No. Cloud optimization extends to databases, storage, containers, Kubernetes environments, and serverless computing like AWS Lambda. As architectures evolve, optimizing these layers is essential for efficiency and cost control.
