Densify is rewriting how GPUs are allocated, shared and scaled across every layer of AI infrastructure

The Problem: AI workloads are dynamic, unpredictable, and expensive. Data prep can choke your pipeline, training jobs hog GPUs without awareness, and inference, the most latency-sensitive phase, is notoriously hard to scale efficiently. Worse, traditional infrastructure tools treat GPU as a static commodity, ignoring model intent, workload shape, and sharing capabilities.

The Solution: Densify is flipping the script. It understands what your AI jobs are trying to do, how much they actually need, and when and where to run them, all while optimizing for cost, performance, and business priorities. From data ingestion to model serving, Densify will make real-time decisions using time slicing, MIG, and MPS to match the right workload to the right GPU strategy.

AI is no longer an experimental edge case. It’s core to production. Whether you’re fine-tuning a foundation model, running real-time inference in a customer-facing app, or prepping massive tabular data pipelines, one thing is constant: your infrastructure choices can make or break your AI outcomes.

The challenge? AI workloads are GPU-hungry, bursty, heterogeneous, and fundamentally hard to orchestrate well.

That’s where Densify is focusing

Densify doesn’t intend to be just another GPU dashboard or cost tool. It’s a workload-aware optimization engine that works before, during, and after your AI jobs run. It watches your jobs, understands your models, and recommends exactly what to change in real time.

And NO, we’re not Kubernetes-bound. Whether you’re running GPUs on AWS, Azure, GCP, or on-prem IaaS, Densify slots into your stack and gets to work.

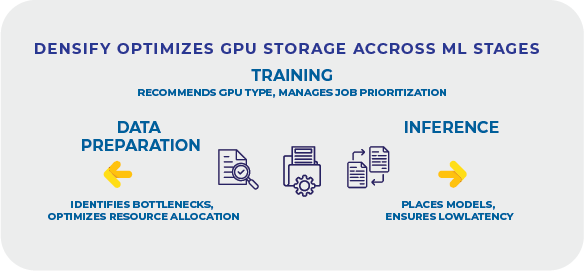

From Data Prep to Inference: One Optimization Platform to Rule Them All

1. Data Prep: The Unsung Hero

Before you hit “train,” there’s a ton of ETL, transformation, and validation. Densify doesn’t ignore this step, it optimizes it.

Densify:

- Finds hotspots in data prep clusters where CPU/GPU resources are being bottlenecked.

- Optimizes the vertical and horizontal scaling of data prep components to ensure they have the resources they need and scale up (and down) as efficiently as possible

- Helps keep non-GPU workloads off the expensive GPU nodes if they aren’t required, freeing valuable resources for the training and inference workloads

Result: Faster data availability, smoother pipelines, and fewer false alarms blaming the GPU for slow model runs.

2. Training: Heavyweight, Meet Smartweight

Training is expensive. Period. But most teams use “safe defaults” like full A100s or static node groups. Densify changes the game:

- Understands model size, batch size, and parallelism profile

- Recommends MIG slicing when your training job fits into 2g.20gb, not the whole 7g.40gb

- Flags when MPS can be used to run multiple training jobs concurrently

- Right-sizes GPU request limits to eliminate padding waste

These aren’t generic recommendations. They’re based on your job history, telemetry, and scheduling patterns. Think of it like a personal trainer for your training pipeline—but for your infrastructure.

3. Inference: Where Real-Time Optimization Really Counts

This is where Densify truly separates itself. Inference is high-stakes, high-volume, and high-variance. Static placement doesn’t cut it.

Densify recognizes the need for:

- GPU affinity rules that avoid scheduling LLM inference on oversubscribed nodes

- Smart recommendations to use MIG or MPS based on real-time telemetry

- Dynamically shifts workload placement as demand shifts

Imagine a system that sees traffic spikes and reshapes the placement of workloads before your users notice latency spikes..

Optimization Isn’t Just About Picking the Right GPU

It’s about how you use it.

Time Slicing

Great for bursty, non-latency-sensitive jobs. Densify will recommend time slicing when workloads spike briefly, allowing others to share GPUs fairly.

MIG (Multi-Instance GPU)

Perfect for parallel inferencing. Densify will select the best-fit MIG profile (e.g., 1g.10gb vs 3g.20gb) for your job.

MPS (Multi-Process Service)

Ideal when multiple jobs can share GPU memory and compute paths. Densify flags this automatically and triggers the co-location strategy.

And it doesn’t stop there. Densify uses historical patterns, real-time telemetry, and model metadata to make these decisions dynamically.

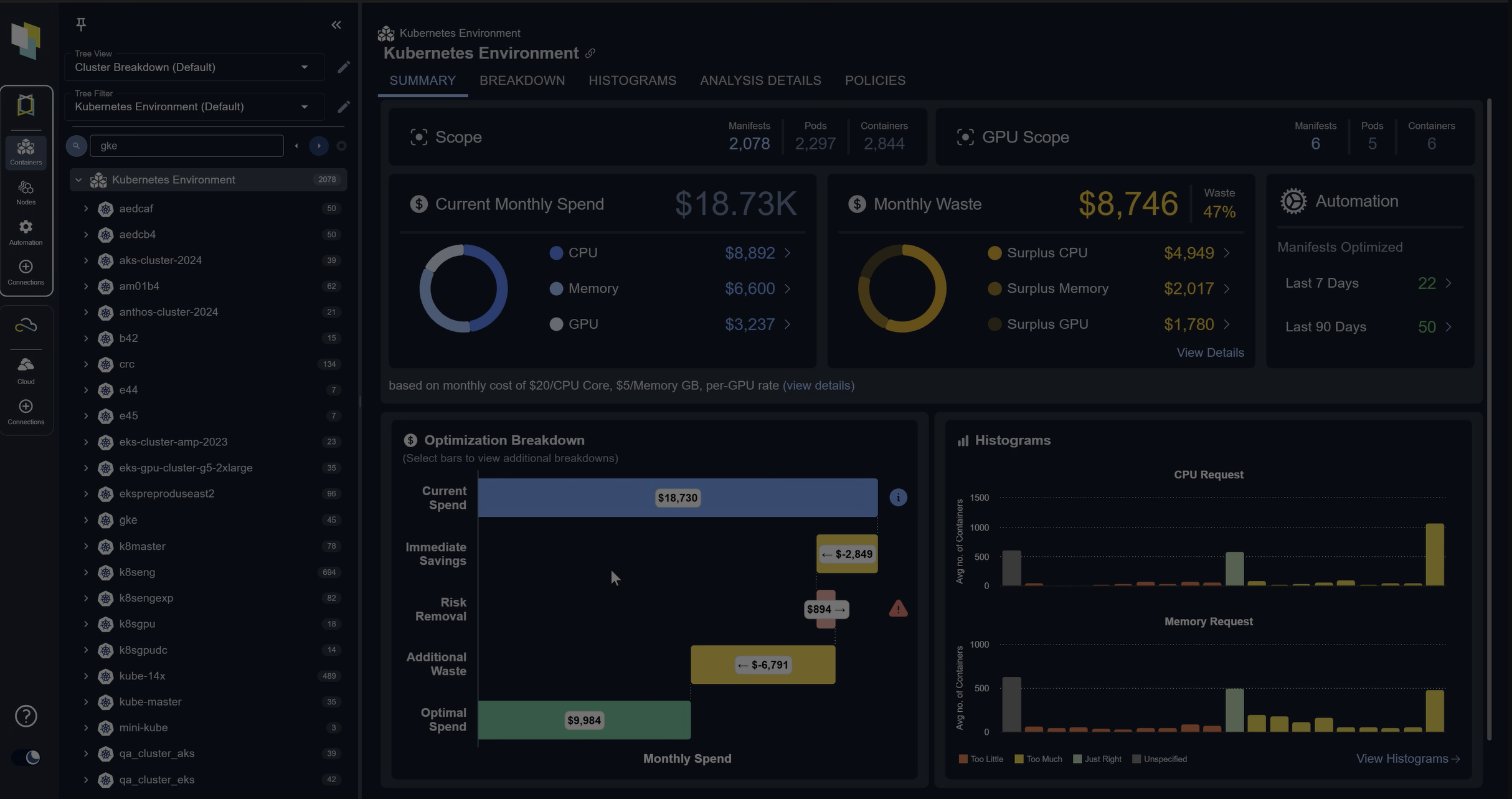

Visibility That Engineers Love, Insights That FinOps Can Use

Densify gives:

- Utilization heatmaps by job type, team, SLA

- Historical cost trends by model, namespace, or environment

- Recommendation trails with estimated ROI

For FinOps and CTOs, this means:

- Cost attribution per team/model

- Forecasting GPU demand

- Budgeting aligned with actual workload intent

Smart Allocation, Smarter Scheduling

Schedulers without context are guesswork. Densify plugs into K8s, Slurm, Ray, GCP Vertex, AWS Batch and more.

- Injects policy logic: “LLM inference goes on A100, test jobs go to T4”

- Optimizes node pool composition to avoid under/over utilization

Whether you’re in a multi-tenant cluster or federated across clouds, Densify makes your schedulers smarter.

Beyond Kubernetes: Any GPU. Any Stack. Any Cloud.

Your AI platform isn’t just Kubernetes. Densify gets that. It works with:

- AWS/GCP/Azure bare metal GPU VMs

- On-prem Nvidia clusters

- Cloud-native PaaS (Vertex, SageMaker)

- Batch workloads and autoscaling groups

It doesn’t care where your jobs run. It cares how efficiently they run.

TL;DR: What You Get with Densify

Stakeholder Value Delivered

Engineers Faster jobs, fewer errors, dynamic tuning, explainable suggestions

FinOps Leaders Real-time GPU cost visibility, savings projections, chargeback

CTOs/CIOs Cross-cloud optimization, capacity planning, SLA enforcement

The Real-World Value

- 35–60% GPU cost reduction

- 2x job throughput via better co-location and sizing

- SLA-compliant inference even during peak demand

- Infrastructure-wide optimization across clouds and schedulers

- Explainable, per-job recommendations engineers can act on

If your AI workloads are serious, your optimization strategy should be too. Let Densify make your GPUs as smart as your models.

Connect with Bijit on Medium