New integration brings real-time Kubernetes and GPU optimization insights into developers’ AI workflows

As excitement builds for KubeCon North America 2025 in Atlanta, Densify has released its latest innovation for Kubernetes and AI-driven infrastructure resource management: the Densify Model Context Protocol (MCP) Server. This new capability enables organizations to securely integrate Densify’s Kubex resource optimization intelligence directly into popular LLM-powered tools — including ChatGPT, Claude, Cursor, and Gemini CLI.

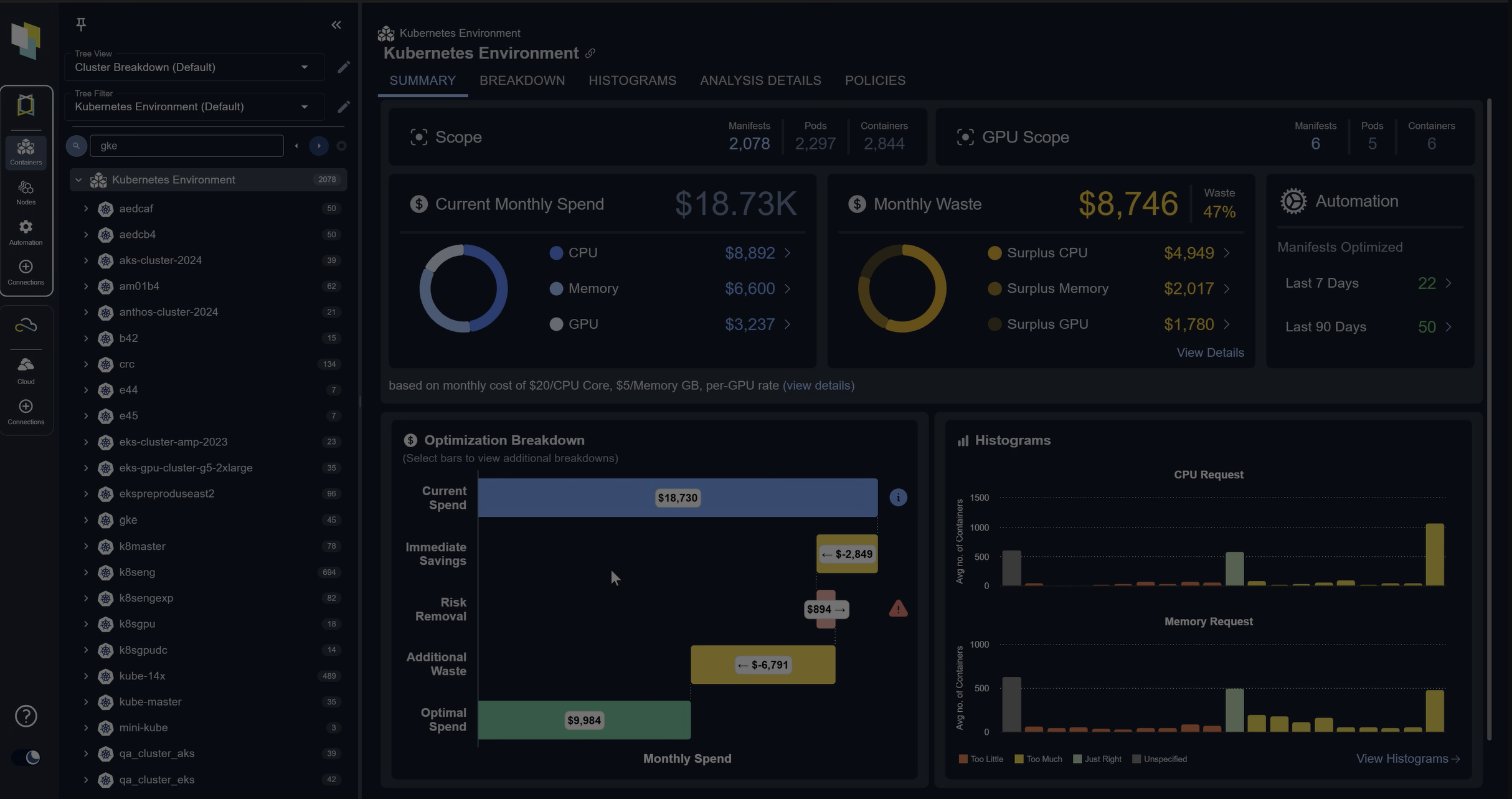

With this release, platform engineers and developers can query their Kubernetes clusters, GPUs, and cloud compute environments in real time, all from within their AI-assisted workflows. The result is faster, data-driven optimization decisions that integrate seamlessly with Densify’s policy-driven automation to reduce CPU throttling, eliminate out-of-memory (OOM) kills, and keep costs under control.

Deep Resource Optimization — Powered by AI

Modern platform teams are under immense pressure to balance performance, availability, and cost. Densify’s MCP Server helps them strike that balance by embedding real-time optimization insights directly into the AI tools developers already use.

By connecting to Densify’s Kubex engine, the MCP server provides:

- Instant visibility into container, node, GPU, and instance utilization

- Automated risk detection for underprovisioned workloads

- Precision recommendations for right-sizing and cost optimization

And because every data request is authorized by the user, organizations keep complete control over the security of their infrastructure data.

“Platform teams are eager to harness LLMs, but generic assistants don’t understand the complexity of Kubernetes and GPU resources,” said Richard Bilson, Head of AI Engineering at Densify. “Our MCP server gives engineers direct access to Densify’s precision recommendations — so their AI copilots can actually make safe optimization decisions about resources.”

Connecting AI Workflows to Infrastructure Optimization

The Densify MCP Server acts as a secure, intelligent bridge between LLM client applications and Densify’s proven optimization platform. It delivers refined resource metrics, cost analysis, and actionable recommendations — allowing developers to interact naturally with their infrastructure using plain language.

Platform teams can ask questions like:

- “Which Kubernetes nodes are underutilized?”

- “What’s my potential GPU savings if I consolidate workloads?”

- “Show me the top optimization opportunities by cost impact.”
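For readers curious about the mechanics: MCP is built on JSON-RPC 2.0. An LLM client answers questions like the ones above by invoking one of the server's tools with a `tools/call` request. The Python sketch below only illustrates the shape of such a message. The tool name `find_underutilized_nodes` and its arguments (`cluster`, `max_cpu_utilization`) are hypothetical, because the Densify MCP Server's actual tool catalog is not described here. In practice, the LLM client chooses the tool and builds this request itself.

```python
import json
from itertools import count

# Monotonically increasing JSON-RPC request ids.
_ids = count(1)


def mcp_tools_call(tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 'tools/call' request in the shape MCP defines."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# Hypothetical tool and arguments an LLM client might choose for the question
# "Which Kubernetes nodes are underutilized?" (not Densify's real tool names).
message = mcp_tools_call(
    "find_underutilized_nodes",
    {"cluster": "prod-east", "max_cpu_utilization": 0.3},
)
print(message)
```

The server's reply would carry the tool's result back to the client, which the LLM then summarizes in plain language.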

The responses come directly from Densify’s analytics engine — accurate, real-time, and context-aware.

Building Smarter, AI-Connected Platforms

As organizations embrace AI-driven engineering, Densify’s MCP Server represents a crucial step forward — bringing trusted, automated optimization intelligence into the flow of work. Whether you’re running large-scale GPU clusters for model training or optimizing containerized microservices, this integration empowers teams to act on data instantly and intelligently.

Customer Perspectives

“We’ve been testing the Densify MCP server in our Kubernetes environments, and it promises to transform how our team accesses optimization recommendations. Having Kubex’s precision available to anyone simply by asking questions is a game changer and makes its power available to a much broader set of users,” said Richard Sayad, Paramount Skydance Corporation.

Stay tuned as we showcase the Densify MCP Server and Kubex innovations live at KubeCon North America 2025 in Atlanta. Visit us at booth 554.