Psst! Your Cloud Utilization Sucks! Why Automation is Critical to Cloud Optimization

Nothing personal, but your cloud utilization sucks. Of course, so does everyone else’s.

Trying to manually select the perfect EC2 instance type (or its equivalent on clouds other than AWS) is impossible.

There are many different permutations to consider. Application demands change based on customer whims, seasons, business cycles, and other external forces—and such change is incessant.

Simply put, manual cloud planning doesn’t work in today’s modern, cloud-first environments. It leads to massive overprovisioning. And remember, you don’t pay for what you use in the cloud; you pay for what you provision.

There is a better way, of course—leveraging machine learning to optimize instance type selections in real time, automatically.

It’s time for your cloud resource utilization to stop sucking.

Why Your Cloud Utilization Needs Work

Remember the good old days of capacity planning, circa 2002? Figure out the maximum load a server might have and size said server based on the assumption that the peak load could occur at any time. Good old days for the server vendors and colocation facilities, to be sure. They loved selling you excess capacity your actual utilization would never consume. Easy money for them.

Then along came the cloud and changed everything, right? Now you could provision precisely what you needed. No more low server utilization numbers. Your cloud instances fit your traffic patterns like a thousand-dollar suit.

Only it didn’t work out like that. Sure, AWS and the other cloud providers offer Auto Scaling, but which instance type is the right one for your workload?

AWS, in all its wisdom, now offers millions of potential EC2 configurations, and the other public clouds aren’t far behind. Unless the workload in question is completely well-behaved and predictable (yeah, right), you have no choice but to pick an instance type based on an assumption of peak load. You got it. 2002 all over again. Time to get out the DVD of Minority Report.

OK, you might be thinking: picking the right instance type for a particular workload isn’t all that difficult, at least for some of my workloads, right? Perhaps, but what if you have hundreds or even thousands of workloads you want to run in the cloud? Now which instance types are right for each workload? But wait, there’s more. What if all those workloads are changing? All of a sudden, picking the right instance type for each workload is a Sisyphean task.

Imagine yourself going into the largest Costco in the world. You are surrounded by huge aisles of goods and you don’t even know where to look for what you need. You probably don’t even know what exists. And even if you did, you couldn’t possibly match your complex 1,000-row shopping list with the myriad of different products on the shelves.

Similarly, your cloud optimization is gonna suck no matter how you cut it. There’s no way you’ll pick the right instance type for each workload, considering how many variables you would have to take into account. And you’re not alone. It’s not like anyone else has a clue how to do it either. Remember, in spite of all the hype, the cloud isn’t pay for what you use. It’s pay for what you provision.

Overprovisioning or provisioning the wrong things just runs up your bill. There’s got to be a better way.
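To put rough numbers on “pay for what you provision,” here is a back-of-the-envelope sketch. It assumes, purely for illustration, that capacity is sized for peak demand and billed at a flat price p per unit of capacity per hour; c is provisioned capacity, d_peak is peak demand, d̄ is average demand, and u is average utilization. Because the hourly bill is p·c no matter how much of c you actually use, the effective price of each unit of demand you serve is p divided by utilization:

$$
c \ge d_{\text{peak}}, \qquad
u = \frac{\bar{d}}{c} \le \frac{\bar{d}}{d_{\text{peak}}}, \qquad
\text{cost per unit of demand served} = \frac{p\,c}{\bar{d}} = \frac{p}{u}
$$

For example, if average demand is one quarter of peak, then u ≤ 0.25 and every unit of capacity you actually consume costs at least 4p, four times what perfectly matched capacity would cost.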


The Double Whammy: Impacts to Cloud Cost & Performance

In retrospect, it’s obvious that the public clouds overpromised and underdelivered on utilization-based provisioning. In the early days of the cloud it looked like a huge money-saver, but as any enterprise cloud manager can tell you, the spinning wheel that is your cloud bill just keeps spinning ever faster.

Poor utilization due to selecting oversized instances, however, isn’t the worst of it. Picking the wrong instance type can also hurt performance, which of course hurts your customers as well.

You may have wanted an expensive suit, but perhaps that instance type is more like a T-shirt that’s two sizes too small. Not only is it uncomfortable, but it’s unsightly as well. Remember, instance types vary in many ways: size, speed, and other special characteristics. Make the wrong choice, and you end up with all manner of nasty issues: performance slowdowns, out-of-memory errors, I/O bottlenecks, and thrashing, as resources move from VM to VM more than they should.

What do all these problems have in common? Poor customer experience. The last thing you want is your CEO knocking on your door, asking why your customers are pissed. The bottom line: manual utilization planning simply won’t work—and it’ll only get worse as time goes on.

The Hardcoded Infrastructure-as-Code Trap

If manual cloud utilization planning sucks, then the obvious choice is to automate it. So we select our infrastructure as code tool of choice (such as Terraform) and write ourselves a script that selects instance types automatically. Done and done.

Just one problem: how does your script know which instance types to select? If you hardcoded any of the parameters into your recipe, well, shame on you. Hardcoded infrastructure as code always leads to operational risks and high cloud costs.

You know what they say: good code never has any numbers in it other than zero and one. The same goes for all the instance type parameters you might like: they should all be abstracted away. Welcome to next-generation infrastructure as code: an abstracted, declarative representation of infrastructure. Without such an abstraction, infrastructure as code allows only for policy-based choices, which still don’t provide adequate control over dynamic environments.
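The first step away from hardcoding is a modest one: pull the instance type out of the resource and into an input variable. The Terraform sketch below is illustrative only; the variable names and the list of allowed types are hypothetical. Notice that a human still picks the value and a static rule still guards it, which is exactly the kind of policy-based choice that, as the paragraph above argues, can’t keep up with a dynamic environment.

```hcl
# Hypothetical example: the instance type is an input, not a literal in the resource.
variable "web_instance_type" {
  description = "EC2 instance type for the web tier"
  type        = string
  default     = "m4.large"

  # A policy-style guardrail (requires Terraform 0.13+): only allow vetted types.
  validation {
    condition     = contains(["m4.large", "m5.large", "t3.medium"], var.web_instance_type)
    error_message = "The web_instance_type value must be one of the approved instance types."
  }
}

# Hypothetical AMI input so the example is self-contained.
variable "ami_id" {
  type = string
}

resource "aws_instance" "web" {
  ami           = var.ami_id
  instance_type = var.web_instance_type
}
```

Changing the size now means passing a different value (for example, `terraform apply -var="web_instance_type=m5.large"`) rather than editing the resource, but someone still has to know which value to pass.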

But we have to get that abstraction right. What we really need: an ‘abstraction of the abstraction’ based on business intent. This level of abstraction requires machine learning-driven automation of the declarative representation.

At this level humans control the overall business parameters, and the infrastructure self-configures to meet the needs of the business. Machine-learning-based automation is the only way to do this.

To Get It Right, You Need Machine-Learning-Powered, Multidimensional Permutation Analysis

A mouthful, to be sure, but the permutations we must analyze are the usual suspects: CPU, memory, and I/O parameters, as well as the technical and business attributes of each workload. The sheer number of these permutations is what makes the analysis multidimensional, and we need machine learning to automate it because each of those permutations is variable.
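To make the dimensionality concrete, here is a minimal Terraform sketch of only the first, static step: listing the instance types that match one fixed CPU and memory shape. It assumes the AWS provider’s `aws_ec2_instance_types` data source (available in recent provider versions) and filter names taken from the EC2 DescribeInstanceTypes API; the 2 vCPU / 8 GiB shape is an arbitrary example. Check both against your provider version before relying on them.

```hcl
# Sketch: narrow the EC2 catalog along a few dimensions (generation, vCPU, memory).
# Filter names follow the EC2 DescribeInstanceTypes API; values are matched exactly.
data "aws_ec2_instance_types" "candidates" {
  filter {
    name   = "current-generation"
    values = ["true"]
  }

  filter {
    name   = "vcpu-info.default-vcpus"
    values = ["2"]
  }

  filter {
    name   = "memory-info.size-in-mib"
    values = ["8192"] # 8 GiB, an example shape only
  }
}

output "candidate_instance_types" {
  value = data.aws_ec2_instance_types.candidates.instance_types
}
```

Even this tidy filter only covers two static dimensions. It says nothing about I/O, price, workload behavior, or how demand shifts over time, which is the gap the article argues machine learning has to fill.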

Each workload, in fact, has several dimensions of variability. In addition to the permutations listed above, policies and constraints may themselves be variable. And then there’s the variability across environments. For example, in a hybrid environment, workloads may have certain characteristics when running in an on-premises VMware environment but different characteristics once you move them to a cloud.

There is also variability from one cloud to another, as well as the ever-changing demands of fickle customers. And every cloud’s instance types, pricing models, policies, and APIs are different, so our multidimensional permutation analysis—whack-a-mole to us mere mortals—must take all of those differences into account as well.

Assuming, of course, you want to use more than one cloud. Multicloud is an increasingly common scenario, given the price differences, the bursting and backup options, and the simple wisdom of not putting all your eggs in one basket, a principle even your CEO will understand.

Optimization as Code—The Only Way to Achieve Full Automation

With all this talk about machine learning-driven abstractions of abstractions, you didn’t think I’d leave you hanging without a way for you to take my advice and make your cloud utilization suck less, did you?

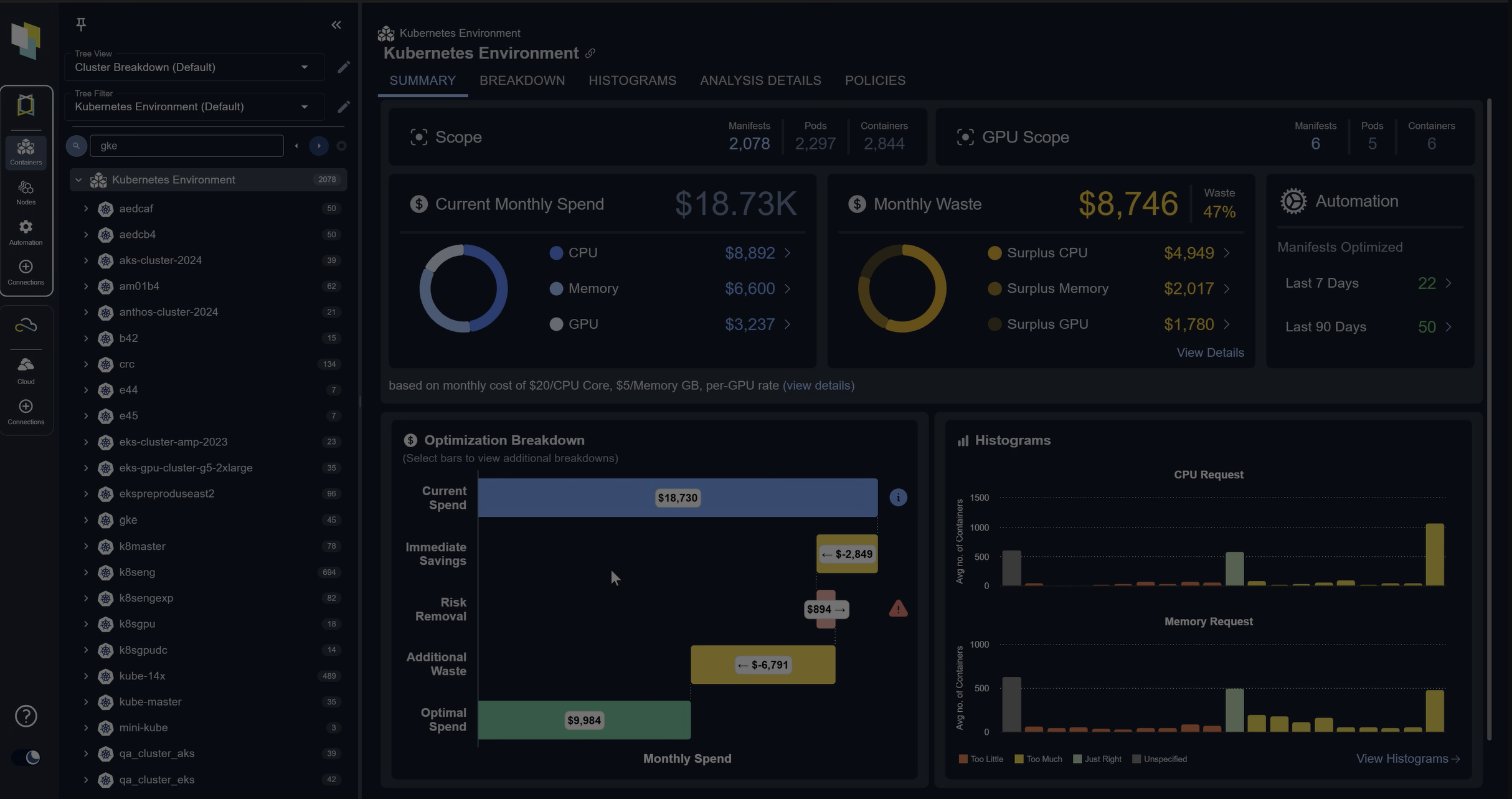

As it happens, Densify—the sponsor of this article, as luck would have it—offers just such a solution they refer to as optimization as code.

Densify enables your workloads to become self-aware of their resource requirements and to dynamically match their needs to optimal cloud supply. Yes, self-aware. Not in the sense that Skynet is self-aware (thankfully), but in the sense that Densify enables your infrastructure-as-code scripts to query Densify for the correct instance type, dynamically and in real time.

With Densify, the information about what each workload needs appears in the form of ‘tags’ in the AWS console. In other words, the application communicates its behavior and requirements via these tags.

Not only that, but said snippet of code is dead simple. See for yourself below.

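```hcl
variable "aws_region" {}

# Map of region -> AMI ID.
variable "aws_amis" { type = map(string) }

provider "aws" {
  region = var.aws_region
}

resource "aws_instance" "web" {
  # instance_type = "m4.large"   # the old, hardcoded way

  # local.densify_spec is assumed to be supplied by the Densify integration (not shown).
  # Use the recommended type (rec_type) when appr_type is "all"; otherwise keep the current type (cur_type).
  instance_type = (
    local.densify_spec["appr_type"] == "all"
    ? local.densify_spec["rec_type"]
    : local.densify_spec["cur_type"]
  )

  ami = var.aws_amis[var.aws_region]

  # aws_instance has no "name" argument; the console shows the Name tag instead.
  tags = {
    Name = "Web Server"
  }
}
```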

Instead of hardcoding an instance type (shame on you if you’re still doing this), drop in a lookup of the ideal instance type. Slick, eh?
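If you want to see how that conditional behaves before wiring anything up, a hand-written stand-in for `local.densify_spec` is enough for `terraform plan` to evaluate it. The keys `appr_type`, `rec_type`, and `cur_type` come from the snippet; the values below are invented for illustration and are not Densify output. In real use the map would come from Densify’s integration rather than being typed by hand.

```hcl
# HYPOTHETICAL placeholder for the Densify-supplied recommendation map.
# Values are illustrative only; in practice Densify populates these.
locals {
  densify_spec = {
    appr_type = "all"      # when "all", the resource uses rec_type
    rec_type  = "m5.large" # recommended instance type (example value)
    cur_type  = "m4.large" # currently running instance type (example value)
  }
}
```

Setting `appr_type` to any other value makes the same resource fall back to `cur_type`, so the recommendation only takes effect when that setting is `"all"`.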

Once you have Densify set up, you can sit back and let the application perfectly match itself to the cloud and take action, without you having to do anything. The results: a better-performing application, matched to the right cloud resources, at the lowest possible price.

There’s quite a bit of secret sauce going on behind the scenes here. Densify is actually establishing predictive demand patterns, creating normalized models of cloud supply, optimizing supply and demand—and if that weren’t enough, Densify even leverages real human experts to tune and manage all the settings.


Densify is blazing the path for how cloud optimization has to work. After all, manual cloud optimization will always suck.

One final word. Think picking the right instance type and size is hard? What about when we start talking about enterprise deployment of containers at scale? Fuhgeddaboudit. This is the only way to go, folks.

© Intellyx LLC. Densify is an Intellyx client. At the time of writing, none of the other organizations mentioned in this article are Intellyx clients. Intellyx retains full editorial control over the content of this article.