Solving the Cloud Placement Puzzle Requires Science – Not Guesswork

The rush to the cloud is well underway. According to a 2016 IDG Enterprise Cloud Computing Survey, IT leaders reported that 45% of their IT environments were hosted in a private, public, or hybrid cloud. Cloud vendors are responding to the demand with a dizzying array of options, which routinely lead to costly over-provisioning and sub-optimal configurations. Assessing options even within a single cloud provider can be a complex business, let alone comparing alternatives across providers.

The key to doing it right is to move beyond the surface-level assessment, rules of thumb, or plain old guesswork that most organizations employ. Scientific analysis of workload requirements, crossed against the various hosting and service offerings from different cloud providers, can yield savings of up to 80% while dramatically reducing application risk.

By “scientific analysis” we are referring to advanced analytics that look at two critical aspects of application workloads:

1. Fit-for-purpose analysis. Is the workload a good candidate for the cloud and, if so, what are its requirements? Answering these questions requires a detailed analysis of each workload’s business and operational requirements and its governance policies. Most applications are subject to some form of technical, data security, privacy, or regulatory policy that dictates where they can be hosted and, in some cases, excludes them as cloud candidates altogether. Policies may also dictate that data remain in a particular jurisdiction. Performing a detailed fit-for-purpose analysis enables informed placement decisions that are compliant with business rules and application requirements.

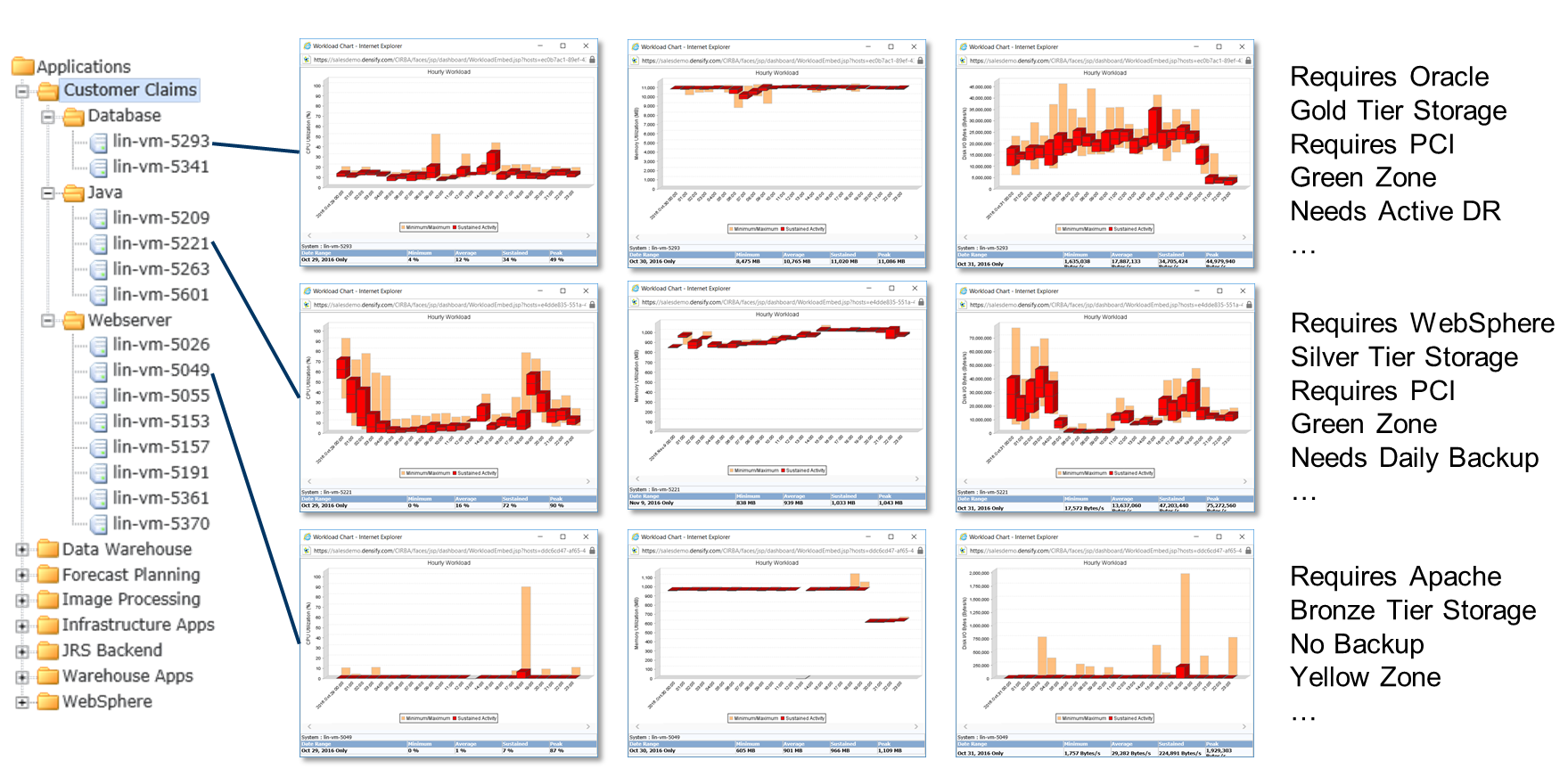

How does this approach look in practice? Consider the example below, where a detailed model of an application’s requirements indicates that its database servers need Oracle, Gold Tier Storage, PCI, Green Zone, and Active DR. Based on these requirements, as well as the requirements of their peer application components, an ideal cloud hosting strategy can be scientifically determined, and opinions or guesswork can be eliminated from the process.

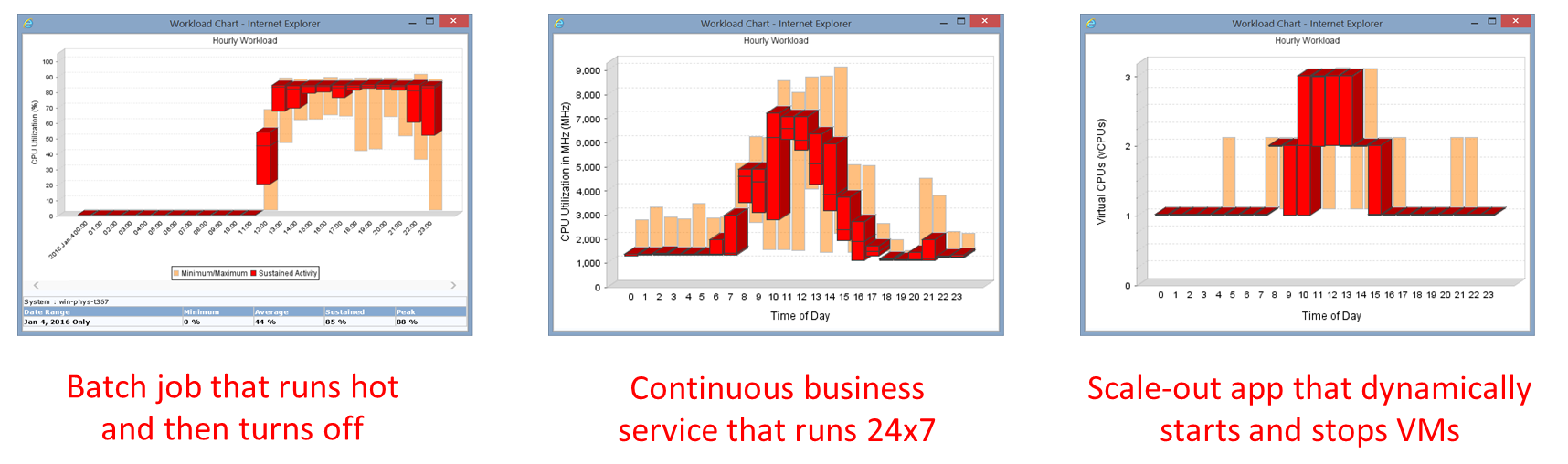
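To make the matching step concrete, here is a minimal sketch in Python of how a requirements model might be crossed against hosting options. It assumes each requirement can be treated as a simple tag that an environment either offers or does not. The `HostingOption` class, the helper functions, and the three environments with their capability lists are hypothetical and invented for illustration; only the database requirements come from the example above.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class HostingOption:
    """A candidate environment and the capabilities it offers (hypothetical model)."""
    name: str
    capabilities: FrozenSet[str]


def eligible_hosts(requirements: Iterable[str], options: Iterable[HostingOption]) -> List[str]:
    """Return the names of options whose capabilities cover every requirement."""
    required = frozenset(requirements)
    return [o.name for o in options if required <= o.capabilities]


def missing_capabilities(requirements: Iterable[str], option: HostingOption) -> List[str]:
    """List the requirements an option cannot satisfy, sorted for stable output."""
    return sorted(frozenset(requirements) - option.capabilities)


# Requirements for the database servers, taken from the example in the text.
DB_REQUIREMENTS = {"Oracle", "Gold Tier Storage", "PCI", "Green Zone", "Active DR"}

# Invented environments; real capability data would come from provider catalogs
# and internal policy definitions.
OPTIONS = [
    HostingOption("public-iaas-a", frozenset({"Oracle", "PCI", "Active DR"})),
    HostingOption(
        "private-cloud-b",
        frozenset({"Oracle", "Gold Tier Storage", "PCI", "Green Zone", "Active DR"}),
    ),
    HostingOption("hosted-vm-c", frozenset({"Gold Tier Storage", "Green Zone"})),
]

if __name__ == "__main__":
    print("Eligible:", eligible_hosts(DB_REQUIREMENTS, OPTIONS))
    for option in OPTIONS:
        print(option.name, "is missing", missing_capabilities(DB_REQUIREMENTS, option))
```

A real analysis would also weigh the requirements of peer application components and use richer policy rules than set membership, but the principle is the same: placement follows from the requirements rather than from opinion.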

2. Workload pattern analysis. Another critical factor in determining the optimal host environment and required resources for an application is the nature of the workload patterns (including CPU, memory, and I/O patterns) on a daily basis and over a business cycle. In the three examples below, the nature of the workload patterns will dictate the optimal hosting strategy.

In the first example, the workload will make very high use of resources for a defined period of time, and then can be turned off. This “time boxed” pattern means that public cloud IaaS will likely provide a cost benefit, as you only pay when the instance is turned on. The second example, however, may be more expensive in IaaS, as it cannot make as good use of the resources allocated to it, and the cloud instances must remain running even during periods of low utilization. In this case, a virtual or containerized environment that supports resource overcommit may be a more cost-effective hosting option. And finally, if that same application were to be rewritten to scale horizontally, the math would change again. By being able to turn instances on and off as needed, public cloud IaaS would again become the favored hosting model.
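The arithmetic behind these three cases can be sketched in a few lines of Python. This is a simplified model under stated assumptions: demand is expressed in abstract capacity units per hour, every instance provides 4 units, and billing is per instance-hour. The demand profiles, the instance size, and the function names are all invented for illustration and do not come from any provider's pricing.

```python
import math
from typing import Sequence


def billed_instance_hours(demand: Sequence[float], capacity: float, elastic: bool) -> int:
    """Instance-hours billed over the period.

    elastic=True  : instances are started and stopped to follow demand.
    elastic=False : enough instances for the peak stay running the whole time.
    """
    needed = [math.ceil(d / capacity) for d in demand]
    if elastic:
        return sum(needed)
    return max(needed) * len(demand)


def utilization(demand: Sequence[float], capacity: float, elastic: bool) -> float:
    """Fraction of the billed capacity that the workload actually uses."""
    billed = billed_instance_hours(demand, capacity, elastic)
    return sum(demand) / (billed * capacity) if billed else 0.0


CAPACITY = 4  # hypothetical capacity units per instance

# Example 1: "time boxed" job, busy for 4 hours and off for the rest of the day.
TIME_BOXED = [0] * 8 + [8] * 4 + [0] * 12

# Examples 2 and 3: mostly idle application with a 4-hour daily peak.
LOW_WITH_PEAK = [1] * 20 + [8] * 4

if __name__ == "__main__":
    cases = [
        ("1. time boxed, turned off when idle", TIME_BOXED, True),
        ("2. always on, sized for peak", LOW_WITH_PEAK, False),
        ("3. same load, scaled horizontally", LOW_WITH_PEAK, True),
    ]
    for label, demand, elastic in cases:
        hours = billed_instance_hours(demand, CAPACITY, elastic)
        used = utilization(demand, CAPACITY, elastic)
        print(f"{label}: {hours} instance-hours billed, {used:.0%} utilized")
```

Under these made-up numbers, the always-on case pays for far more capacity than it uses, which is the situation where an environment that supports overcommit may be cheaper, while the time-boxed and horizontally scaled cases pay only for what they run.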

In all of these examples it should be clear that applying objective, scientific analysis to the cloud placement puzzle allows you to zero in on a choice that delivers the lowest cost with the lowest risk, taking guesswork and opinions out of the process.

In the next series of blog posts, we’ll delve deeper into the art of workload cloud placement, including strategies for right-sizing, modernizing, and eliminating “dead wood” instances.